by Pandora FMS team | Last updated Apr 29, 2025 | Community, Pandora FMS

Madrid, April 2025 – The monitoring and observability platform Pandora FMS has been recognized in 35 leading reports in the G2 Spring 2025 edition, solidifying its position as one of the most versatile solutions for managing complex IT infrastructures, hybrid environments, and critical operations.

This recognition reflects the trust of thousands of technical professionals who value its performance, scalability, and adaptability across industries such as telecommunications, manufacturing, public administration, and digital services.

Over 270 Mentions and 35 Key Reports

Pandora FMS has been featured in 277 G2 reports, with a standout presence in 35 of the most influential ones, categorized by function, region, business segment, and performance index.

Top Functional Categories:

- Enterprise Monitoring

- Cloud Infrastructure Monitoring

- Server Monitoring

- Log Monitoring

- Application Performance Monitoring (APM)

- Database Monitoring

- IT Alerting

- Remote Monitoring & Management (RMM)

- SIEM (Security Information and Event Management)

Regional and Segment-Based Reports:

- EMEA Regional Grid® Reports

- Small-Business, Mid-Market y Enterprise Grid® Reports

Performance Indexes:

- Usability, Results, Relationship, Implementation, Momentum Grid®

Cybersecurity Focus: 5 Key SIEM Reports

This year, Pandora FMS strengthens its momentum in the cybersecurity space with simultaneous recognition in five key SIEM reports:

- Momentum Grid®

- Enterprise Grid®

- Small-Business Grid®

- EMEA Grid®

- Usability Index for SIEM

This progress reinforces Pandora FMS’s commitment to security-focused monitoring with early threat detection, event correlation, log analysis, and end-to-end visibility, aligned with regulations like NIS2 and DORA, both centered on digital resilience and traceability.

“Thanks to Pandora FMS, we track our construction equipment in real time, including excavators and cranes. It’s been crucial to preventing critical failures.”

— Loring D., Process Engineer

Verified Reviews: High User Satisfaction

User ratings collected on G2 emphasize an outstanding user experience:

- 98% believe the product is headed in the right direction

- 94% highlight the ease of doing business

- 94% say it meets their requirements

Feedback from Verified Users:

“It helps us ensure system uptime and improve incident response from a single console.”

— Henry R., IT Administrator

“What we value most is the customization. We create tailored dashboards and integrate with Slack and Jira with no hassle.”

— Nina S., Mobile Developer

“As an internet provider, we need to ensure the availability of our servers. Pandora FMS covers everything—network, servers, applications, and web services.”

— Christian N., IT Security Supervisor

“A true AIO (All-In-One) software that lets you manage everything from a unified console—with maps, alarms, auto-discovery, and excellent support.”

— Pedro G., Developer

A Modular, Scalable, and Vendor-Neutral Solution

With a modular, cross-platform, and vendor-neutral approach, Pandora FMS adapts to:

- On-premise, cloud, and hybrid environments

- Legacy systems and modern container-based infrastructures

- Monitoring of networks, servers, databases, logs, applications, and business processes

- Integration with ITSM tools, CMDBs, DevOps platforms, and alerting systems

All managed from a single control panel to enhance visibility, reduce incidents, and support better operational decision-making.

“Pandora FMS automates repetitive tasks and enables us to deliver high-quality service in demanding environments.”

— Omar B., Electrical Engineer

Leading Positions in G2® Spring 2025 Reports

With 277 total reports and 167 badges, Pandora FMS has reached an unprecedented level, earning the #1 position in over 25 key reports, outperforming long-established competitors across multiple functional and user experience categories.

Reports Where Pandora FMS Ranks #1:

- Momentum Grid® Reports: APM, Enterprise Monitoring, IT Alerting, Server Monitoring

- Usability Index: Server, Enterprise y Cloud Infrastructure Monitoring, SIEM

- Results Index: SIEM

- Small-Business Grid® Reports: Enterprise Monitoring

- Mid-Market Usability Index: Enterprise Monitoring

- Enterprise Relationship Index: SIEM

Reports Where Pandora FMS Ranks Second or Third:

- Momentum Grid® Report for Cloud Infrastructure Monitoring

- Relationship Index for Database Monitoring

- Usability Index for APM

- Implementation Index for SIEM y Network Monitoring

- Results Index for Cloud Infrastructure Monitoring

- Small-Business Relationship Index for SIEM

These achievements position Pandora FMS as one of the top-rated solutions for technical performance, user experience, scalability, and support.

Spanish Technology with Global Reach

“The G2 results validate our team’s ongoing effort to build a flexible, scalable platform focused on technical users. We continue listening to our customers to evolve alongside them.”

— Kornelia Konstantinova, CMO at Pandora FMS

With over 50,000 active installations in more than 60 countries, Pandora FMS continues to grow as a solid alternative to global vendors, helping organizations of all sizes build more resilient, efficient, and secure infrastructures.

Want to Learn More?

Visit Pandora FMS’s official G2 profile and discover why thousands of users consider it one of the most complete and effective solutions on the market.

Read reviews on G2 →

by Pandora FMS team | Mar 11, 2025 | Pandora FMS, Releases

In the recent NG 781 RRR update, Pandora FMS has significantly enhanced its Discovery system with the powerful NetScan feature, making it even easier to automatically detect and comprehensively monitor technological assets in complex networks.

What’s New with NetScan in Discovery?

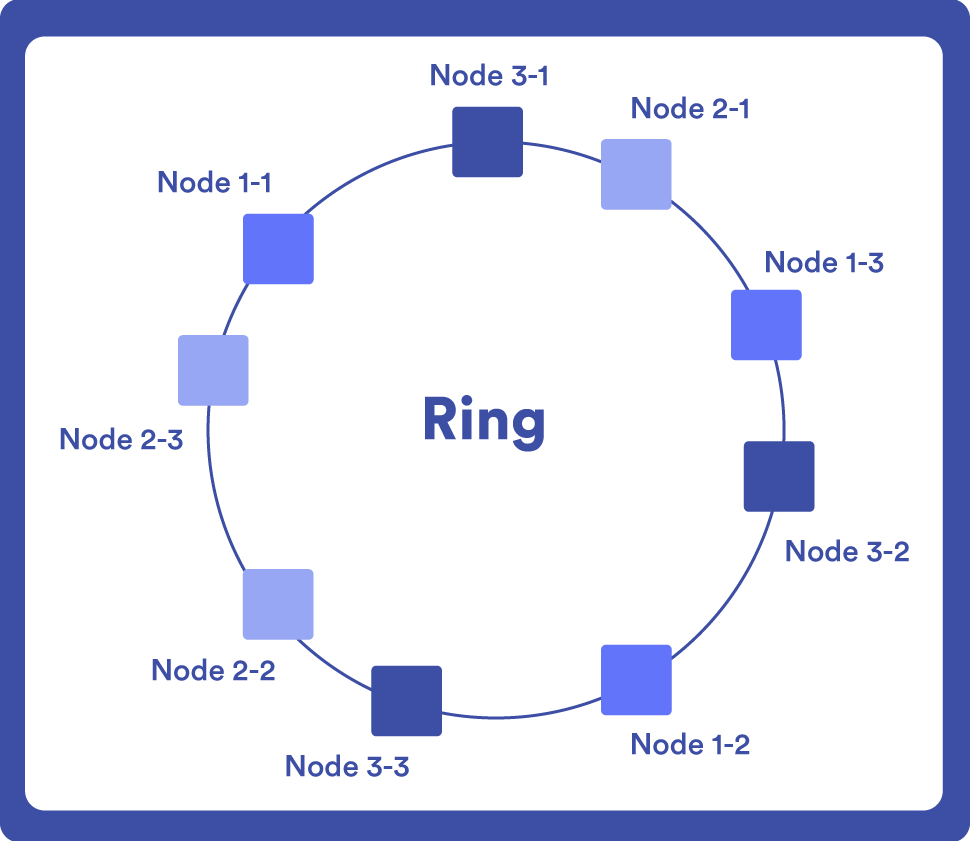

NetScan enables automatic device discovery in networks, determining their topology, and applying monitoring immediately. This feature may be configured in two modes, tailored to different needs:

- Simple Mode: It automatically detects all networks accessible from Pandora FMS server through traceroute to the Internet (8.8.8.8), a review of the local routing table, and direct scanning of connected networks.

- Advanced Mode: It allows detailed parameter customization such as specific networks (CIDR), exclusion of particular IPs, advanced SNMP scanning, WMI, name resolution, and operating system detection.

Key Advantages of the Updated Discovery

- Automation and Speed: It significantly reduces the time spent on manual processes.

- Validation and Control: Manually verify which assets are added after the scan.

- Automatic Network Maps: Instantly visualize the topology of discovered assets.

- Advanced Auto-Configuration Rules: Automatically apply specific policies, execute scripts, or trigger custom events based on detected assets.

Visual and Functional Improvements in Network Maps

Network maps have been redesigned to offer a better visual experience and navigation:

- Intuitive Grid for Relocation and Organization: It simplifies arranging and managing network elements.

- Clear Visible Boundaries: It enables more precise asset management.

- Dynamic and Optimized Generation: It uses methods like spring 1 and radial dynamic for efficient map creation.

These maps are synchronized with Discovery results, updating automatically with each scan execution.

How to Get the Most Out of It

This version is specially conceived for administrators managing large or dynamic environments, optimizing resources through the automation of initial asset discovery and enabling a more proactive and strategic monitoring approach.

The Discovery update in Pandora FMS NG 781 RRR transforms operational management, significantly boosting efficiency from day one.

Explore all the new features in our full release notes and start optimizing your monitoring today!

by Isaac García | Mar 10, 2025 | Pandora FMS

I have a not-so-secret suspicion that the dream of everyone working with technology is the Enterprise computer from Star Trek. Controlling shields, communications, engines, and everything else from a single place—and with voice commands, no less. “One button to rule them all,” as Sauron might whisper. But until that utopia becomes a reality, at least we can implement a hyperconverged infrastructure (HCI) in our organization’s technology stack.

This hyperconverged infrastructure allows unified management of:

- Computing.

- Networking.

- Storage.

It may not be as exciting as warp drives or interstellar navigation, but the real question is: how do we manage such different aspects in a homogeneous way?

With a common solution in technology: creating an abstraction layer. By introducing an intermediary—a software layer that consolidates all these disparate components through virtualization. The hypervisor, as part of this virtualization, acts as the enabler that allows all these resources to be managed from a single location. A sort of universal translator, another Star Trek dream.

Thus, multiple technologies (hyper)converge into a unified control and management system.

This isn’t just a technological evolution—it’s a paradigm shift: switching from managing individual components to orchestrating a cohesive system, where the infrastructure responds as a whole.

Advantages of a Hyperconverged Infrastructure Over a Traditional One

For those dealing with the heterogeneous chaos of a traditional IT infrastructure, the previous explanation may have sparked a few lightbulbs, highlighting the benefits of such a solution, including:

Simplicity. In both infrastructure and management, meaning there is no need to have in-depth knowledge of each individual component, integrate heterogeneous hardware, or track countless firmware updates. Instead, you only need to understand the software that manages everything.

Let us look at a simple comparison to illustrate this advantage:

|

Traditional Infrastructure

|

HCI

|

|

Fragmented Silos: Physical servers, storage (SAN/NAS), and networks managed by separate teams and tools.

|

Unification: Computing, storage, and networking integrated into standardized nodes managed by software.

|

|

Example: A VMware cluster with NetApp storage and standalone Cisco switches.

|

Example: Nutanix or VMware vSAN, where storage is distributed across local disks within nodes.

|

Scalability. Being able to resize modularly or add and remove features as needed, in a faster and more cost-effective manner. For instance:

|

Traditional Infrastructure

|

HCI

|

|

Adding storage requires expanding the SAN, while scaling computing power involves adding new servers.

And that comes with the risk of overprovisioning, as capacity is purchased “just in case.”

|

Adding standardized nodes increases all resources (CPU, storage, and network) simultaneously.

In this case, we scale on demand, without wasted resources (or their associated extra costs, of course).

|

Efficiency. It enables centralized and simplified management, automation, and reduced hardware usage.

|

Traditional Infrastructure

|

HCI

|

|

Multiple consoles: vSphere for VMs, NetApp OnCommand for storage, Cisco Prime for networking…

|

Unified management: A single interface for provisioning, managing, and monitoring the whole infrastructure.

|

|

Slow response times: Coordinating teams to solve incidents.

|

Native automation: Predefined policies (e.g., auto-repair of nodes).

|

When it comes to choosing an HCI solution, each organization must consider its needs and how the different available options align with them. Some of these options include:

- VMware vSAN. Particularly suited for those already familiar with VMware solutions.

- Nutanix. Ideal for organizations heavily relying on cloud and multi-cloud infrastructures.

- Microsoft Azure Stack HCI. Integrated within Azure Local, catering to the widespread Redmond ecosystem in many organizations.

- HPE SimpliVity. Focused on AI-driven management, a growing trend in these solutions.

However, regardless of the chosen solution, they all share a common challenge…

The Challenge of Monitoring Hyperconverged Infrastructures

The biggest advantage of HCI is that everything is in one place. Its biggest challenge is also that everything is in one place.

No, that was not a typo.

The fact that all critical components are centralized presents a major challenge in monitoring them effectively due to the sheer number of different factors to control.

Everything falls under a single umbrella, but that “everything” is vast, and the sheer volume of information and data can be overwhelming. This is where the concept of metric overload comes into play—when faced with an ocean of indicators, identifying the crucial data points becomes essential, but also incredibly complex.

This is where the critical value of a specialized monitoring system comes in. Much like how HCI simplifies infrastructure management, a tool like Pandora FMS makes it easier to monitor multiple key variables efficiently, ensuring visibility and control over the entire system.

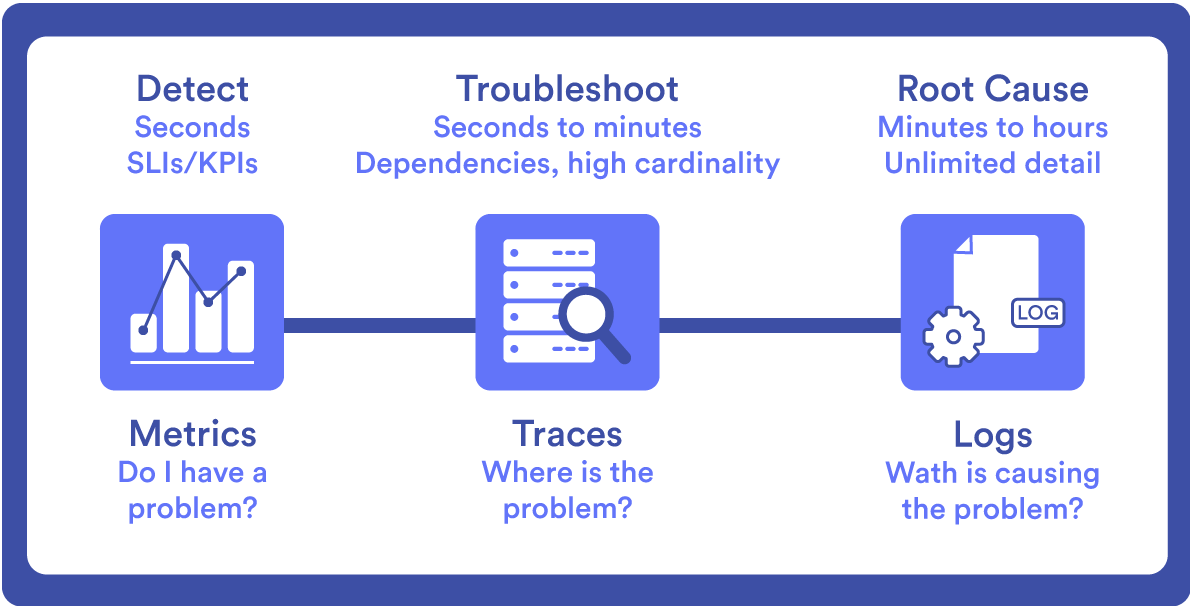

Challenges in HCI Monitoring and Observability

Those working with Hyperconverged Infrastructures (HCI) often encounter common issues due to the nature of the solution, as some critical layers become somewhat more opaque due to integration.

This creates monitoring challenges, such as:

- Lack of visibility into HCI performance. It’s great that everything works in a unified manner, but is it actually performing well? Do we have key performance indicators (KPIs) readily available? Are there automated alerts to notify us immediately when something goes wrong?

- Difficulties in predicting performance degradations. For example, two virtual machines (VMs) competing for storage on the same HCI node can easily degrade the overall infrastructure. But how do we know if this will happen? And more importantly, how can we confirm if this is the actual cause of the performance drop we are experiencing?

- The need to correlate metrics across storage, networking, and virtualization.

- Lack of granular visibility. If we are experiencing latency issues, for instance, how do we determine whether there is a bottleneck in the underlying physical network or a software-related problem?

- The issue of component interdependence. Since a failure in an HCI node simultaneously impacts compute, storage, and networking, it can trigger a domino effect across the entire infrastructure.

- Metric overload. Monitoring not only VMs/containers but also hypervisors, distributed storage pools, East-West internal traffic, or Quality of Service (QoS) policies can quickly overwhelm monitoring systems.

How Does Pandora FMS Help with HCI Monitoring?

Pandora FMS serves as the key to overcoming these challenges because we have experienced them firsthand—and that frustration led us to develop an expert solution. While HCI solutions include built-in monitoring, they often come with common limitations:

- Basic capabilities. Most HCI platforms offer simple monitoring, preconfigured alerts, and limited integrations. These are useful within their own ecosystem, but when external technologies come into play, collaboration becomes impossible.

- Fragmented visibility. Many HCI monitoring tools lack data correlation from other infrastructures. What happens if we cannot avoid that legacy server in the basement—the one nobody knows how it got there, but is still critical and marked with the terrifying sign: “Do not unplug”? Additionally, dashboards remain fragmented in a multi-vendor environment (e.g., Nutanix + Proxmox).

- Limited customization. Many tools fail to provide custom alerts tailored to an organization’s specific operations.

This is where a tool like Pandora FMS, designed for expert monitoring of hyperconverged infrastructures (HCI), provides the ideal solution by enabling:

- Real-time observability. Unified multi-environment metrics, since HCIs rarely exist in isolation. Pandora FMS ensures seamless monitoring across hybrid and multi-cloud environments.

- Virtualization monitoring. Thanks to its native integration with VMware, Proxmox, Nutanix and KVM.

- Storage and network supervision. Tracking latency, availability, and resource usage.

- Anomaly and incident detection. Featuring event correlation and advanced alerts that identify root causes, not just detect symptoms.

- SIEM and log integration. Providing security and event auditability, key for critical industries operating under NIS2 regulations, which require strict compliance and controls. Without proper log unification good luck explaining missing data to an auditor who, for some reason, never blinks.

- Trend analysis and capacity planning. With the implementation of machine learning for optimizing that capability more simple and adequate.

When it comes to something as critical as monitoring hyperconverged infrastructures (HCI), it’s not just about “calling in the specialist”—like Pandora FMS. Its implementation goes even further, as it allows you to centralize all critical information, not only from HCI but also from other key technological elements such as laptops, additional servers or even mobile devices.

This naturally completes the HCI philosophy, because in a way, Pandora FMS enables the hyperconvergence of monitoring itself in a single, unified place.

The long-awaited “One Ring” to monitor them all.

(Pandora FMS does not include a magic ring. Our legal team insists we clarify this.)

Benefits of Using Pandora FMS in HCI Environments

The quality of decisions depends on the quality of the information available to make them. This is one of the key strategic advantages that Pandora FMS provides.

When monitoring Hyperconverged Infrastructures (HCI), this translates into direct benefits such as:

- Faster incident response times.

- Optimized performance and failure prediction.

- Enhanced security through event correlation and suspicious activity monitoring.

- Compatibility with multiple technologies without requiring additional tools.

- Reduced operational costs, through automation and better resource allocation.

- Scalability without impacting operations

And above all, a sense of control, sanity, and peace of mind—because you always know exactly what’s happening.

In an increasingly complex and diverse environment, hyperconverged infrastructures help optimize and simplify. And just like in Inception, the film by Christopher Nolan, we can take one level deeper in this journey toward hyperconvergence—by optimizing and simplifying the monitoring of these infrastructures with Pandora FMS.

Without this, operating efficiently and staying at the forefront of our industry would be impossible.

If you want to learn more about Pandora FMS in Hyperconverged Infrastructures, do not hesitate to contact us, we will prove these words with actions.

by Isaac García | Mar 3, 2025 | Pandora FMS

Endpoints are the primary target of cyberattacks. The most conservative estimates indicate that between 68% and 70% of data breaches begin on these devices. This is why implementing an EDR (Endpoint Detection and Response) solution is crucial to protect them in today’s cyber threat landscape.

An EDR is an advanced security tool installed on the end devices of the technological infrastructure (personal computers, servers, phones…) that monitors their activity in real-time, providing visibility into exactly what is happening on each of these endpoints.

This makes it possible to detect, analyze, and respond to security threats proactively and smartly. In case of an incident, it also allows the response team to have all the necessary information to dig into and solve the issue.

This goes beyond the capabilities of a traditional antivirus, which is normally used to protect some endpoints but falls short in the current security context faced by organizations.

How an EDR Works

The features of an EDR and the level of endpoint security they provide ultimately depend on each manufacturer, but they all rely on three fundamental pillars, which help to understand how they work and the protection they offer:

- Activity monitoring on a steady basis: This includes everything from processes to device connections, collecting data and analyzing it intelligently.

- Threat detection: When the monitoring system detects abnormal behavior, such as lateral movements, malware, phishing attempts, and other malicious actions.

- Automated response to threats: This may involve isolating the compromised device from the rest of the network, blocking suspicious processes, or deleting harmful files.

EDRs differ from traditional antiviruses not only in their detection capabilities (being able to face unknown and sophisticated threats) but also in their response capabilities, such as isolating a device from the network. On the other hand, antivirus usually quarantines or deletes an infected file at best.

For instance, a malicious actor might create a new type of malware conceived to retrieve critical data from an organization, such as credentials or privileged information.

While an antivirus might not recognize malware and allow it to operate unchecked, an EDR can detect malicious file’s activity, such as data leaks. It can then stop the process if it detects an unknown connection and a massive flow of data going to it.

A similar situation could take place if data exfiltration is attempted by a disgruntled employee without any malware involved.

A certain user might try to copy information to an external device. While an antivirus wouldn’t react to this, an EDR could detect the connection of a USB drive or the unusual behavior of a large-volume data transfer, and then take the appropriate action against this suspicious activity.

Differences Between Security Management and Infrastructure Management

To ensure optimal endpoint protection and overall system security, it is key to understand the difference between these two concepts and ensure they are aligned.

Infrastructure management aims to ensure that the technological environment works properly and supports the organization’s goals. However, this objective is compromised if security is not also a key consideration.

On the other hand, security management involves implementing measures and policies to protect the infrastructure, such as integrating SIEM (Security Information and Event Management) and EDR (Endpoint Detection and Response) solutions. However, it is not the same to secure a straw building thrown together haphazardly as it is to protect a well-planned stone castle.

Likewise, an adequately managed technological infrastructure will make the following possible:

- Security management.

- Integrated operation of EDR and SIEM.

- The effectiveness of the blue team, if present.

- Incident response.

Let’s look at an example illustrating the difference between a well-managed infrastructure and an unprotected one.

Imagine an environment with proper network segmentation, strong device access controls, and a consistent patch management policy.

Even if an infrastructure element fails (for instance, a delayed firmware update on an IoT device due to a vulnerability), if that device has been configured with appropriate network and access policies, it will still contribute to overall security. This setup reduces the likelihood that a malicious actor who compromises that endpoint can move laterally to another, more critical part of the network.

Moreover, if this proper infrastructure management is combined with effective security management using an EDR integrated with a SIEM solution, any attempt at unusual lateral movement would be detected, alerted, and mitigated.

Conversely, if that IoT device still uses the default username and password (an all-too-common situation unfortunately) or has unrestricted network access, a malicious actor will have significant opportunities to move through the network to critical systems or compromise the device in other ways, such as spying through a webcam.

Infrastructure Management Approaches to Strengthen Security

Continuing with the previous analogy, how do we build our castle with robust stone and a resilient design?

An effective infrastructure management strategy would involve the following practical approaches:

- Strict update and patching policies: To prevent malware or exploit techniques from taking advantage of vulnerabilities in outdated versions. This includes updating both software and firmware on endpoints.

- Optimal network design: By properly segmenting networks and ensuring that each device has access only to what is strictly necessary for its function—both in terms of data and communication with other devices.

- Implementation of SIEM solutions: To collect data on what is happening within our infrastructure, consolidate that information for the Network Operations Center (NOC) analyze it, and alert on any suspicious activity.

- Log monitoring and analysis policies: To detect anomalies within those logs. Currently, security policies allow companies to meet the highest security standards and certifications, such as ISO 27001 as well as government regulations like the new NIS2, which is being implemented by lots of companies.

With these measures in place, our infrastructure becomes more resilient to attacks while continuing to fulfill its primary purpose: supporting organizational goals and workflows.

There is often talk of having to choose between security and convenience or security and performance, but this is a false dichotomy. Proper infrastructure management supports both security and operability—there is no need to choose between them. While system infrastructures and hybrid environments make it hard to get a unified overview, Pandora FMS unifies data sources and allows centralized management.

How Different EDRs and Antivirus (A/V) Solutions Approach Security

Although we often talk about EDRs and antivirus solutions as two general approaches to endpoint security, not all products are created equal.

Therefore, it is essential to understand the key features of each solution and how they may vary depending on the manufacturer.

|

EDR

|

Antivirus

|

|

Constant activity monitoring on endpoints to detect suspicious behaviors.

|

It scans files and applications looking for known malware brands.

|

|

It uses behavioral analysis to identify unknown threats.

|

It uses file definition databases to identify known malware.

|

|

Some options use predictive AI, such as Pandora FMS, to detect and make decisions.

|

Some manufacturers use heuristics (suspicious behavior predefined rules), an older technology that generates more false positives.

|

|

Sophisticated automated response: it may isolate devices, block suspicious processes and generate advanced alerts (the scope of said response will depend on the features of each manufacturer).

|

Limited automated response to quarantine or infected file deletion.

|

|

Advanced forensics capabilities, logging everything that happened to make audits easier as well as the work of the incident response team.

|

Forensics capabilities limited to logging basic detections.

|

|

Active and reactive protection.

|

Reactive protection based on the definition file.

|

|

High integration capacity with SIEM and the infrastructure in general.

|

Limited integration.

|

This last aspect of SIEM and EDR integration is critical today and the key to security in such a constantly evolving environment.

However, on the other side of the scale, the capabilities of antivirus solutions are much more limited, both in terms of the information they can send to a SIEM and their integration capacity with these systems. Additionally, some antivirus solutions are prone to compatibility issues with the rest of the technological or security infrastructure, leading to conflicts with firewalls or other protection tools.

Advantages and Disadvantages of an EDR Compared to a Traditional Antivirus

The above does not mean that everything is that positive in the case of EDRs, so an impartial analysis should put these advantages on the table, but also the disadvantages and challenges.

Advantages of an EDR Compared to an Antivirus

- Advantages of an EDR Compared to an Antivirus against both known and unknown threats.

- More advanced automated incident response capabilities.

- Enhanced security management through detailed visibility into exactly what is happening on each endpoint.

Disadvantages of an EDR Compared to an Antivirus

- More complex to implement and manage.

- It requires skilled personnel to interpret and respond to incidents, as well as for installation and integration, especially in on-premise solutions.

- Generally higher cost.

Advantages of an Antivirus Compared to an EDR

- Easier and faster to implement.

- Effective against known malware and common threats.

- More affordable than EDRs, and sometimes even free.

Disadvantages of an Antivirus Compared to an EDR

- Insufficient protection in the current cybersecurity landscape, especially for scenarios beyond low-risk individual users.

- It may cause management issues, such as false positives or conflicts with other applications.

- Very limited response capability to security incidents.

Practical Approaches for Endpoint Security in On-Premise Environments

Whether due to legal requirements, such as protecting and managing sensitive data, or due to a strategic technology approach, such as the need for greater control or equipment performance, on-premise solutions are gaining appeal compared to a 100% cloud-based approach.

Therefore, it is important to consider these fundamental strategies for successfully implementing EDR solutions in on-premise environments.

- Analysis and Assessment of Infrastructure Needs. Every truly strategic action, of any kind, begins with this step. It is essential to have a thorough understanding of your network, its critical assets, and the primary threats you face, which will shape a significant part of your specific threat model, differing from that of other organizations.

- Choosing the Right EDR Solution. Based on the conclusions from the previous point and your budget.

- Initiating a Testing Phase. In a controlled environment that allows you to evaluate whether the chosen solution is appropriate.

- Establishing a Gradual Deployment Strategy. Even if tests are successful, it is crucial to proceed gradually to identify and solve any issues and challenges that will inevitably arise.

- Integration with Other Tools. Particularly with SIEM, configuring rules and verifying their effectiveness.

- Setting Up a Robust Monitoring and Auditing Policy. The tool alone is ineffective without a solid process behind it, making it essential to systematize monitoring and control tasks.

- Establishing Contingency Plans. What would happen if everything failed? Security must always consider this question, even when applying best practices, as the probability of unexpected black swan events is never zero. For such scenarios, it is necessary to have a “red button” plan that allows for operation continuation and the restoration of data and infrastructure as quickly as possible.

While the on-premise approach is gaining traction again, nothing is absolute, so a hybrid solution can also be considered.

Therefore, here are the differences between a 100% on-premise implementation, a hybrid one, and a 100% cloud-based solution.

- 100% On-Premise: The security infrastructure is located within the organization’s premises. Its main benefit is complete control over data, devices, and security, as well as potentially better performance and lower latency. However, the challenge is that it is more expensive in terms of economic and human resources. These resources, besides being more numerous, also require higher qualifications and will perform more intensive management tasks. It is worth noting that, often due to ENS or NIS2 requirements, certain pieces of infrastructure must be on-premise.

- Hybrid Implementation: It combines on-premise and cloud elements. The key is to leverage the best of both worlds, for example, by keeping sensitive data locally while managing threat analysis and response in the cloud. A well-planned hybrid approach allows cost reduction and increased flexibility. The biggest challenge is that we will not rely solely on ourselves, as there will be points of failure beyond our control.

- 100% Cloud-Based: Its main benefit is reduced economic and human costs, as well as lower technological complexity, which rests with the cloud provider. The downside is that we place the most critical aspects in the hands of third parties, in whom we must trust. And in case of an incident, we also depend on their response capabilities.

This is no small matter, and the echoes of July 19, 2024, still resonate in every security manager’s mind. On that morning, millions of Windows systems displayed the infamous blue screen of catastrophic failure, caused by a faulty remote update from CrowdStrike, one of the most well-known EDRs.

How Pandora FMS Enhances Endpoint Security

Throughout this journey, we have emphasized that EDR solutions are more advanced but only as effective as the real-time monitoring and threat detection capabilities we have in place.

This is where the next link in the security chain connects: with a flexible monitoring system like Pandora FMS, which complements endpoint security.

How?

- By integrating with Pandora SIEM, which collects and centralizes everything, providing a clear overview of what is happening at all times.

- Through log analysis and audits, which further strengthen endpoint protection. Every company is unique, as are its specific threats. This means that we must have complete visibility into our infrastructure, its unique characteristics, and any suspicious deviations from the norm, which will differ from those of other organizations.

- With advanced security event correlation, to effectively identify anomalies in our specific case and respond appropriately.

- Through seamless integration with network devices and firewalls, ensuring that everything operates smoothly.

- By collecting events from agents on multi-platform endpoints (Windows, macOS or Linux).

As we have seen, for any organization that takes security seriously, using EDR along with a SIEM strategy is essential.

The cyber threat landscape changes frequently and quickly. Attacks are becoming more frequent, and malicious actors are getting more sophisticated. Supported by the emergence of AI, even adversaries with limited technical knowledge can now modify malware to compromise defenses and evade traditional detection systems, such as antivirus solutions. They can even create new malicious programs from scratch.

Therefore, threats that were once exclusive to highly skilled and motivated actors are now within reach of many. This underscores the importance of designing our infrastructure with resilience in mind and integrating security measures capable of anticipating this ever-changing landscape.

Without this approach, we risk facing an increasingly hostile and complex environment unprotected every single day.

by Isaac García | Last updated Feb 26, 2025 | Pandora FMS

The days when an antivirus and common sense were enough to guarantee an organization’s cybersecurity are long gone. Especially if you work in a critical sector. That’s why the NIS2 Directive (2022/2555) of the European Union establishes cybersecurity obligations for these key activities… and the consequences of non-compliance.

These consequences are significant, so let’s analyze the regulation, when it applies, and how to implement it.

What is the NIS2 Directive and What Changes from NIS1?

Increasingly sophisticated malicious actors (state-sponsored or otherwise), the omnipresence of malware, and the proliferation of data breaches make one thing clear:

The European Union must enhance its cybersecurity management in critical sectors, and the NIS1 directive was no longer sufficient.

For that reason, the new NIS2 Directive was approved in November 2022, affecting what are known as essential and important entities. Member states are now implementing it according to the following timeline:

- 16/01/2023. Entry into force.

- 18/10/2024. Repeal of NIS1. Adoption and publication of measures by member states.

- 17/01/2025. National CSIRT (Computer Security Incident Response Team) networks begin operations, and the sanctioning regime is established.

- 17/04/2025. Deadline for compiling the register of essential and important entities. Communication of their number to the Commission and Cooperation Group. Start of evaluations of national cybersecurity strategies (at least every 5 years).

What Changes from NIS1?

- Stricter security requirements.

- Increased compliance control.

- Higher penalties (up to 10 million euros or 2% of revenue for essential entities, and 7 million euros or 1.4% of revenue for important entities).

- Expansion of what is considered an essential or important entity.

This last point is crucial because many organizations that were not covered under NIS1 are now within the scope of NIS2.

Who Is Required to Comply with the NIS2 Directive

The directive generally applies to medium and large companies, whether public or private, that operate in highly critical sectors (as defined in Annex I of the Directive) and other critical sectors (as listed in Annex II).

Therefore, the first factor to consider is the size of the organization:

- A medium-sized company has between 50 and 250 employees and a turnover of up to 50 million euros, or a balance sheet exceeding 43 million euros.

- A large company exceeds these thresholds: more than 250 employees and a turnover or balance sheet of 43 million euros or more.

And which sectors are considered critical and highly critical?

The list is more extensive than that of NIS1, which, for example, classified the energy sector as highly critical. Now, under NIS2, this category has been expanded to include urban heating and hydrogen systems, which were excluded under NIS1.

Annex I establishes that highly critical sectors include:

- Energy

- Transportation

- Healthcare

- Banking

- Drinking and wastewater

- Financial and digital infrastructure (domain providers, cloud services, etc.)

- B2B ICT service management

- Certain public administrations

- Space

Annex II includes as critical sectors:

- Postal and courier services

- Waste management

- Manufacturing, production, and distribution of chemicals and food

- Manufacturing of key products (medical devices, electrical and electronic products, IT equipment, machinery, and transportation)

- Digital service providers (search engines, online marketplaces, and social media providers)

If an organization is medium or large and operates in these sectors, NIS2 should be at the top of its priority list.

Our practical recommendation is to download this guide from INCIBE, which provides a clearer breakdown of these Annexes by activity. That way, you’ll only strain your eyes as much as necessary while navigating the lines of the Directive.

NIS2 Compliance for Small Businesses and Microenterprises

After reviewing the above, the question is clear: “If I am a microenterprise or small business, does this mean I am exempt?”.

The correct answer is the most dreaded one: “It depends.”.

The law states that small businesses and microenterprises that play a key role in society, the economy, or certain types of essential services are also required to comply.

These are considered critical entities (as defined in Article 6 of Directive EU 2022/2557), which provide essential services where “an incident would have significant disruptive effects”. In such cases, even a smaller organization would fall under the directive’s scope.

However, NIS2 itself, in Recital 20, implicitly acknowledges that this definition is complex. For this reason, each EU member state must determine whether a small business is critical and provide it with guidelines and information to ensure compliance.

Practical Recommendation: If there is any suspicion that the above might apply to an organization, no matter how small, it is best to check with INCIBE or a similar agency in the relevant country.

Key Cybersecurity Requirements of NIS2

If an organization is required to comply, the next logical question is: «To what exactly?».

The regulation establishes minimum requirements in Article 21.2. These aim to unify European security standards and cover the entire cybersecurity process: from prevention to incident response, including information system defense, business continuity assurance, and staff awareness and training.

Each EU country must integrate NIS2 into its national laws, and the directive leaves room for interpretation, which creates uncertainty in the daily work of CISOs. This is especially challenging when the directive states that measures must be: proportional to the size, cost, and risk of the activity and take into account the state of the art.

As is always the case with technology, practical guidelines cannot be too specific because, by the time they are written down, they are likely already outdated. Hence the phrase “taking into account the state of the art,” which essentially means staying at the cutting edge of technology.

Moreover, what is considered proportional may be subject to the interpretation of the authority enforcing the regulation. Therefore, it is wise to err on the side of caution with these practical considerations.

System Security According to NIS2

Organizations must demonstrate their capability to defend critical infrastructure, which involves two main aspects.

The first aspect is building a strong infrastructure, essentially a castle with resilient walls, well-managed by the NOC (Network Operations Center), which mainly involves:

- Hardening servers and endpoints, securing each element with best practices.

- Effective access management to those walls, with multi-factor authentication and a strict access and identity policy for both users and devices.

- Encryption systems, backups, redundancies, and other necessary measures for resilience and business continuity as required by NIS2.

The second aspect is that once these robust walls are built, they must be actively defended, which includes:

- Using EDR (Endpoint Detection and Response).

- Implementing Intrusion Detection and Prevention Systems (IPS/IDS) for proper security monitoring.

- Utilizing SIEM (Security Information and Event Management).

Incident Response and Management According to NIS2

This is another key area of the law, requiring:

- Rapid and clear communication of incidents (to the previously mentioned CSIRT) within 24 hours or less from the moment of discovery.

- Proper management of these incidents.

Given the significant penalties imposed by NIS2 for non-compliance in these areas, it is worth exploring this topic in greater depth.

SIEM and IPS/IDS as Key Elements for Complying with the NIS2 Directive

For a critical organization, using SIEM systems and threat detection systems is essential for achieving adequate defense.

When combined with EDRs that protect endpoints, and IDS and IPS that operate at the network and host levels, a system like Pandora SIEM becomes the brain of your security operation, because it:

- Collects logs: From networks, servers, and even the office coffee machine—because someone thought it was a good idea to buy a “smart” one.

- Correlates events: If someone in Bangladesh accesses the server in Barcelona and that “employee” downloads a suspicious file, the SIEM connects the dots and takes action, alerting and mitigating the threat. Pandora’s AI features, for example, make that correlation even more effective.

- Generates automatic reports, so you don’t have to burn your eyes staring at Excel during an audit.

In this way, you ensure that you are always considering “the state of the art” and its ongoing advancements.

Log Collection Requirements Under NIS2

We may excel in security, but the old proverb always holds true: It’s not a question of if an incident will happen, but when it will happen.

The incident response and communication requirements set by NIS2 necessitate proper log collection, storage, and management, which will also be crucial for passing the mandatory reviews and audits.

Yes, Chapter VII (Article 32) is explicit about this, and organizations must be able to pass these audits and reviews—even in the absence of incidents. This means collecting, storing, and easily reviewing logs while ensuring their integrity and authenticity.

For a critical organization, this requires professional tools that make this process seamless.

The temptation to use free applications is strong, but they are insufficient against today’s threats facing key sectors… and they won’t help avoid the auditor raising an eyebrow and reaching for the “non-compliant” stamp.

How Pandora FMS and Pandora SIEM Help Ensure NIS2 Compliance

Anything less than best security practices, supported by advanced tools, is insufficient for a critical organization. It complicates operations and makes legal compliance challenging.

That’s why Pandora SIEM provides:

- Advanced security monitoring with real-time threat detection.

- AI-supported security event correlation, leading the way in best practices and security technology as required by NIS2.

- Audit-ready reporting to demonstrate regulatory compliance during audits and controls.

- Centralized log collection and analysis with long-term retention, enabling clear communication in the event of an incident and easier incident resolution by quickly identifying what happened, how, and where.

NIS2 is Europe’s answer to an increasingly turbulent global cybersecurity landscape. But let’s face it: technology laws often lag behind and can sometimes be ambiguous in scope and interpretation.

This creates nightmares for CISOs and compliance professionals, but the solution is clear: Stay ahead of the legislation.

Lead the way in best practices and tools, so that when the next regulation arrives with its thousand pages of rules, you are already a step ahead—and it doesn’t come crashing down on your head.

This way, you’ll ensure that legal requirements don’t join forces with malicious code to complicate your day-to-day operations.

by Pandora FMS team | Last updated Feb 13, 2025 | ITSM

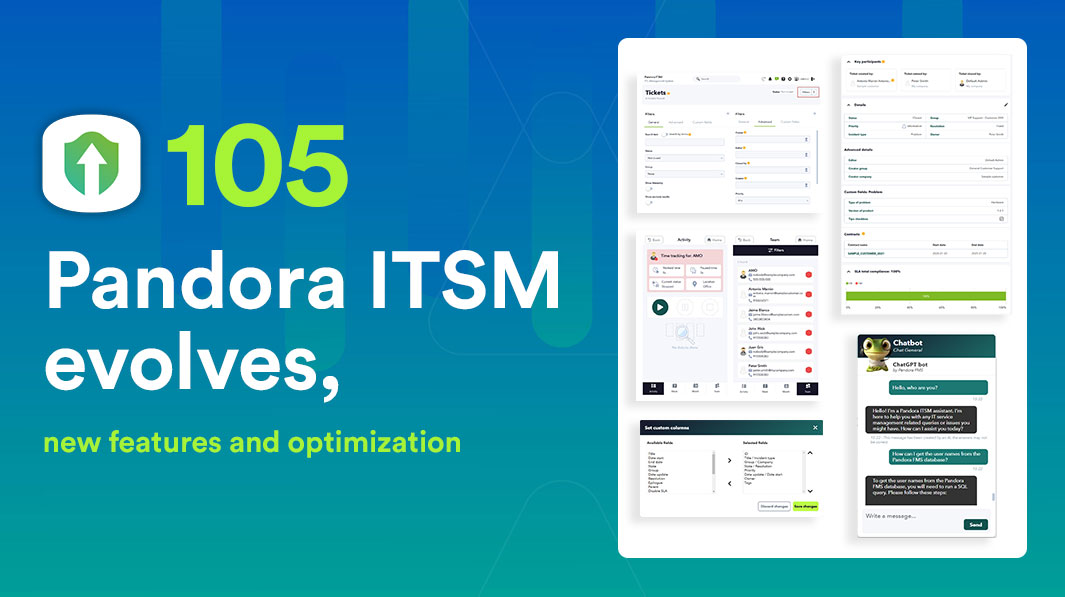

With the new Pandora ITSM version 105, you now have features designed to improve your workflow and optimize ticket and project management.

Key Enhancements in Pandora ITSM 105

New Filtering System

You can now filter and view results more efficiently in Tickets, Users, Project Board, Contracts, and Invoices. This system will expand to more sections in future versions, allowing for greater flexibility in daily management.

Enhanced Ticket View

The layout of fields in tickets has been reorganized to improve visibility and ease of use. Additionally, a new contracts section has been included to streamline access to relevant information, ensuring a better user experience.

Customizable Ticket Design

You can now rearrange, add, or remove fields in the ticket view according to your needs, with real-time editable settings through the new filters. This flexibility allows each team to tailor Pandora ITSM to their specific workflow.

Mobile Timetracker Optimization

The mobile version of the timetracker has been redesigned to be more intuitive and functional. All options available in the web console have been incorporated, ensuring that users can efficiently manage their time anytime and from any device.

ChatGPT Support in Chat

ChatGPT has been integrated into Pandora ITSM’s chat feature, providing quick and accurate responses to technical or support inquiries. This integration enhances user assistance and facilitates real-time issue resolution.

Tags in Tickets and Projects

It is now possible to add tags to tickets and projects, making it easier to categorize and search for relevant information. This feature allows for quicker access to work items and improves internal organization.

New Project Management View

With a visual interface similar to Trello, this new view simplifies task and project management with tags, custom statuses, and greater organizational flexibility. Additionally, it enables easy task movement between columns for more dynamic tracking.

New Workflow for Timetracker

The timetracker workflow has been optimized, allowing the configuration of alerts and automated reminders to improve workday tracking. It is now possible to schedule notifications that warn about work-hour limits or forgotten check-ins.

Improvements in Management and Security

New Licensing System

Starting with this version, clients who update will need to request a new license through the Warp Update > License menu. This change ensures greater security and control over active licenses on the platform.

Database Update

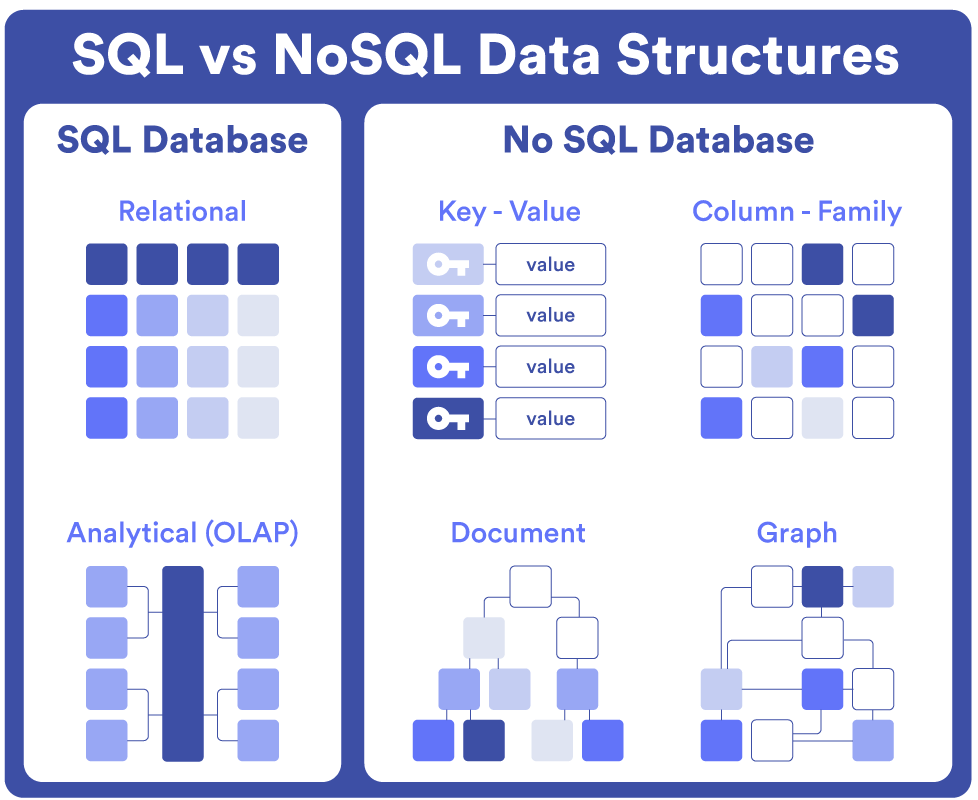

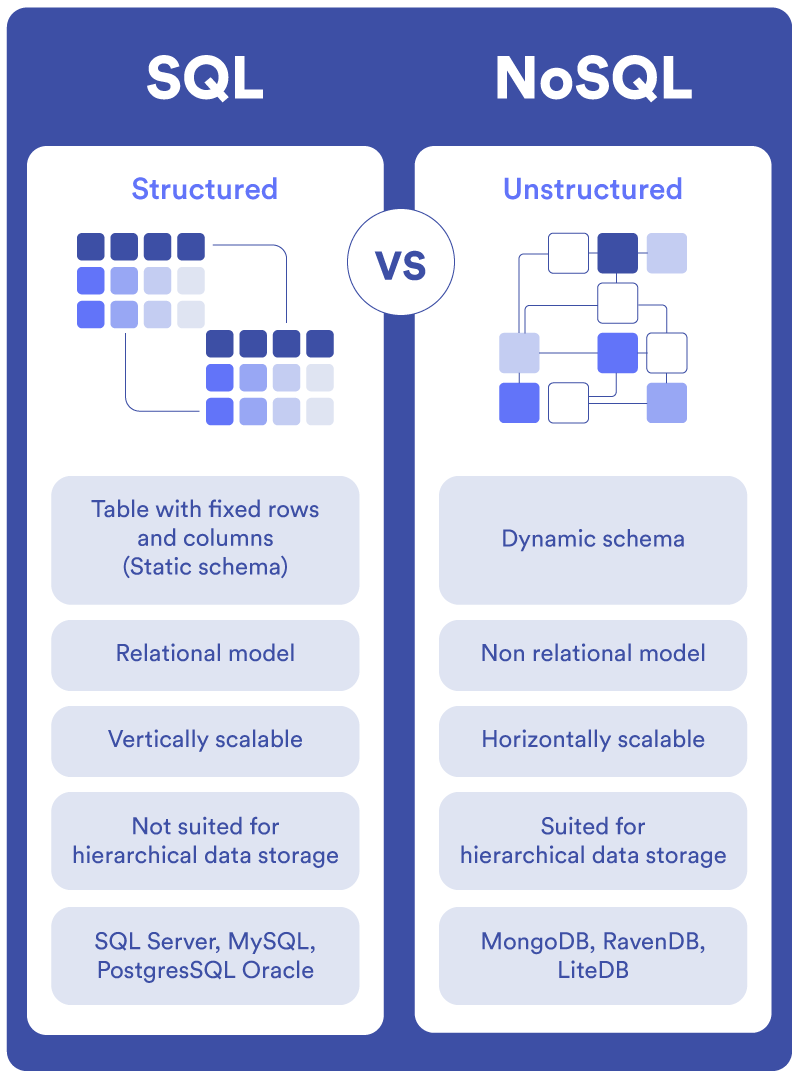

To optimize performance and compatibility, upgrading to Pandora ITSM 105 requires migrating from MySQL 5 to MySQL 8. This update ensures greater stability and performance in data management, improving the overall operation of the platform. Refer to the official documentation for more details.

Other Improvements and Fixes

- A new notification section has been added for super administrators.

- Enhancements in data export and report customization.

- Optimized interface for work unit management.

- Updated integration with third-party systems for better compatibility.

Explore all the new features of Pandora ITSM 105 and optimize your team’s management.

Visit our official Wiki or check the technical documentation for more details on this version.

by Sancho Lerena | Last updated Feb 4, 2025 | Pandora FMS

In the world of infrastructure management and enterprise software, the choice between on-premise and SaaS (Software as a Service) solutions has become a strategic decision for every organization, influencing key areas such as security, flexibility and operational costs.

Both models offer different approaches to software implementation and usage. While SaaS stands out for its accessibility and ease of adoption, the on-premise model provides absolute control over data and infrastructure, making it particularly relevant in industries where security and regulatory compliance are top priorities.

In this article, we analyze the advantages and disadvantages of each model, discussing practical use cases and offering strategic advice to help organizations make informed decisions. Regardless of your needs, understanding these approaches will enable you to optimize your infrastructure management effectively.

What Is the On-Premise Model?

The term “on-premise” refers to a model in which software is installed and operated on an organization’s local servers. Instead of relying on an external provider for hosting and application management, the company purchases a perpetual license and maintains full control over its infrastructure and data. This means that all resources required to operate the system—hardware, storage, network, and IT staff—are under the direct control of the organization.

For example, a financial sector company may choose an on-premise model to ensure that its sensitive data remains entirely within its domain and complies with strict local regulations.

In the field of infrastructure management software, this model is common in tools such as:

- ITIM (IT Infrastructure Management): Monitoring and optimizing critical infrastructures, such as servers and networks.

- ITOM (IT Operations Management): Automating and managing operational tasks in complex IT environments.

- SIEM (Security Information and Event Management): Enabling security monitoring and analysis.

- ITSM (IT Service Management): Managing IT services.

- CMDB (Configuration Management Database): Documenting assets and their relationships with services.

The on-premise model provides a custom and controlled experience, which many companies consider essential, especially in industries where security and regulatory compliance are critical.

What Is the SaaS Model?

The SaaS (Software as a Service) model is based on cloud-hosted applications provided as a service by external vendors. This approach is especially beneficial for companies with distributed teams or those needing to scale quickly, as it allows access to tools from anywhere with an internet connection and the ability to adjust capacity in real time according to needs.

Instead of installing the software locally, companies access it via the Internet through a monthly or annual subscription. This model is common in tools such as:

- RMM (Remote Monitoring and Management): Remote management of devices.

- APM (Application Performance Management): Optimizing application performance.

- ESM (Enterprise Service Management): Extending ITSM capabilities across the organization.

SaaS has gained popularity due to advantages such as:

- Ease of implementation: Enables quick deployment, eliminating the need for complex initial configurations.

- Lower upfront costs: Offers a low initial investment compared to on-premise solutions, with predictable recurring fees.

- Immediate and ubiquitous access: Users can access the software from any location and device with an Internet connection.

However, this model also has limitations that must be considered:

- Vendor dependency: Service interruptions or unilateral changes to contract terms may impact business operations.

- Data security risks: Sensitive information could be exposed due to security breaches or unauthorized access on the provider’s servers.

Despite its benefits, the SaaS model is not always the best choice for organizations that prioritize data sovereignty or require high levels of customization.

Criteria Comparison: On-Premise vs. SaaS

|

Criteria

|

On-Premise

|

SaaS

|

|

Initial Implementation

|

Requires time for local installation and configuration.

|

Quick implementation and immediate access via the cloud.

|

|

Costs

|

High initial investment but predictable in the long run.

|

Low initial costs, but recurring fees that may vary.

|

|

Security

|

Full control over infrastructure and data.

|

Risk of data exposure due to reliance on an external provider.

|

|

Flexibility

|

Complete customization and integration with legacy systems.

|

Limited to the provider’s standard capabilities.

|

|

Vendor Dependency

|

Low, as the organization has full control.

|

High, including support and service continuity.

|

|

Updates

|

The company decides when and how to apply updates.

|

Automatic, but they may be disruptive.

|

|

Scalability

|

Internal control over infrastructure, scaling as needed.

|

Immediate scalability, but subject to provider limitations.

|

|

Regulatory Compliance

|

Ensures data sovereignty and facilitates legal compliance.

|

May be challenging to meet local or industry-specific regulations.

|

|

Performance

|

Minimal latency impact when operating on internal networks.

|

Dependent on the quality of the internet connection.

|

Real Cases: The Hidden Risks of SaaS

Although the SaaS model offers significant benefits, it also presents risks that can severely impact an organization’s operations if proper precautions are not taken. Below are five real cases illustrating the most common challenges associated with this model:

- Critical vendor dependency:

A large retail company experienced a multi-day outage due to a massive failure in its SaaS provider’s servers. The lack of local data backups and an internal contingency system brought operations to a standstill, resulting in multimillion-dollar losses.

- Unexpected pricing policy changes:

A tech startup saw a sudden 40% increase in its SaaS provider’s fees without prior notice. Due to its deep integration with the tool, it was unable to migrate quickly, directly impacting its profit margins.

- Data loss due to provider shutdown:

A small business relying on a niche SaaS solution lost all its data when the provider abruptly ceased operations. Without an external backup plan, the losses were irreparable.

- Exposure to security attacks:

An educational institution suffered a cyberattack that compromised sensitive information stored on the SaaS provider’s servers. This incident led to legal and regulatory issues that damaged its reputation.

- Integration limitations:

A manufacturing company had to invest in costly adaptations to integrate its local ERP system with a SaaS tool that was not natively compatible with its legacy systems.

These examples highlight the importance of conducting a thorough analysis before adopting SaaS solutions. Evaluating factors such as vendor dependency, data security, and compatibility with existing infrastructure is crucial. Additionally, implementing mitigation strategies like local backups and risk assessments can significantly reduce the impact of these challenges.

Why the On-Premise Model Remains Relevant in the 21st Century

In an era dominated by cloud solutions, the on-premise model has evolved to remain a strategic option, particularly for organizations that value data sovereignty, security and complete control over their operations. Below is a detailed analysis of the key advantages of the on-premise model, supported by real-world cases that illustrate its relevance.

Full Control Over Data

With an on-premise model, organizations maintain absolute control over their information, preventing data from being transferred to third parties or stored in locations beyond their reach. This autonomy enhances security and ensures that, in the event of disruptions or disasters, local backups enable a complete and rapid recovery, minimizing any operational impact.

A notable example is Basecamp, which decided to return to local models after facing issues with SaaS solutions. Their decision was driven by concerns over data security and the need for total operational control.

Similarly, Dropbox migrated much of its infrastructure from the public cloud to its own data centers in 2016. This transition not only improved operational efficiency but also strengthened its technological sovereignty by managing critical resources internally. These strategies demonstrate how the on-premise model can be a vital tool for organizations that prioritize the protection of sensitive data.

Advanced Customization and Flexibility

The on-premise model offers a unique level of customization, allowing organizations to tailor their systems entirely to their specific needs. From technical configurations to deep integrations with legacy systems, this approach is ideal for companies with complex operational processes or unique requirements.

A relevant example is Airbus, which chose on-premise solutions to manage its critical IT infrastructure. This decision enabled the company to integrate its legacy aerospace production systems, ensuring operational continuity and optimizing processes without the constraints imposed by SaaS solutions. This level of customization makes on-premise a key tool for industries that require solutions tailored to their operational reality.

Technological Sovereignty

By operating with an on-premise model, companies eliminate dependence on external providers and gain the freedom to define their own technological strategies. This includes controlling update schedules, adjusting configurations according to their needs, and avoiding the risks associated with unilateral changes in policies or pricing.

For instance, in 2016, Dropbox launched the “Magic Pocket” project, migrating a significant portion of its infrastructure from the public cloud to its own data centers. This move not only reduced operational costs but also granted the company absolute control over its critical systems and data. This level of technological independence is essential for businesses that prioritize long-term stability and security.

Guaranteed Regulatory Compliance

In sectors such as banking, healthcare, and government, regulations often require that data remain within national borders or comply with specific security measures. The on-premise model provides a clear advantage by allowing organizations to manage their data within local infrastructures, ensuring complete control over storage, access, and protection.

For public administrations, regulatory compliance is not only a legal obligation but also a matter of trust and sovereignty. This model enables governments and public agencies to ensure that sensitive information, such as citizen data or tax records, is not transferred to third parties or hosted outside the country, minimizing legal and strategic risks.

As a result, many public institutions have chosen to implement on-premise solutions, prioritizing information security and rigorously complying with local and international regulations.

Predictable Long-Term Costs

The on-premise model requires a high initial investment in infrastructure and licenses, but it offers financial predictability that many businesses consider essential. Unlike the SaaS model, which relies on recurring payments and is subject to sudden price changes, the on-premise approach eliminates these variable costs and allows organizations to maintain direct control over operational expenses.

According to an article from Xataka, an increasing number of companies are repatriating their infrastructure from the cloud to on-premise environments due to unexpected and uncontrolled cloud service costs. This phenomenon, known as “cloud repatriation,” highlights the need for many organizations to regain financial and strategic control over their technology systems.

Additionally, a Forbes analysis explains that while cloud services may appear more cost-effective initially, operating expenses tend to increase over time, especially for organizations with intensive workloads. In contrast, the on-premise model enables businesses to plan their technology investments more precisely, without being influenced by external factors that may impact their budgets.

For example, industries such as banking and healthcare, which have stringent regulatory and operational requirements, have found the on-premise model to be a more sustainable solution. These sectors have migrated from the cloud to local infrastructures to avoid cost fluctuations and ensure regulatory compliance. A Leobit study supports this trend, emphasizing that many organizations find the return on investment (ROI) of on-premise solutions superior to cloud solutions when evaluated over a period of more than four years.

Finally, it’s crucial to note that the decision between SaaS and on-premise should not be based solely on annual or monthly cost comparisons. A medium- to long-term strategic analysis shows that on-premise solutions are often significantly more cost-effective for companies seeking financial stability and full control over their technology assets.

Higher Performance in Internal Networks

On-premise solutions, operating directly on a company’s local infrastructure, offer significant advantages in terms of performance, particularly in reducing latency and dependence on an Internet connection. By working on local networks, these solutions ensure faster response times and more consistent performance compared to cloud-based applications.

A notable example mentioned in Puppet is the case of companies like Basecamp, which decided to repatriate their infrastructure from the public cloud to on-premise data centers. This move allowed them to regain control over their infrastructure, enhance security, and reduce vulnerabilities while implementing customized measures to comply with local regulations.

Additionally, according to a report by EETimes, 83% of CIOs plan to repatriate workloads to local infrastructures in 2024. The main reasons for this trend include performance control, reduced operational costs, and improved security for critical data.

An analysis by The New Stack also highlights how companies in sectors such as finance and telecommunications are reevaluating exclusive cloud usage, favoring a hybrid or fully on-premise approach to optimize the performance of critical applications and reduce latency in their operations.

These examples reflect a growing trend toward workload repatriation, where companies seek to balance the advantages of the cloud with the need for total control and greater operational efficiency that on-premise solutions provide.

Compliance with Specific Regulations (Sovereignty and Local Regulations)

On-premise solutions offer significant advantages in terms of regulatory compliance, especially in industries where regulations require that data remain within national borders. This approach allows companies to ensure that sensitive information is not transferred to foreign servers, minimizing legal and strategic risks—an essential factor in sectors such as banking, healthcare and public administration.

A Capgemini analysis indicates that many organizations have chosen to repatriate their data from cloud environments to local infrastructures to comply with strict sovereignty regulations. This move has enabled them to implement more specific and customized measures that ensure the protection of sensitive data against vulnerabilities inherent in shared cloud environments.

In this context, the use of on-premise Security Information and Event Management (SIEM) systems has proven to be a crucial solution for meeting regulatory requirements and ensuring data sovereignty. For example, tools like Pandora SIEM not only offer a highly customizable and reliable platform but also allow data to remain within the company’s infrastructure, ensuring security and regulatory compliance.

Similarly, solutions such as OpenText ArcSight™ Enterprise Security Manager stand out for their ability to facilitate compliance with local regulations while maintaining absolute control over critical information.

These examples underscore how on-premise solutions are essential for companies operating in regulated sectors, providing them with the necessary tools to ensure strict regulatory compliance. Additionally, they reinforce the operational autonomy of organizations, ensuring that sensitive data remains under their direct control.

Custom Scalability

On-premise solutions allow companies to exercise complete control over their technological infrastructure, offering scalability tailored to their specific needs. This level of customization eliminates the limitations often imposed by SaaS providers, giving organizations the flexibility to adjust their resources as their operations evolve.

A well-known industry example is Spotify, which, after migrating to AWS in 2011, decided in 2018 to repatriate some of its streaming services to its own data centers. This move enabled them to regain greater control over the scalability of their systems, ensuring both service quality and operational cost optimization.

Security

On-premise solutions stand out by allowing companies to fully manage the security of their systems, providing absolute control over their technological infrastructure. This approach enables the implementation of customized security controls specifically designed to protect sensitive data, which is crucial in industries with strict security and privacy regulations, such as finance, healthcare, government, and defense.

In response to security incidents in cloud environments, many companies have chosen to migrate to on-premise solutions. This transition has allowed them to strengthen the protection of critical data, implementing tailored security measures that surpass the limitations of shared cloud environments.

Seamless Integration with Legacy Systems

On-premise solutions are particularly well-suited for companies that rely on legacy systems, as they allow for deeper and more controlled integration with existing technological infrastructures. This approach not only facilitates operational continuity but also optimizes the use of prior technology investments, ensuring that current systems can evolve without disruptions or incompatibilities.

By operating in an environment fully managed by the organization, the on-premise model provides the flexibility needed to adapt solutions to the specific requirements of legacy systems. This is essential for companies with critical processes that depend on established technologies and need to minimize risks associated with complex migrations or compatibility failures.

Final Reflections on Software Ownership

The on-premise model grants companies full ownership of software licenses, a crucial advantage for avoiding the risks associated with external control that characterizes SaaS models. This approach is especially valuable in environments where stability and long-term predictability are essential for business operations.

Full Control Over Licenses

Once acquired, an on-premise software license is not subject to unexpected price adjustments imposed by external providers. This control allows companies to plan their technology investments without surprises, ensuring greater financial and operational stability.

Unexpected Price Changes

SaaS models give providers the ability to unilaterally modify pricing, which can negatively impact a company’s operating costs. Additionally, these adjustments are often accompanied by mandatory updates that may not always be relevant or necessary for the customer.

A notable example occurred in 2020, when Adobe increased subscription prices for Creative Cloud, sparking complaints among business users who relied on these tools. Many companies, including small design firms, opted for perpetual software versions or alternative solutions that they could manage internally to avoid these additional costs.

Service Discontinuation

Another risk associated with the SaaS model is the potential discontinuation of service by the provider, which could disrupt critical operations. Companies that rely on these tools are subject to the strategic decisions of providers, putting the continuity of their processes at risk.

For example, in 2019, Google decided to discontinue Google Cloud Print, leaving many organizations without a suitable solution for printing in complex enterprise environments. In response, several of these companies migrated to on-premise solutions, ensuring continuity and full control over their operations.

(Supposed) Advantages of the SaaS Model Over On-Premise

Immediate Access and Global Availability

SaaS allows users to access the solution from anywhere at any time, requiring only an internet connection. This is ideal for organizations with distributed teams or mobility needs.

That is simply not true: While SaaS facilitates access, on-premise solutions can also provide remote access through configurations such as VPNs or secure portals. Moreover, these options are often more secure, as the traffic is controlled and protected by the company’s internal infrastructure, rather than relying on general security measures implemented by a third-party provider.

Low Initial Costs

SaaS does not require a large initial investment in hardware or perpetual licenses, as it is based on a recurring fee. This makes adoption easier for companies with limited budgets.

Caution! An on-premise model does not always mean high initial costs. Companies can reuse existing infrastructure and start with licenses scaled to their current needs. Additionally, trial periods and initial versions of on-premise solutions are often just as accessible as SaaS. In the long run, recurring SaaS costs can far exceed the initial investment in on-premise solutions.

Fast Implementation

SaaS solutions are ready to use almost immediately, without the need for lengthy installation or configuration processes on local servers.

Be careful! While SaaS implementations are often fast, they frequently require “onboarding” processes that involve external consulting and specific adjustments, adding hidden costs and extra time. On the other hand, well-planned on-premise systems can be configured quickly and offer the advantage of being fully adapted to local environments from the start.

Easy Scalability

SaaS makes it simple to increase or decrease capacity based on business needs, paying only for what is used. This allows companies to handle demand spikes without acquiring additional infrastructure.

Keep in mind that many SaaS systems are designed for a specific range of customers, primarily small and medium-sized businesses (SMBs), and may not scale effectively for large enterprises. In contrast, on-premise solutions enable controlled and optimized scalability, free from the technical or commercial limitations imposed by an external provider.

Automatic Updates

Software updates and enhancements are implemented automatically, ensuring that users always have access to the latest version without interruptions or additional costs.

While this is a relevant advantage, on-premise solutions can also include vendor-managed updates through comprehensive support contracts. The key difference is that with on-premise, the company decides when to implement updates, avoiding unwanted disruptions that often occur with automatic SaaS updates.

Reduced IT Workload

Infrastructure management, system maintenance, and technical issue resolution are the provider’s responsibility, freeing up internal IT resources.

Just like the previous point, an on-premise support contract can cover infrastructure management and maintenance, allowing the internal team to focus on other tasks. The key difference is autonomy: with on-premise, the company retains full control over its systems and decisions.

Easier Integration

Many SaaS solutions are designed to integrate quickly with other tools through APIs, simplifying interoperability in complex environments.

However, SaaS integrations are often limited to standard and widely used solutions, excluding proprietary systems or specific technologies that are not prioritized by SaaS developers. On-premise solutions, on the other hand, allow for deep and customized integrations with legacy systems, making them better suited to the unique needs of each company.

Built-in Backup and Disaster Recovery

Most SaaS providers include advanced backup and disaster recovery solutions as part of the service, eliminating the need for internal management of these tasks.

However, this depends entirely on the provider, as data security is fully in their hands. This can be problematic since the provider’s liability is usually limited to the subscription fee paid, which does not reflect the true value of a company’s data. Additionally, many SaaS providers restrict the ability to perform full data backups, effectively tying businesses to the provider’s ecosystem.

Cost Predictability

Subscription models allow for predictable operating expenses, with fixed monthly or annual fees that simplify financial planning.

However, perpetual on-premise licenses also offer cost predictability, as support costs remain stable and updates are optional. In contrast, SaaS providers can unilaterally change their pricing and policies, leaving businesses without viable alternatives.

When calculating costs over four years, it’s nearly impossible to do so accurately with SaaS solutions (as most providers will only offer projections for up to three years). Meanwhile, on-premise projects commonly establish financial plans spanning 10 years, ensuring long-term stability.

Access to Advanced Technologies

SaaS platforms often adopt emerging technologies quickly, such as artificial intelligence or advanced analytics, ensuring that businesses have access to innovations without making additional investments.

While emerging technologies are appealing, SaaS environments tend to focus on the latest trends, often neglecting more mature and well-established technologies that many businesses still rely on. This can lead to compatibility issues and a lack of support for existing systems.

Conclusion

The SaaS model offers clear advantages in terms of accessibility, low initial costs, and reduced maintenance, but these benefits often come with significant limitations. On-premise solutions provide greater control, security, and flexibility, which can outperform SaaS in many scenarios, especially when customization, deep integration, and data sovereignty are critical factors.