Version control system, authoritarianism and freedom to code

Version control is essential to software development today: it is the basis for the continuous release and deployment of software.

In a previous article, we described how in Pandora FMS NG we are committed to increasing our flexibility and adding better features (and, of course, fixing programming bugs as soon as possible). As we write these lines, we have released version 723… But what do we mean by version control? Let’s find out…

Introduction

As you know, Pandora FMS has two components: the open source side and the Enterprise version. The first one is fully functional and must be installed even for the Enterprise version, although it is much more limited. If it is better suited to your needs, we recommend “Enterprise”: it has consulting and support for customers, and the right features to manage your entire fleet of ships… we mean, computers!

A brief history

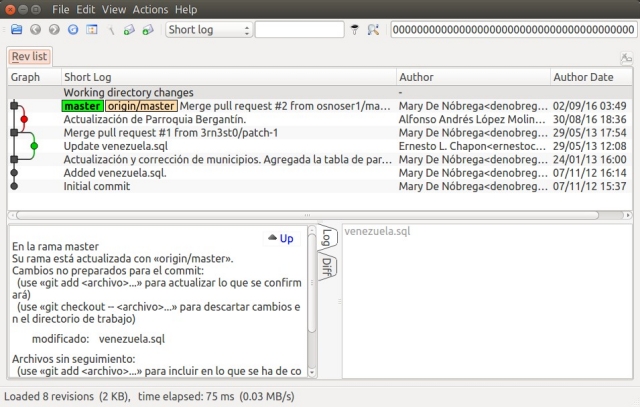

At this point we haven’t told you about version control, but here we go. Our open source version is hosted online, where you can check the different components line by line, command by command, and where we frequently publish modifications to the Pandora FMS system; that, in fact, is a version control system.

How did we get to this point, this way of working and maintaining our beloved algorithms? Let’s look back and see an overview. Keep your minds open.

The 1980s

Yes, we lived during those days when the use of spreadsheets was the “killer application” that drove the massive purchase of personal computers for small businesses and some homes. If we wanted to keep a “version control” of our documents, we would simply copy them to another floppy disk, making a folder with the corresponding title, accompanied by date and time (also in the name of the directory).

The problem came later: we had to know which disk held something we had erased or cut. The most experienced users labelled an album of disks for each week or month with the name of the owner and the period covered; as we can see, we were already giving shape to a rudimentary version control. In case you are wondering, the situation was not very different in companies and universities for shared documents.

The 1990s

With the increased power of computers, and the growing size and affordability of hard disks, we kept everything there in different directories, as we mentioned. The Internet was booming all over the world and, with faster processors, we could use programs that compress and decompress files while preserving the structure and hierarchy of the directories that contained them. Some programs, such as word processors, started to keep an optional internal version history in the same file that contained the document, but it was bulky and slow.

At this time, companies went further and started using proprietary software with the client-server model; we’re not ashamed to say that we used “Microsoft Visual SourceSafe®” (GNU/Linux was still in its early stages). The Unix environment was the most advanced, but always with a centralized system in mind: the veteran “Concurrent Versions System”, or CVS, had been around since 1986 as free software for several platforms (and no, there was nothing better than that, although in 2000 a slightly better replacement would come along).

At that time we believed that proprietary software was unbeatable, until the “Y2K” millennium bug came along and made us realize that, in any similar situation, we were helpless: without access to the source code, we could not correct errors that might cause our systems to collapse.

21st century: the 2000s

Local area networks were already established, even at home, and had been used by large and small enterprises and educational institutions for a long time. Sharing networked hard disks was easier, and the famous removable memory sticks were used to move files more quickly, as the Internet was not as fast as it is today (we will say the same in ten years’ time). Nothing seemed to disturb our apparently ideal situation and, just when we thought that everything had already been invented…

Linus Torvalds got his hands on version control. To make a long story short, Mr. Torvalds had accumulated a great deal of experience developing the Linux kernel and knew all the issues involved in managing and operating version control for it, a job nearly as demanding as programming the kernel itself. He needed a break: from 1998 to 2002 the community of enthusiasts and followers grew, and they had a lot to contribute to the culture and emergence of free software. Although we were not directly part of the process, this was the beginning of the end of authoritarianism and the start of the freedom to code; everyone could contribute their proposals through a version control system. Subversion, an open source version control program, was available, but it was a faithful reflection of what we told you about the previous decades: directory and file structure management and centralized administration.

The best option at the time was a proprietary version control program called BitKeeper (ironically, released as free software in 2016) from the company BitMover, and a somewhat leonine agreement (which the Father and Founder, Richard Stallman, never liked) was reached with the “Linux” community.

One day, after three years and hundreds of written gigabytes, the arrangement with that corporation and all the constraints it imposed fell apart: Mr. Andrew Tridgell (creator of rsync and Samba) wrote “SourcePuller”, a free client able to talk to BitKeeper servers. That was the last straw: divorce was inevitable.

So, one day in 2005, Mr. Torvalds informed the world not of a new program but of a paradigm and protocol in version control: Git was born.

Git, version control

By taking advantage of the experience gained with rsync, it was possible to build on a solid foundation and unify all the concepts, wishes and corrections that until then had been, in short, a “digital jumble”. Excellence means turning all of this into a project that is open to all, and making it happen. We stand by what we say: ideas in this globalized and connected world are endless; the difficult thing is to put in the work, commitment, time, money and effort to make them happen, and that’s what they did so well.

- The most important thing: decentralization of projects. Moreover, projects as such do not exist; a directory contains a set of files important to us, whether they are related to each other or not. That’s no problem for Git, which has no hierarchy (the SourceForge website, which uses Subversion, still requires a project to be created as a condition for publishing).

- It is focused on performance, without neglecting safety. One program that inspired Git was Monotone, which stores everything in a database that is cloned by each of the participants. This means that the files are contained in larger files (databases) that need to be synchronized and, once the data integrity checks are added, valuable time is lost there; that is the cost Git set out to avoid.

- Development is not linear (Git didn’t actually invent this, it already existed): we can take provisional development branches from any point or snapshot in the repository, and then either integrate them into the main branch or simply discard them.

- Fully distributed: each person has a complete copy of the repository. Even so, Git is still incredibly fast at creating and managing branches.

- No transport protocol is imposed either: rsync is no longer used, in favor of HTTP, HTTPS, FTP or SSH, and a custom transport can even be implemented if needed, for example over a web socket. Git even provides a compatibility layer that emulates a CVS server.

- It also detects transfers that fail or are left incomplete, which could otherwise compromise the integrity of the data.

- Another feature, which other programs have also had for a long time, is labelling each set of modifications uploaded to the Git server, so that we can later request it or simply read the description of the changes made.
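To make some of these features concrete, here is a minimal sketch of branching, merging, labelling and cloning in a throwaway directory. The repository, file, branch and tag names (`demo`, `notes.txt`, `experiment`, `v0.1`) are only illustrative assumptions, and this is just one of many possible workflows.

```shell
#!/bin/sh
# Sketch only: repository, file, branch and tag names are hypothetical.
set -e
work=$(mktemp -d)                    # throwaway directory for the demo
cd "$work"

git init -q demo
cd demo
git config user.name "Demo User"     # local identity, needed to commit
git config user.email "demo@example.com"

echo "first line" > notes.txt
git add notes.txt
git commit -q -m "Initial snapshot"
main_branch=$(git symbolic-ref --short HEAD)   # "master" or "main", depending on Git settings

# Non-linear development: a provisional branch taken from the current snapshot
git checkout -q -b experiment
echo "second line" >> notes.txt
git commit -q -a -m "Try an idea on a side branch"

# Integrate it into the main branch (deleting the branch instead would discard it)
git checkout -q "$main_branch"
git merge -q experiment

# Label this set of modifications so that it can be requested later
git tag -a v0.1 -m "First labelled snapshot"

# Fully distributed: a clone carries the complete history and the tags
git clone -q "$work/demo" "$work/copy"
copy_commits=$(git -C "$work/copy" rev-list --count HEAD)
copy_tags=$(git -C "$work/copy" tag --list)
echo "commits in clone: $copy_commits, tags: $copy_tags"
```

The clone is made from a local path only to keep the sketch self-contained; the same command accepts an HTTPS or SSH address.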

These are just a few relevant features. The topic is complex, but there is a lot of information on the Internet if you want to learn more about it.

Git Server

Nowadays we can store our own repositories, the work we develop (documents, programming, spreadsheets, etc.), on our own Git server. Just take an old computer with a decent hard drive connected to the local area network and install Debian GNU/Linux or, even better, Ubuntu. In the command terminal we will simply run:

sudo apt-get install git-all

We set up the configuration and credentials, and we are ready to manage our work in an organized manner. If we want a nice graphical viewer to see the changes, we can install QGit:

sudo apt-get install qgit
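As for the configuration step mentioned above, it might look roughly like the following sketch. Every path, name and address in it is a hypothetical placeholder, and a temporary directory stands in for the old computer’s disk so that the example can be run anywhere.

```shell
#!/bin/sh
# Sketch only: every path, name and address below is a hypothetical placeholder.
set -e
base=$(mktemp -d)                    # stands in for the old computer's disk
mkdir -p "$base/srv"

# On the server: a bare repository (history only, no working files)
git init -q --bare "$base/srv/project.git"

# On a workstation: the identity recorded in our commits
git init -q "$base/work"
cd "$base/work"
git config user.name "Jane Doe"
git config user.email "jane@example.com"

echo "hello" > README.txt
git add README.txt
git commit -q -m "First commit"

# Over the network the address would be an SSH or HTTPS URL instead of a path
git remote add origin "$base/srv/project.git"
git push -q origin HEAD              # publish the current branch under the same name

server_commits=$(git --git-dir="$base/srv/project.git" rev-list --all --count)
echo "commits stored on the server: $server_commits"
```

Here the identity is stored per repository; `git config --global` would apply it to every repository of the same user.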

Some options for hosting our repositories behind a web interface:

- GitLab Community Edition.

- Gitea.

- GNU Savannah.

- GitBucket.

- Gogs.

As with any new technology, there will always be people who turn this configuration and hosting work into a business. We’ll talk briefly about GitHub®, a company that was recently acquired by the giant Microsoft®.

Git and GitHub® as a Version Control Industry

GitHub came at the right time to save us this work and hardware, adding authentication systems on web servers and adapting the Git protocol to its interests. So far, its business model is to give free hosting to free software development and to charge for private hosting to those who develop proprietary software.

This way of working is often criticized because GitHub® contains a large number of lessons and half-finished code from students of programming languages, as well as study guides, scientific articles and even draft or follow-up legislation. Critics see this as a waste of resources, but if the GitHub® company doesn’t mind, why should it bother us? Other companies use GitHub® as a display case: they don’t actually develop the code there, but mirror what they do on their own Git servers.
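One way such a display case could be kept up to date is with Git’s mirror options. The sketch below is only an assumption about how a company might do it: two local bare repositories with hypothetical names play the roles of the internal server and the public showcase.

```shell
#!/bin/sh
# Sketch only: both "servers" are local stand-ins with hypothetical names.
set -e
base=$(mktemp -d)
git init -q --bare "$base/internal.git"   # the company's own Git server
git init -q --bare "$base/showcase.git"   # the public display case

# Some work lands on the internal server
git clone -q "$base/internal.git" "$base/dev" 2>/dev/null   # hides the empty-repository warning
cd "$base/dev"
git config user.name "Demo User"
git config user.email "demo@example.com"
echo "code" > app.txt
git add app.txt
git commit -q -m "Internal work"
git tag v1.0
git push -q origin HEAD --tags

# Mirror: copy every branch and tag from the internal server to the showcase
git clone -q --mirror "$base/internal.git" "$base/mirror.git"
cd "$base/mirror.git"
git push -q --mirror "$base/showcase.git"

internal_refs=$(git --git-dir="$base/internal.git" show-ref)
showcase_refs=$(git --git-dir="$base/showcase.git" show-ref)
if [ "$internal_refs" = "$showcase_refs" ]; then
    echo "showcase matches the internal server"
fi
```

In practice the last two commands of the mirror step would be repeated on a schedule, after a `git remote update` in the mirror clone.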

“GitHub®’s great success was to bring the general public closer to major software projects, letting them report incidents and even propose improvements. Freedom to code with fraternity and equality!”

Other alternatives to GitHub

- GitPrep.

- Kallithea.

- Phabricator.

- Tuleap.

Conclusion

This article is not intended to be the last word on the subject; books and more books have been written about version control by brainy graduates and engineers. In any case, it has served as a reminder (or an introduction, if you are a new reader) of the existence of the Pandora FMS public repositories: the most important thing is flexibility. Leave us your opinion in the comments section at the bottom of this article. We want to hear from you!

Programmer since 1993 at KS7000.net.ve (since 2014 free software solutions for commercial pharmacies in Venezuela). He writes regularly for Pandora FMS and offers advice on the forum. He is also an enthusiastic contributor to Wikipedia and Wikidata. He crushes iron in gyms and, when he can, he also goes cycling. Science fiction fan.