Customer stories

Interesting stories told by our customers and partners.

Accelerate your sales cycle and close more deals with Pandora FMS.

Developing software since 2004 with passion and talent.

Meet the amazing team behind Pandora FMS.

Dossiers and graphic resources for Pandora FMS.

Contact sales, request a quote, or ask questions about licenses.

Why Pandora FMS?

Get to know one of the most powerful monitoring tools for your organization.

Join us on our Discord channel.

Learn more about the history and values of Pandora FMS.

More than 500 plugins available for download.

A monitoring platform built to be flexible and adaptable to any type of organization.

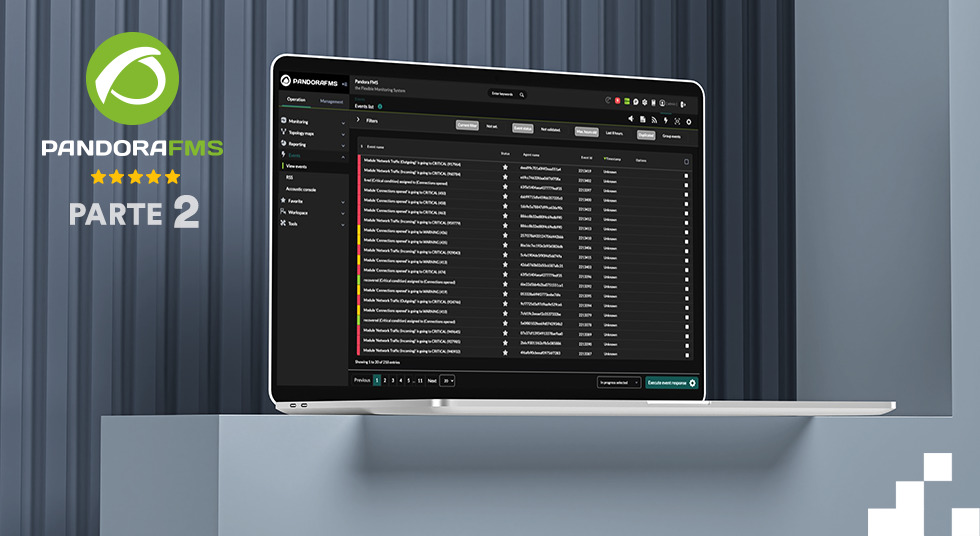

Features

Dashboards

Reports

Alerts

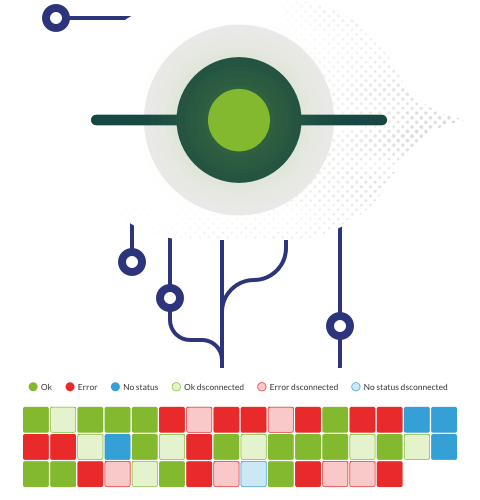

Distributed monitoring

Maximum scalability

Integrations

API

OEM

By license

On-Premise

SaaS

Open source

+500

integrations

Expand the power of your monitoring. Pandora FMS is flexible and integrates with the main platforms and cloud solutions.

Collect, centralize, and consolidate log and event data from different systems, applications, and devices in a single Pandora FMS platform.

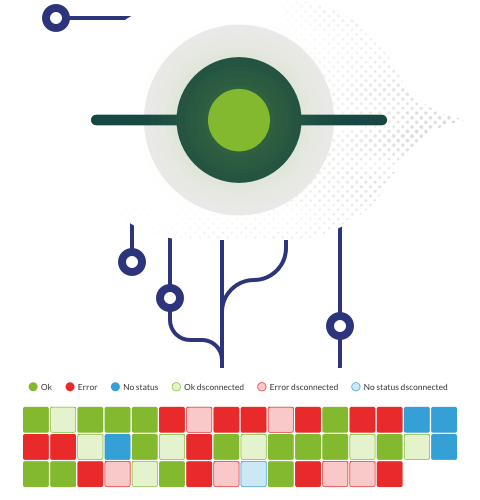

Remote monitoring and management solution for proactive oversight of devices, networks, and applications. Ideal for managed service providers (MSPs) and IT teams seeking automation, scalability, and real-time control.

Powerful and flexible helpdesk for support and customer service teams, aligned with Information Technology Infrastructure Library (ITIL) processes.

By license type

On-Premise vs SaaS

Types of user roles

Get started today!

Start your 30-day trial of Pandora ITSM! Discover all our features, no credit card required.

Remote control software for servers and Windows, Linux, and Mac systems, aimed at system technicians and managed service providers (MSPs).

By license type

Total devices

Get started!

Download the Pandora RC agents and start connecting to your remote devices online in 4 simple steps.

Interesting stories told by our customers and partners.

Accelerate your sales cycle and close more deals with Pandora FMS.

Developing software since 2004 with passion and talent.

Meet the great team behind Pandora FMS.

Dossiers and graphic resources for Pandora FMS.

Contact sales, request a quote, or ask questions about licenses.

Why choose Pandora FMS?

Get to know one of the most powerful monitoring tools for your organization.

Detailed comparison of Pandora FMS versions.

More than 500 plugins available for download.

Resources, documents, updates, and various downloads.

A monitoring platform designed to be flexible and adaptable to any type of organization.

Features

Dashboards

Reports

Alerts

Distributed monitoring

High scalability

Integrations

API

OEM

By license

On-Premise

SaaS

Open source

+500

Integrations

Expand the power of your monitoring. Pandora FMS integrates with the main platforms and cloud solutions.

Collect, centralize, and consolidate log and event data from different systems, applications, and devices in the single platform that is Pandora FMS.

Remote monitoring and management solution for proactive oversight of devices, networks, and applications. Ideal for managed service providers (MSPs) and IT teams seeking automation, scalability, and real-time control.

Features

Patch management

Installation and updates

Custom script execution

Powerful and flexible helpdesk for support and customer service teams, aligned with Information Technology Infrastructure Library (ITIL) processes.

By license type

On-Premise vs SaaS

Types of user roles

Free trial!

Start your 30-day trial of Pandora ITSM! Discover all our features, no credit card required.

Remote control software for servers and Windows, Linux, and Mac systems, aimed at system technicians and managed service providers (MSPs).

By license type

Based on total devices

Get started!

Download the Pandora RC agents and start connecting to your remote devices online in 4 simple steps.

Interesting stories told by our customers and partners.

Accelerate your sales cycle and close more deals with Pandora FMS.

Dossiers and graphic resources of Pandora FMS.

Contact sales, request a quote, or ask questions about licenses.

Why Pandora FMS?

Get to know one of the most powerful monitoring tools for your organization.

Resources

An extensive collection, from detailed guides that break down complex topics to insightful whitepapers that offer a deep dive into the technology behind our software.

Discover more →

Detailed comparison between Pandora FMS versions.

More than 500 plugins available for download.

Resources, documents, updates and various downloads.

Interesting stories told by our customers and partners.

Accelerate your sales cycle and close more deals with Pandora FMS.

Developing software since 2004 with passion and talent.

Meet the incredible team behind Pandora FMS.

Dossiers and graphic resources for Pandora FMS.

Contact sales, request a quote, or get answers to your licensing questions.

Why choose Pandora FMS?

Get to know one of the most powerful monitoring tools for your organization.

Detailed comparison of Pandora FMS versions.

More than 500 plugins available for download in our library.

Resources, documents, updates, and various downloads.

A monitoring platform built to be flexible and adaptable to any type of organization.

Features

Dashboards

Reports

Alerts

Distributed monitoring

High scalability

Integrations

API

OEM

By license

On-Premise

SaaS

Open source

+500

Integrations

Expand the power of your monitoring. Pandora FMS is flexible and integrates with the main platforms and cloud solutions.

Collect, centralize, and consolidate log and event data from different systems, applications, and devices into a single platform. Data integrates natively with Pandora FMS, with no need for additional tools.

Remote monitoring and management solution for proactive oversight of devices, networks, and applications. Ideal for managed service providers (MSPs) and IT teams seeking automation, scalability, and real-time control.

Powerful and flexible helpdesk for support and customer service teams, aligned with Information Technology Infrastructure Library (ITIL) processes.

License

On-Premise vs SaaS

User types

Start now!

Start your 30-day trial of Pandora ITSM! Discover all our features, no credit card required.

Remote control software for servers and Windows, Linux, and Mac systems, aimed at system technicians and managed service providers (MSPs).

License

Based on total devices

Take control!

Download the Pandora RC agents and start connecting to your remote devices online in 4 simple steps.

A monitoring platform built to be flexible and adaptable to any type of organization.

By license

On-Premise

SaaS

Open source

+500

Integrations

Expand the power of your monitoring. Pandora FMS is flexible and integrates with the main platforms and cloud solutions.

Collect, centralize, and consolidate log and event data from different systems, applications, and devices into a single platform. Data integrates natively with Pandora FMS agents, with no need for additional tools to capture key information.

Remote monitoring and management solution for proactive oversight of devices, networks, and applications. Ideal for managed service providers (MSPs) and IT teams seeking automation, scalability, and real-time control.

Powerful and flexible helpdesk for support and customer service teams, aligned with Information Technology Infrastructure Library (ITIL) processes.

By license type

On-Premise vs SaaS

Types of user roles

Try it for free!

Start your 30-day trial with Pandora ITSM! Discover all our features, no credit card required.

Remote control software for servers and Windows, Linux, and Mac systems, aimed at system technicians and managed service providers (MSPs).

By license type

Based on total devices

Get started!

Download the Pandora RC agents and start connecting to your remote devices online in 4 simple steps.