PostgreSQL10 logical replication. Find out all about it here

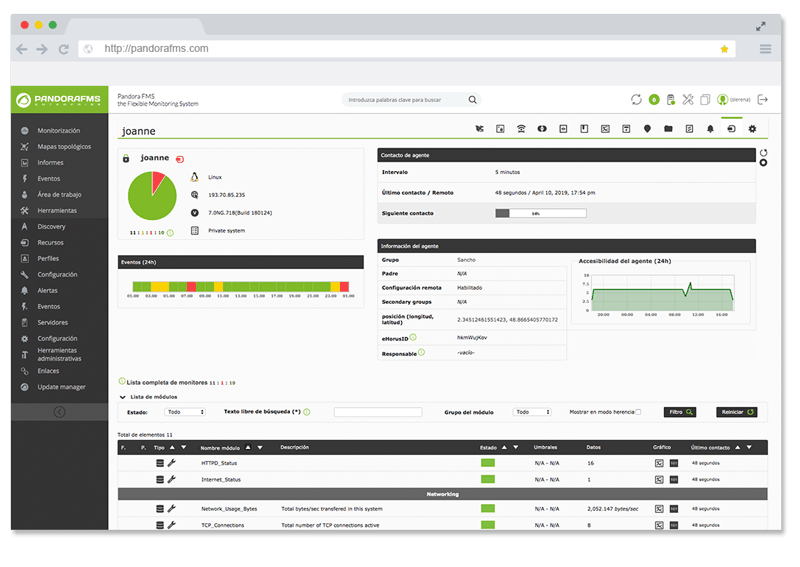

Some time ago, we published a very detailed study on PostgreSQL monitoring. A few days ago, a specialized magazine announced the good news about the launch of a plugin to monitor PostgreSQL10 with Pandora FMS, our monitoring tool. Today we will enrich our knowledge with the new version of PostgreSQL: version 10. Let’s go!

Introduction to PostgreSQL10

PostgreSQL is a powerful database that our other articles have described as one of the best free relational databases, and this is still true today. Those articles, published on this blog and in the Pandora FMS forum, let you broaden your knowledge of PostgreSQL 10 as well as of its earlier versions. They remain valid because new versions maintain compatibility, so software that uses PostgreSQL can be updated to version 10. PostgreSQL is fully open source, under a license similar to the BSD and MIT licenses. Essentially, the license lets us do anything we want with the source code as long as we do not hold the University of California liable for its use.

With this article we want to continue the earlier article mentioned above, where we explained how to query the locks in PostgreSQL through the pg_locks view:

SELECT COUNT(*) FROM pg_locks;

When several users accessed the same record (or several records), a lock was produced to avoid conflicting versions of the data, which makes locks an important metric to monitor. In a different study, on another popular database, we briefly introduced ACID (Atomicity, Consistency, Isolation and Durability): recording new data and running transactions together create locks in a relational database. And yes, this also happens in other storage engines, hence the importance of the new feature in PostgreSQL10!
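As a small complement to the count above, the following sketch lists only the lock requests that are still waiting, which is usually the more interesting number to watch. It uses only columns of the standard pg_locks view; how often to run it is left to each monitoring setup.

```sql
-- Lock requests that have not been granted yet, grouped by lock mode.
SELECT locktype,
       mode,
       count(*) AS waiting
FROM pg_locks
WHERE NOT granted
GROUP BY locktype, mode
ORDER BY waiting DESC;
```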


Locks, locks, locks: the nightmare of programmers

We need to explain why locks get in the way of our database monitoring tasks. Monitoring includes watching over backups: that they run correctly and are stored in a safe place, and database backups are not exempt from locks. In addition, we must follow the basic advice for optimizing database performance, so that everything works in harmony with the monitoring system.

This can be carried out with specialized care (the “Enterprise” version of Pandora FMS offers it, along with user training plans). In addition, network administrators keep replicas of the databases on other servers in different physical locations.

These replicas offer several advantages:

- They may be physically located outside the company or in its branches, always with encrypted communications to pass the data from one side to the other.

- Conceptually, they can be considered “backup copies” that are updated essentially in real time.

- We will be able to perform audit tasks and statistics on the replicas without affecting the performance of the main database.

What is a database replica in PostgreSQL10?

A database replica replicates the data in a very simple way: it copies the files of the master database, bit by bit, byte by byte. PostgreSQL10 stores each element of a database in specific files, and to do so it keeps a binary record (the write-ahead log, or WAL), a kind of log that summarizes the changes made to those files when records are added or modified. Other database engines also replicate this way, since it is a well-known scheme.

A side effect of database replicas is that the slave will not be available while the replicated information is being written. This is not something to worry about; what matters most is what happens in the master database when records are locked or, even worse, when a set of records belongs to a transaction that must be rolled back. These should not be copied to the replica, because this information will not be permanently recorded and will be deleted (only a summary of it will be saved).

Let’s look at this with a simple example: two customers of a bank hold accounts, and customer A wants to transfer money to customer B (in real life this is much more complex; this is only a simplified example). At least two writes must be made in the database: one debiting the amount from customer A and another crediting it to customer B. When both events have been verified, the transaction can be completed and the changes become permanent.

What would happen if customer A made this transfer to customer B and, in the meantime, the automatic payment of his credit card were deducted, leaving no balance for the transfer? Well, the credit made to customer B and the debit made to customer A would not be permanently recorded and would be discarded: this is how ACID works, guaranteeing the integrity of the data and thus complicating the replication of the information.

The replication process does not know anything about records or about users that want to add or modify data. It only knows that the files must be the same on both machines, and if the origin is writing data to any of those files, it must wait until the write finishes so that the file is available to be read and copied.
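The transfer above can be sketched as a single transaction. The accounts table, its columns and the account numbers are hypothetical, made up only for illustration; the point is that both writes become permanent together on COMMIT, or are discarded together on ROLLBACK.

```sql
-- Hypothetical table: accounts(account_id integer PRIMARY KEY, balance numeric).
BEGIN;

-- Debit customer A (account 1) ...
UPDATE accounts SET balance = balance - 100 WHERE account_id = 1;

-- ... and credit customer B (account 2) inside the same transaction.
UPDATE accounts SET balance = balance + 100 WHERE account_id = 2;

-- If both writes are verified, make them permanent:
COMMIT;

-- If customer A turned out to have no balance, we would issue ROLLBACK
-- instead of COMMIT, and neither change would be kept.
```

Until the COMMIT arrives, other sessions do not see either change, which is why a replication scheme based on records only needs to care about committed transactions.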

What is a logical replication of a database in PostgreSQL10?

The approach in PostgreSQL10 is different. Logical replication still consults the binary record, which keeps track of the files modified since the last successful replication, just as the normal replica does. What is new is that it translates these changes into information about the records that are already permanently stored in the database; these records are then read and added to the replica. This way, locked records are ignored because we do not know how they will end up (permanent or discarded), which is a practical and ingenious solution that also gives us additional benefits.

How are logical replications possible in PostgreSQL10?

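Version 10 builds logical replication into PostgreSQL itself, through the publication and subscription commands used later in this article. In addition, we can install a PostgreSQL extension called pglogical, from the software house 2ndQuadrant, which has been adding logical replication features to PostgreSQL since version 9.4.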

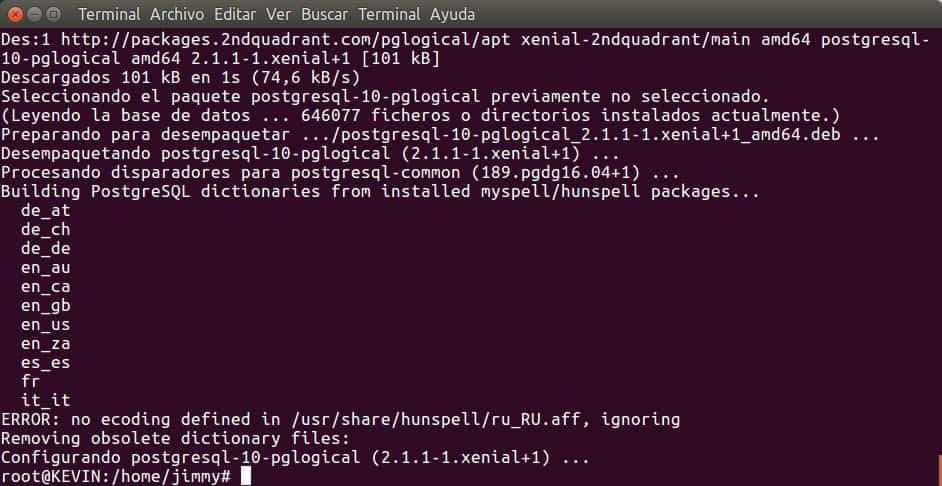

pglogical is available as free software under the same licensing conditions as PostgreSQL10. To install it, we follow the steps below, explained in a practical way for Debian GNU/Linux and its derivatives:

- First, we must add the repository from the PostgreSQL website to our computer; Ubuntu ships version 9.5 and we need version 10.

- We must install the repository key, which guarantees that whatever we download is legitimate according to what is published on the PostgreSQL page (all the details in this link).

- We repeat the process for pglogical: we add the repository from the 2ndQuadrant website in order to get the latest version available.

- We must also add the respective key of the 2ndQuadrant repository (all the details in this link).

- Once we have configured the repositories, we run apt-get update and then apt-get upgrade.

- Finally, we install PostgreSQL10 with apt-get install postgresql-10 pgadmin3 and pglogical with apt-get install postgresql-10-pglogical.

- We tested this process on a 64-bit Ubuntu 16.04 machine (in fact, the database offered during the installation of Ubuntu Server is PostgreSQL), and the only problem we had was with the Russian-language dictionary of the Hunspell spell checker.

If we want to try the latest development version of pglogical, we can get the source code straight from its repository on GitHub. The 2ndQuadrant website states that the version of pglogical must always match the version of PostgreSQL installed, and that different machines can work with different versions (and replicate between them) as long as this rule is respected. How to arrange this is up to each database administrator.

Extending the utility of logical replication in PostgreSQL10

Logical replication overcomes certain technical limitations of normal replication while still fulfilling our goal of data backup, but we can go further: we already know that logical replication is NOT a faithful byte-by-byte copy of the master database. The information copied from the master therefore arrives at the replica as if it were an “independent” database, which writes it to its own files according to its hardware, operating system and so on.

A record X copied to the replica is identical, byte for byte, to its original, but the way it is written on the hard disk will differ between the two machines. Finally, we must point out that with a logical copy we can extract statistical or audit information without having to wait for the replication to be written (for example, every five minutes or every gigabyte, whichever comes first).

Extracting statistical or audit information from a replica database implies writing queries (or even modifying indexes) which, of course, do not exist in the master database but which we need in order to obtain the information. Once these queries are written (even temporarily), the database is no longer a faithful and exact copy of the master, which causes problems when replicating files one by one.

With logical replication we do not have that problem: all the original records are guaranteed to be copied to the replica, and because the machine is dedicated to replication, those records are meant to be read and consulted rather than modified or deleted.

Extending the utility of logical replication leads us to practical examples. The Credit Card Department of a bank, for instance, must keep track of its clients in real time without impacting the main database with its work: we can install a logical replication server that copies only the data of the clients who have credit cards. These data could be personal data, bank accounts and, of course, credit card data. It is not necessary to replicate all the customers of the bank, only a part of them. Likewise, the Credit Card Department can even create additional tables to analyze bank movements, for example to approve an increase in a customer's credit limit, along with other things that mean income for the company.

Configuring PostgreSQL10 for logical replications

When PostgreSQL10 is installed, write-ahead logging (WAL) comes configured by default. This configuration allows recovery from unexpected shutdowns or failures that have prevented the data from being recorded on the hard disk.

In the case of replications, logical replication is a new feature that many people do not yet know about because it is disabled by default. First, we need exclusive access to the database (connected with the proper credentials), and second, we need to change the value of the wal_level parameter to ‘logical’. To find the location of the postgresql.conf file, we run the command SHOW config_file; in a psql console, then edit the file so that it contains the line wal_level = logical, and save it. Then we need to restart the service, which is not a problem since we are the only ones connected.

This change tells PostgreSQL10 to add to the binary record the information needed to translate it into record-level changes, hence the need to stop the database momentarily and restart it. PostgreSQL10 can host scripts written in Python, so how this is automated will depend on each database administrator, working together with the network administrator(s) to take advantage of the night or early morning and do the work without impacting the company's normal daily work.
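The steps above can be sketched from a psql console as follows. ALTER SYSTEM is shown as an alternative to editing postgresql.conf by hand; it is a standard PostgreSQL command, but choosing it over a manual edit is our assumption, not something the procedure requires.

```sql
-- Where is the configuration file, and what is the current level?
SHOW config_file;
SHOW wal_level;

-- Alternative to editing postgresql.conf by hand (requires superuser).
-- The value is written to postgresql.auto.conf and only takes effect
-- after the service is restarted.
ALTER SYSTEM SET wal_level = 'logical';

-- After the restart, check the value again; it should now be logical.
SHOW wal_level;
```

Either way, the restart is unavoidable: wal_level cannot be changed on a running server.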

Creating publications in the master database

PostgreSQL10 is configured to work with publications that we must define in the master database. Connected with the appropriate credentials from a terminal window, we will create a publication for the Credit Card Department of our imaginary bank. It will be something like this:

CREATE PUBLICATION dpto_tc FOR TABLE clients, bank_accounts, credit_card;

This will create a publication called dpto_tc, for logical replication, containing the tables clients, bank_accounts and credit_card.

If we need to add all the tables to a single publication, we should write the following:

CREATE PUBLICATION all_the_tables FOR ALL TABLES;

We must emphasize that, by default, the existing data of the tables in our publications will be copied to the logical replica in its entirety; however, there is an option, set when the subscription is created, to copy only the data added from then on.

Preparing the logical replication

Once we have defined the publications, we come to the work that may demand the most thought and decision from us: we must create, on the replica, the data structure of each and every table included in each publication, and if we use the clause “FOR ALL TABLES” in at least one publication, we must make an identical copy of the entire structure of the database.

That is why we recommend working ahead and always creating a complete copy of the entire database structure, since logical replication in PostgreSQL10 will never do this work for us: when replicating, it will only return an error reporting that the table was not found (which leads us to the monitoring of logical replication, so Pandora FMS, get ready to monitor!).
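As a minimal sketch, these are the kind of statements we would run on the replica before subscribing. The column names and types are hypothetical, since the example bank's real structure is not given here; what matters is that the replicated columns exist under the same names as in the master.

```sql
-- Run on the replica before creating the subscription.
-- Columns are hypothetical and must mirror the master's definitions.
CREATE TABLE clients (
    client_id  integer PRIMARY KEY,
    full_name  text NOT NULL
);

CREATE TABLE bank_accounts (
    account_id integer PRIMARY KEY,
    client_id  integer NOT NULL,
    balance    numeric(14,2) NOT NULL
);

CREATE TABLE credit_card (
    card_id      integer PRIMARY KEY,
    client_id    integer NOT NULL,
    credit_limit numeric(14,2) NOT NULL
);
```

A primary key on each table lets PostgreSQL identify which row to change when an UPDATE or DELETE is replicated.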

Creating subscriptions

Once the data structure is ready to receive the logical replica, we must create a subscription that refers to the publications by the names we gave them. Once we are properly connected to the machine that will hold the logical replica, the syntax is the following (we use the same bank example):

CREATE SUBSCRIPTION dpto_tc CONNECTION 'host=bd_maestra dbname=mi_credenciales ...' PUBLICATION dpto_tc;

To make it easier, the subscription has the same name as the publication. As for the connection data, we must include the values that match our network structure and configuration: timeout of the connection attempt, port and so on. The connection string can also be written as a URI that follows the RFC 3986 standard.
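As mentioned when creating the publications, the initial copy of existing data can be skipped. In PostgreSQL10 this is controlled by the copy_data option of CREATE SUBSCRIPTION. The sketch below reuses the names of the bank example; the connection values are placeholders, and a real string will need whatever additional parameters our network requires.

```sql
-- Run on the replica. Connection values are placeholders.
CREATE SUBSCRIPTION dpto_tc
    CONNECTION 'host=bd_maestra dbname=mi_credenciales'
    PUBLICATION dpto_tc
    WITH (copy_data = false);  -- replicate only changes made from now on
```

Leaving out the WITH clause keeps the default behavior, in which the existing rows of each published table are copied first.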

Modifying the publications

With the ALTER PUBLICATION command in the master database we can add new tables, remove them, change the owner or even rename the publication, among other options.
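The following statements illustrate those options one by one. They are independent examples rather than a script to run in sequence, and the table card_movements and the role replication_admin are hypothetical names.

```sql
-- Add a table to the publication.
ALTER PUBLICATION dpto_tc ADD TABLE card_movements;

-- Stop publishing a table.
ALTER PUBLICATION dpto_tc DROP TABLE card_movements;

-- Publish only inserts and updates, not deletes.
ALTER PUBLICATION dpto_tc SET (publish = 'insert, update');

-- Hand the publication over to another role.
ALTER PUBLICATION dpto_tc OWNER TO replication_admin;

-- Rename the publication.
ALTER PUBLICATION dpto_tc RENAME TO credit_card_dept;
```

If a publication is renamed, the subscriptions that refer to it still use the old name, so they would have to be pointed at the new one with ALTER SUBSCRIPTION ... SET PUBLICATION.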

Keeping subscriptions up to date

We can automate the maintenance of subscriptions in the slave database with the following command:

ALTER SUBSCRIPTION dpto_tc REFRESH PUBLICATION;

This picks up the tables that we have added to the publication, which is why we talked about copying the complete structure of all the tables in the database. It is worth dwelling on this: if we create a new table in the origin and add it to the publication, we must also create the same table structure in the destination and then refresh the subscription.
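The whole sequence could look like the sketch below. The table card_movements and its columns are hypothetical; the order of the steps is what matters.

```sql
-- 1. On the master: create the new table and add it to the publication.
CREATE TABLE card_movements (
    movement_id integer PRIMARY KEY,
    card_id     integer NOT NULL,
    amount      numeric(14,2) NOT NULL
);
ALTER PUBLICATION dpto_tc ADD TABLE card_movements;

-- 2. On the replica: create the same structure.
CREATE TABLE card_movements (
    movement_id integer PRIMARY KEY,
    card_id     integer NOT NULL,
    amount      numeric(14,2) NOT NULL
);

-- 3. On the replica: make the subscription start replicating the new table.
ALTER SUBSCRIPTION dpto_tc REFRESH PUBLICATION;
```

If step 2 is skipped, the refresh in step 3 fails because the table does not exist on the replica.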

Monitoring logical replication in PostgreSQL10

Just as we can extract the state of normal replication with pg_stat_replication, for logical replicas we use pg_stat_subscription as follows:

SELECT * FROM pg_stat_subscription;

In the master database, each subscription also appears as a row in pg_stat_replication, from which we can select specific fields:

- application_name: the name of the subscription

- backend_start: specific date and time of the start of logical replication.

- state: if it is working we will see “streaming” or transmitting.

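- sent_lsn (called sent_location before version 10): hexadecimal value for binary audit purposes.

- write_lsn (formerly write_location): same as the previous one.

- flush_lsn (formerly flush_location): same as the previous one.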

- sync_state : it returns asynchronous value, which is executed independently or in the background.
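A minimal sketch of both sides of the monitoring follows. It relies on the column and function names of PostgreSQL 10 (earlier versions used *_location columns and differently named functions); the bytes_behind alias is our own.

```sql
-- On the replica: one row per subscription worker.
SELECT subname,
       pid,
       received_lsn,
       latest_end_lsn,
       last_msg_receipt_time
FROM pg_stat_subscription;

-- On the master: each subscription appears as a replication connection
-- whose application_name is the name of the subscription.
SELECT application_name,
       backend_start,
       state,
       sent_lsn,
       write_lsn,
       flush_lsn,
       sync_state,
       pg_wal_lsn_diff(pg_current_wal_lsn(), flush_lsn) AS bytes_behind
FROM pg_stat_replication;
```

The bytes_behind value gives a rough idea of how far the replica lags behind the master, which makes it a natural candidate for an alert threshold.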

To finish this article: programmers will now need to create a script that connects to both databases in read-only mode and compares them record by record, to see whether the information matches in origin and destination. This process could be run at dawn or on weekends, and the results should be stored in a third database or in log files, to be monitored with Pandora FMS so that we can configure the respective alerts appropriately.
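One possible starting point for such a script, offered only as a sketch, is to run the same summary query on both databases and compare the two result rows instead of transferring every record. It assumes that the clients table has a client_id key (a hypothetical column) and that both servers render the rows as text in the same way.

```sql
-- Run on the master and on the replica, then compare the two rows.
SELECT count(*) AS row_count,
       md5(string_agg(c::text, '|' ORDER BY c.client_id)) AS checksum
FROM clients AS c;
```

If row_count or checksum differs between the two sides, the table needs a closer record-by-record look. For large tables the query would have to be run over ranges of client_id, since string_agg builds the whole text in memory.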

Conclusion

We have barely scratched the surface of logical replication, since there are still many other features, offered by pglogical, such as:

- Row-level filtering (records): much like a CHECK constraint, we can replicate only the rows that meet a certain rule.

- Column-level filtering (fields): if a table contains many fields that are not relevant to the Credit Card Department (as in our practical example), we replicate only the ones we want.

- pglogical has a parameter for delaying replication by the period of time we need: we might want replication to start at night, when the employees are gone. Like the filters above, this feature is not “embedded” in PostgreSQL10.

We think that these concepts will become quite common in other data management environments in the future. If you have any question or comment, don’t forget to write it down here! Thank you!

About Pandora FMS

Pandora FMS is a flexible monitoring system, capable of monitoring devices, infrastructures, applications, services and business processes.

Of course, one of the things that Pandora FMS can control is the hard disks of your computers.

Would you like to know more about what Pandora FMS can offer you? Discover it by entering here: https://pandorafms.com

If you have more than 100 devices to monitor, you can contact us through the following form: https://pandorafms.com/en/contact/

Also, remember that if your monitoring needs are more limited you have at your disposal the OpenSource version of Pandora FMS. Find more information here: https://pandorafms.org

Do not hesitate to send us your queries. The Pandora FMS team will be happy to assist you!

Programmer since 1993 at KS7000.net.ve (since 2014 free software solutions for commercial pharmacies in Venezuela). He writes regularly for Pandora FMS and offers advice on the forum. He is also an enthusiastic contributor to Wikipedia and Wikidata. He crushes iron in gyms and when he can, he also exercises cycling. Science fiction fan.