Distributed systems allow projects to be implemented more efficiently and at a lower cost, but they are complex to operate because several nodes, located at different network sites, work together to process one or more tasks with greater performance. To understand this complexity, let’s first look at the fundamentals.

- Understanding the Complexity of Distributed Systems

- The Fundamentals of Distributed Systems

- Collaboration between Nodes: The Symphony of Distribution

- Designing for Scalability: Key Principles

- The Crucial Role of Stability Monitoring

- Business Resilience: Proactive Monitoring

- Optimizing Performance: Effective Monitoring Strategies

- Monitoring with Pandora FMS in Distributed Environments

- Conclusion

The Fundamentals of Distributed Systems

What are distributed systems?

A distributed system is a computing environment that spans multiple devices, which coordinate their efforts to complete a job much more efficiently than a single device could. This offers many advantages over traditional computing environments, such as greater scalability, improved reliability, and lower risk, since there is no single point vulnerable to failure or cyberattack.

In modern architectures, distributed systems are increasingly relevant because they spread the workload across several nodes (computers, servers, Edge Computing devices, etc.) so that tasks run faster and more reliably. This matters especially now that users demand continuous availability, speed and high performance, and infrastructures extend beyond the organization, not only to other geographies but also to the Internet of Things, Edge Computing, etc.

Types and Examples of Distributed Systems:

There are several models and architectures of distributed systems:

- Client-server systems: They are the most traditional and simplest type of distributed system, in which several networked computers interact with a central server to store data, process it or serve any other common purpose.

- Mobile networks: They are an advanced type of distributed system that shares workloads between terminals, switching systems, and Internet-based devices.

- Peer-to-peer networks: They distribute workloads among hundreds or thousands of computers running the same software.

- Cloud-based virtual server instances: They are the most common form of distributed system in enterprises today: workloads are transferred to dozens of cloud-based virtual server instances that are created as needed and terminated when the task is completed.

Examples of distributed systems include a computer network within a single organization, on-premises or cloud storage systems, and database systems distributed across a business consortium. Several systems can also interact with each other, not only within the organization but also with other companies, as in the following example:

From home, a customer can buy a product, which triggers a process on the distributor’s server. This server in turn contacts the supplier’s server to supply the product and connects to the bank’s network to carry out the financial transaction (first to the bank’s regional mainframe, then to the bank’s mainframe). Or, in-store, customers pay at the supermarket checkout terminal, which in turn connects to the business server and the bank network to record and confirm the financial transaction. As can be seen, several nodes (terminals, computers, devices, etc.) connect and interact. To understand how this coordination is possible in distributed systems, let’s look at how nodes collaborate with each other.

Collaboration between Nodes: The Symphony of Distribution

- How nodes interact in distributed systems: Distributed systems use specific software to communicate and share resources between different machines or devices, and to orchestrate activities or tasks. To do this, protocols and algorithms coordinate actions and data exchange. Following the example above, the computer or the store checkout terminal is the client, which requests a service from a server (the business server). That server in turn requests a service from the bank’s network, which records the payment and returns the result, so that the client (the checkout terminal) is told that the payment has been successful.

- The most common challenges are coordinating the tasks of interconnected nodes, ensuring the consistency of the data exchanged between nodes, and managing the security and privacy of nodes and of data traveling through a distributed environment.

- To maintain consistency across distributed systems, asynchronous communication or messaging services, distributed file systems for shared storage, and node and/or cluster management platforms are required to manage resources.
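
The checkout example above can be sketched as a chain of request/response calls. This is only an illustrative, in-process sketch: the class names (`BankNetwork`, `BusinessServer`, `CheckoutTerminal`) and their methods are hypothetical, and real nodes would talk over a network protocol rather than through direct method calls.

```python
from dataclasses import dataclass


@dataclass
class PaymentResult:
    approved: bool
    message: str


class BankNetwork:
    """Hypothetical bank node: records the payment and confirms it."""

    def __init__(self):
        self.ledger = []

    def authorize(self, account: str, amount: float) -> PaymentResult:
        if amount <= 0:
            return PaymentResult(False, "invalid amount")
        self.ledger.append((account, amount))
        return PaymentResult(True, "payment recorded")


class BusinessServer:
    """Hypothetical store server: a server for the terminal, a client of the bank."""

    def __init__(self, bank: BankNetwork):
        self.bank = bank

    def pay(self, account: str, amount: float) -> PaymentResult:
        return self.bank.authorize(account, amount)


class CheckoutTerminal:
    """Hypothetical checkout terminal: the client that starts the request."""

    def __init__(self, server: BusinessServer):
        self.server = server

    def checkout(self, account: str, amount: float) -> str:
        result = self.server.pay(account, amount)
        prefix = "OK: " if result.approved else "FAILED: "
        return prefix + result.message


bank = BankNetwork()
terminal = CheckoutTerminal(BusinessServer(bank))
print(terminal.checkout("customer-1", 25.0))
```

Note that the business server plays both roles: it is a server for the terminal and a client of the bank, which is typical of nodes in the middle of a distributed chain.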

Designing for Scalability: Key Principles

- The importance of scalability in distributed environments: Scalability is the ability to grow as the workload size increases, which is achieved by adding additional processing units or nodes to the network as needed.

- Design Principles to Encourage Scalability: scalability has become vital to support increased user demand for agility and efficiency, in addition to the growing volume of data. Architectural design, hardware and software upgrades should be combined to ensure performance and reliability, based on:

- Horizontal scalability: adding more nodes (servers) to the existing resource pool, allowing the system to handle higher workloads by distributing the load across multiple servers.

- Load balancing: to achieve technical scalability, incoming requests are distributed evenly across multiple servers, so that no server is overwhelmed.

- Automated scaling: using algorithms and tools to dynamically and automatically adjust resources based on demand. This helps maintain performance during peak traffic and reduce costs during periods of low demand. Cloud platforms usually offer auto-scaling features.

- Caching: storing frequently accessed data or the results of previous responses improves responsiveness and reduces network latency, since repeated requests to the database are avoided.

- Geographic scalability: adding new nodes in a physical space without affecting communication time between nodes, ensuring distributed systems can handle global traffic efficiently.

- Administrative scalability: managing new nodes added to the system while minimizing administrative overhead.
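
Three of the principles above (load balancing, automated scaling and caching) can be illustrated with a few lines of Python. This is a minimal sketch under stated assumptions: the node names, the CPU thresholds in `desired_nodes` and the `product_details` function are all hypothetical, and a production system would rely on a real load balancer and the auto-scaling features of its platform.

```python
from functools import lru_cache
from itertools import cycle


class RoundRobinBalancer:
    """Load balancing: hand each incoming request to the next node in turn."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self._turn = cycle(self.nodes)

    def route(self, request: str) -> str:
        return next(self._turn)


def desired_nodes(current: int, avg_cpu: float,
                  low: float = 30.0, high: float = 75.0, minimum: int = 1) -> int:
    """Automated scaling (hypothetical rule): add a node when busy, drop one when idle."""
    if avg_cpu > high:
        return current + 1
    if avg_cpu < low:
        return max(minimum, current - 1)
    return current


@lru_cache(maxsize=128)
def product_details(product_id: int) -> str:
    """Caching: stand-in for a slow database query; repeated calls hit the cache."""
    return f"product-{product_id}"


balancer = RoundRobinBalancer(["node-a", "node-b", "node-c"])
for i in range(5):
    print(f"req-{i} ->", balancer.route(f"req-{i}"))

print("nodes wanted at 90% CPU:", desired_nodes(current=3, avg_cpu=90.0))

product_details(7)
product_details(7)  # second call is answered from the cache
print("cache hits:", product_details.cache_info().hits)
```

Round-robin is the simplest balancing policy; it was chosen here only because it makes the even distribution of requests easy to see.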

Distributed tracing is a method for monitoring applications, typically built on a microservices architecture, that are routinely deployed in distributed systems. Tracing follows a request step by step, helping developers discover bugs, bottlenecks, latency, or other issues with the application. Monitoring matters in distributed systems because multiple applications and processes can be tracked simultaneously across many concurrent computing nodes and environments (on-premises, in the cloud, or hybrid), which have become commonplace in today’s system architectures and which also demand stability and reliability in their services.
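
The idea of following a request step by step can be illustrated by tagging every step with a shared trace identifier and recording how long it took. The sketch below is hand-rolled for illustration only: the `span` helper, the `SPANS` list and the service names are hypothetical, and a real deployment would use a tracing library and send these records to a tracing backend.

```python
import time
import uuid
from contextlib import contextmanager

SPANS = []  # in a real system these records would be sent to a tracing backend


@contextmanager
def span(trace_id: str, service: str, operation: str):
    """Record the duration of one step of a request, tagged with its trace ID."""
    start = time.perf_counter()
    try:
        yield
    finally:
        SPANS.append({
            "trace_id": trace_id,
            "service": service,
            "operation": operation,
            "duration_ms": (time.perf_counter() - start) * 1000,
        })


def handle_order(trace_id: str) -> None:
    """Hypothetical request that crosses three services."""
    with span(trace_id, "store", "handle_order"):
        with span(trace_id, "inventory", "reserve_item"):
            time.sleep(0.01)  # simulated work
        with span(trace_id, "bank", "charge_card"):
            time.sleep(0.02)  # simulated work


trace_id = uuid.uuid4().hex
handle_order(trace_id)
for record in SPANS:
    print(f'{record["service"]:>9} {record["operation"]:<13} {record["duration_ms"]:.1f} ms')
```

Because every record carries the same trace ID, the steps of one request can be reassembled even when they ran on different nodes, which is what makes bottlenecks visible.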

The Crucial Role of Stability Monitoring

To optimize IT system administration and deliver IT services efficiently, appropriate system monitoring is indispensable: the data in monitoring systems and logs makes it possible to detect potential problems and to analyze incidents, so that teams can be proactive rather than merely reactive.

Essential Tools and Best Practices

An essential tool is a monitoring system focused on processes, memory, storage and network connections, with the objectives of:

- Making the most of a company’s hardware resources.

- Reporting potential issues.

- Preventing incidents and detecting problems.

- Reducing costs and system implementation times.

- Improving user experience and customer service satisfaction.

In addition to the monitoring system, best practices should be implemented, including an incident resolution protocol, which makes a big difference between truly solving problems and simply reacting to them. These practices are based on:

- Prediction and prevention. The right monitoring tools not only enable timely action but also analysis to prevent issues impacting IT services.

- Customize alerts and reports so that you receive only those that are really needed and that give the best view of the status and performance of the network and equipment.

- Rely on automation, taking advantage of tools that have some predefined rules.

- Document changes (and their follow-up) in system monitoring tools, which makes them easier to interpret and audit (who made changes and when).

Finally, it is recommended to choose the right tool according to the IT environment and expertise of the organization, critical business processes and their geographical dispersion.

Business Resilience: Proactive Monitoring

Real-time visibility into the state of the company’s critical IT systems and assets makes it possible to detect the source of incidents. Resilience through proactive monitoring, however, comes from action protocols that make clear what to do and how to do it, so that problems are solved effectively. It also relies on data and alerts that support proactive action, for example on disks filling up, memory usage limits or possible vulnerabilities in disk access, before these become a problem, which also saves IT staff the cost and time of resolving issues. Let’s look at some case studies that highlight quick problem solving.

- Cajasol case: The company needed a system for a very large production environment in which different architectures and applications coexisted, and which had to be kept under control in a transparent and proactive way.

- Fripozo case: Failures had to be detected in time and corrected as soon as possible, since they degraded the service that the systems department provided to the rest of the company.

Optimizing Performance: Effective Monitoring Strategies

Permanent system monitoring makes it possible to manage performance challenges, since it identifies problems before they turn into a service suspension or a total failure that prevents business continuity. It is based on:

- Collecting data on system performance and health.

- Displaying metrics to detect anomalies and performance patterns in computers, networks and applications.

- Generating custom alerts, which allow action to be taken in a timely manner.

- Integrating with other management and automation platforms and tools.
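
The first three steps above (collect, detect, alert) can be sketched in a few lines. This is an assumption-laden illustration, not how any particular monitoring product works: the function names, the window size and the three-standard-deviation threshold are hypothetical choices, and the latency samples are made-up values.

```python
from statistics import mean, stdev


def find_anomalies(samples, threshold=3.0, window=5):
    """Flag samples that deviate strongly from the preceding window of values."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip perfectly flat windows, where the deviation is undefined.
        if sigma > 0 and abs(samples[i] - mu) > threshold * sigma:
            anomalies.append((i, samples[i]))
    return anomalies


def build_alerts(metric, anomalies):
    """Turn detected anomalies into human-readable alert messages."""
    return [f"ALERT {metric}: value {value} at sample {index}"
            for index, value in anomalies]


# Made-up latency measurements in milliseconds, with one obvious spike.
latency_ms = [20, 22, 21, 19, 20, 21, 95, 22, 20]
for alert in build_alerts("latency_ms", find_anomalies(latency_ms)):
    print(alert)
```

Comparing each value against its recent history, rather than against a fixed limit, is one simple way to make alerts adapt to the normal behavior of each system.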

Monitoring with Pandora FMS in Distributed Environments

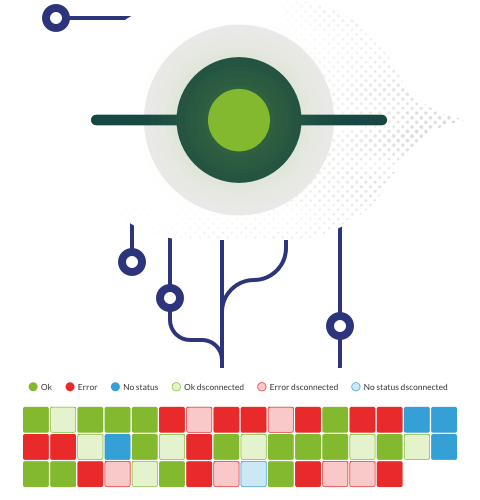

Monitoring with agents

Agent monitoring is one of the most effective ways to get detailed information about distributed systems. Lightweight software is installed on each operating system and continuously collects data from the system on which it runs. Pandora FMS uses agents to access deeper information than network checks, allowing applications and services to be monitored “from the inside” on a server. Information commonly collected through agent monitoring includes:

- CPU and memory usage.

- Disk capacity.

- Running processes.

- Active services.

- Internal application monitoring.
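
To give a feel for what a lightweight agent collects, here is a minimal sketch using only the Python standard library. It is not the Pandora FMS agent and does not use its data format: the function names, the dictionary keys and the 90% disk limit are assumptions made for illustration.

```python
import os
import shutil
import socket
import time


def collect_snapshot(path: str = "/") -> dict:
    """Gather a few local metrics, as a monitoring agent would on each run."""
    usage = shutil.disk_usage(path)
    used_percent = round(usage.used / usage.total * 100, 1) if usage.total else 0.0
    snapshot = {
        "host": socket.gethostname(),
        "timestamp": int(time.time()),
        "disk_used_percent": used_percent,
        "cpu_count": os.cpu_count(),
    }
    # The load average is only available on Unix-like systems.
    if hasattr(os, "getloadavg"):
        try:
            snapshot["load_1min"] = os.getloadavg()[0]
        except OSError:
            pass
    return snapshot


def check_thresholds(snapshot: dict, disk_limit: float = 90.0) -> list:
    """Return warnings for values above their (hypothetical) limits."""
    warnings = []
    disk = snapshot.get("disk_used_percent", 0.0)
    if disk > disk_limit:
        warnings.append(f"disk usage {disk}% exceeds {disk_limit}%")
    return warnings


snapshot = collect_snapshot()
print(snapshot)
print(check_thresholds(snapshot) or "all values within limits")
```

A real agent would run this collection on a schedule and send each snapshot to the monitoring server instead of printing it.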

Remote Checks with Agents – Broker Mode

In scenarios where a remote machine needs to be monitored and cannot be reached directly from Pandora FMS central server, the broker mode of agents installed on local systems is used. The broker agent runs remote checks on external systems and sends the information to the central server, acting as an intermediary.

Remote Network Monitoring with Agent Proxy – Proxy Mode

When you wish to monitor an entire subnet and Pandora FMS central server cannot reach it directly, the proxy mode is used. This mode allows agents on remote systems to forward their XML data to a proxy agent, which then transmits it to the central server. It is useful when only one machine can communicate with the central server.
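
The forwarding pattern behind proxy mode can be sketched generically. The code below does not reproduce Pandora FMS internals: `CentralServer`, `ProxyAgent` and their methods are hypothetical names, and the payload strings are placeholders rather than the real agent data format.

```python
class CentralServer:
    """Hypothetical central server: stores whatever the proxy delivers."""

    def __init__(self):
        self.received = []

    def ingest(self, payload: str, via: str) -> None:
        self.received.append((via, payload))


class ProxyAgent:
    """Hypothetical proxy: the only machine allowed to reach the central server."""

    def __init__(self, name: str, server: CentralServer):
        self.name = name
        self.server = server
        self.queue = []

    def accept(self, payload: str) -> None:
        """Called by agents in the isolated subnet to hand over their data."""
        self.queue.append(payload)

    def flush(self) -> int:
        """Forward everything queued so far and report how many items were sent."""
        sent = 0
        while self.queue:
            self.server.ingest(self.queue.pop(0), via=self.name)
            sent += 1
        return sent


server = CentralServer()
proxy = ProxyAgent("proxy-1", server)
for host in ("web-1", "web-2", "db-1"):
    proxy.accept(f"placeholder data from {host}")  # not a real data format
print("forwarded:", proxy.flush())
print(server.received)
```

Queuing on the proxy keeps the subnet’s agents independent of the link to the central server: they only need to reach one machine inside their own network.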

Multi-Server Distributed Monitoring

In situations where a large number of devices need to be monitored and a single server is not enough, multiple Pandora FMS servers can be installed. All these servers are connected to the same database, making it possible to distribute the load and handle different subnets independently.

Delegate Distributed Monitoring – Export Server

When providing monitoring services to multiple clients, each with their own independent Pandora FMS installation, the Export Server feature can be used. This export server allows you to have a consolidated view of the monitoring of all customers from a central Pandora FMS installation, with the ability to set custom alerts and thresholds.

Remote Network Monitoring with Local and Network Checks – Satellite Server

When an external DMZ network needs to be monitored and both remote checks and agent monitoring are required, the Satellite Server is used. This Satellite server is installed in the DMZ and performs remote checks, receives data from agents and forwards it to Pandora FMS central server. It is particularly useful when the central server cannot open direct connections to the internal network database.

Secure Isolated Network Monitoring – Sync Server

In environments where security prevents opening communications from certain locations, such as datacenters in different countries, the Sync Server can be used. This component, added in version 7 “Next Generation” of Pandora FMS, allows the central server to initiate communications to isolated environments, where a Satellite server and several agents are installed for monitoring.

Distributed monitoring with Pandora FMS offers flexible and efficient solutions to adapt to different network topologies in distributed environments.

Conclusion

Adopting best practices for deploying distributed systems is critical to building organizations’ resilience, since IT infrastructures and services are becoming more complex to manage and require adaptation and proactivity to meet organizations’ needs for performance, scalability, security, and cost optimization. IT strategists must rely on more robust, informed and reliable systems monitoring, especially because, today and in the future, systems will be increasingly decentralized (no longer all in one or several data centers, but also in different clouds) and will extend beyond the organization’s walls, with data centers closer to customers or end users and more edge computing. For example, according to the Global Interconnection Index 2023 (GXI) from Equinix, organizations are interconnecting edge infrastructure 20% faster than core infrastructure. The same index indicates that 30% of digital infrastructure has been moved to Edge Computing. Another trend is that companies increasingly value data about their operations, processes and customer interactions, and seek better interconnection with their ecosystem, directly with their suppliers or partners, to offer digital services. On the user and customer experience side, there will always be a need for IT services with immediate, stable and reliable responses 24 hours a day, 365 days a year.

If you found this article interesting, you can also read: Network topology and distributed monitoring

Market analyst and writer with more than 30 years in the IT market, working in demand generation, positioning and end-customer relations, as well as corporate communication and industry analysis.
