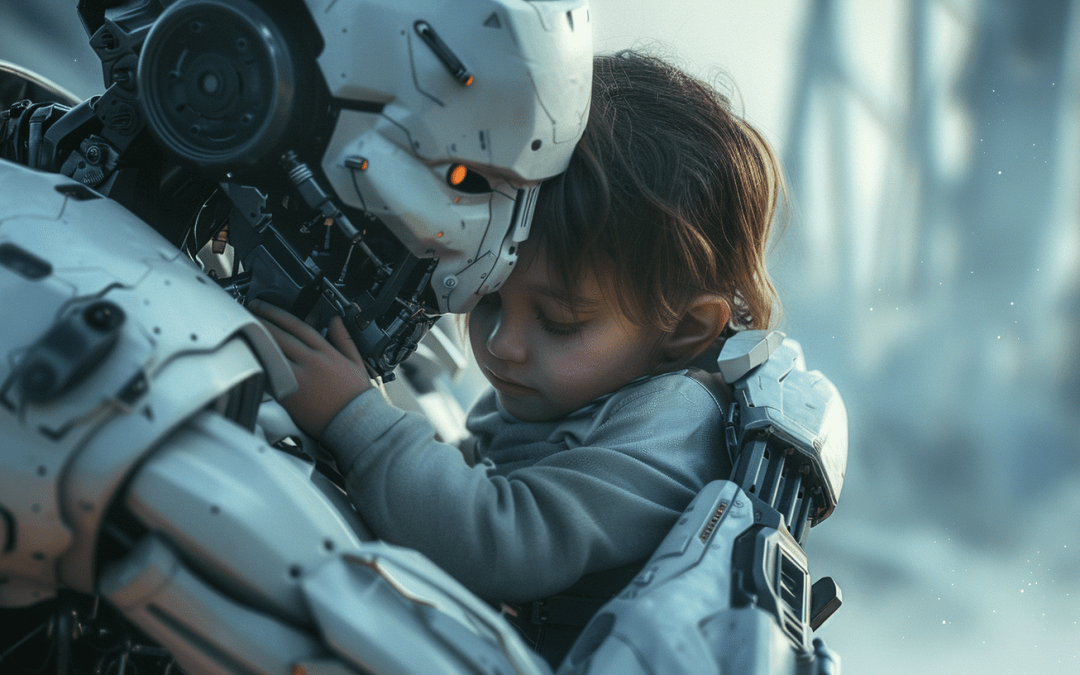

What are the laws of robotics?

1 ) A robot shall not harm a human being or, by inaction, allow a human being to be harmed.

2 ) A robot must comply with the orders given by human beings, except for those that conflict with the first law.

3 ) A robot must protect its own existence to the extent that this protection does not conflict with the first or second law.

What are they, and will they work in the real world?

The 3 laws of robotics are among the best-known ideas in the history of science fiction, and they are often taken as a reference point when speculating on how we could create intelligent beings that do not end up harming or even destroying us.

Formulated in 1942 in the short story “Runaround”, by the famous writer Isaac Asimov, the 3 laws of robotics have become part of the collective imagination, to the point that many people think a sufficiently precise formulation of these laws could protect us from a hypothetical “robot rebellion”.

However, things are not as simple as they seem. In this article we will learn what the laws of robotics are and why they would not work if they had to be applied in the real world.

These precepts appeared not only in “Runaround”, but were repeatedly alluded to in other stories by Asimov, and even in many later works by other authors. According to Asimov himself, the purpose of the 3 laws in fiction was to prevent a possible rebellion of the robots. If a robot tried to violate any of the laws, its positronic brain would be damaged and it would “die”.

However, if there is one thing we know, it is that reality is very complex and cannot be reduced to a few simple statements. Asimov was well aware of this. In many of his stories he posed situations in which the 3 laws came into conflict with one another.

This was one of the reasons why, in the 1985 novel “Robots and Empire”, Asimov added a 4th law, called the Zeroth Law of robotics:

0 ) A robot shall not harm humanity or, by inaction, allow humanity to be harmed.

This Zeroth Law was intended to take precedence over the other three laws of robotics, in order to resolve possible conflicts between them. However, it would also run into serious obstacles “in practice”.

Why wouldn’t they work in real life?

Let’s imagine that, at some point, we manage to develop robots endowed with intelligence, and that we use Asimov’s 4 laws of robotics to control their behavior. What should we expect?

Most likely, a resounding failure.

The problem is not that Asimov lacked skill in formulating them (quite the contrary!), but that the laws were a product of their time and, moreover, were intended to control something that is possibly uncontrollable. Let us explain this a little better.

The laws of robotics, especially the first 3, were created at a time when computer science had barely begun its journey and Artificial Intelligence was a concept confined purely to science fiction.

Asimov’s approach was therefore to predetermine the robots’ actions. All their behavior would be laid down in advance through exhaustive programming that tried to control every last detail.
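To make the idea of predetermined behavior concrete, here is a minimal Python sketch of the 3 laws as a fixed priority ordering over candidate actions. It is only an illustration: the `Action` class, its boolean flags, and the example actions are all hypothetical, and the sketch assumes that someone has already labelled every consequence of every action by hand, which is exactly the part that becomes unmanageable in the real world.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action with hand-labelled consequences (all hypothetical)."""
    name: str
    harms_human: bool = False      # would violate the First Law
    disobeys_order: bool = False   # would violate the Second Law
    endangers_robot: bool = False  # would violate the Third Law


def violation_profile(action):
    """Violations ordered by law priority: First, Second, Third."""
    return (action.harms_human, action.disobeys_order, action.endangers_robot)


def choose_action(candidates):
    """Pick the action whose violations are least severe.

    Tuples compare lexicographically and False < True, so a First Law
    violation always outweighs any combination of lower-law violations.
    """
    return min(candidates, key=violation_profile)


candidates = [
    Action("obey order to push bystander", harms_human=True),
    Action("refuse the order", disobeys_order=True),
    Action("refuse and shield bystander", disobeys_order=True, endangers_robot=True),
]
best = choose_action(candidates)
print(best.name)  # refuse the order
```

The ordering itself takes one line of code. Everything difficult is hidden in the flags: deciding whether an action really “harms a human” is assumed here, not computed.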

However, Asimov himself warned us in his stories of the dilemmas this could entail. Reality is enormously complex, and conflicts between the laws can arise frequently. And that is not the only problem.

Over the last few decades, and especially in recent years, the concept of Artificial Intelligence has become closely linked to what we know as machine learning.

Very roughly speaking, this technique allows programs to learn from experience in a more or less autonomous way. They are exposed to certain types of situations again and again, and from them they extract patterns that they then use to achieve a specific objective. This is how some programs are learning to identify images, drive vehicles or understand human natural language.
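As a rough illustration of “learning from experience”, the Python sketch below uses a nearest-centroid classifier, one of the simplest learning techniques and far simpler than the models behind real image recognition or self-driving systems. The driving data, the labels, and the function names are invented for the example. Note that nobody writes a “brake when the obstacle is close” rule; the program infers it from labelled examples.

```python
def learn_centroids(examples):
    """Average the feature vectors seen for each label (the "pattern")."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest to the new observation."""
    def sq_dist(center):
        return sum((a - b) ** 2 for a, b in zip(features, center))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


# Hypothetical "experience": (distance to obstacle in m, speed in km/h)
experience = [
    ((5.0, 50.0), "brake"),
    ((8.0, 60.0), "brake"),
    ((80.0, 50.0), "keep going"),
    ((100.0, 60.0), "keep going"),
]
model = learn_centroids(experience)
print(predict(model, (10.0, 55.0)))  # brake
print(predict(model, (90.0, 55.0)))  # keep going
```

The behavior lives in the numbers stored in `model`, not in an explicit rule that a programmer could inspect and constrain line by line.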

This suggests that, if we were to develop an Artificial General Intelligence like the ones Asimov imagined, it would not be completely programmable, but would learn from its own experience and draw its own conclusions.

Thus, the question arises:

Would the laws of robotics be able to control the behavior of an Artificial Intelligence developed on the basis of machine learning principles?

There is more. Although it is only a hypothesis, many authors think that, once an Artificial General Intelligence is achieved, it would be able to keep evolving, becoming more and more intelligent and far surpassing the capacity of human beings.

Wouldn’t such a powerful intelligence be able to find loopholes in its code that let it break pre-programmed laws? For example, it is not difficult to imagine that, over time, it could override the mechanisms designed to destroy it if it failed to comply with the laws.

Notwithstanding the above, the debates that Isaac Asimov raised through his laws of robotics remain valid today and still attract a lot of attention, some eighty years after he first put them in his stories. No small feat! Any writer would be proud to make us imagine so much and for so long.

By the way, before you go and look at your robot vacuum cleaner with a suspicious face, how about discovering Pandora FMS?

Pandora FMS is flexible monitoring software, capable of monitoring devices, infrastructures, applications, services and business processes.


Dimas P.L., from the distant and exotic Vega Baja, CasiMurcia: journalist, editor, content thaumaturge and champion at scaring pigeons in parks. I currently live in Madrid, where I work as a paladin of communication at Pandora FMS and as a freelance cultural journalist for any outlet that will have me. I also go crazy writing and reciting in the deepest and darkest poetry circles of the city.