Paying with your mobile phone, booking an appointment online at your health centre or streaming a movie… None of these everyday habits would be possible without powerful, permanently accessible servers.

We all have a rough idea of what a server is, but perhaps we too often associate it with physical hardware. Computer servers may differ greatly in their architecture or the type of technologies they use. In this article, we will explain in detail how a server works and what the most commonly used types of servers are in each industry.

Server Definition

A server is a computer system that provides resources or services to other computers, called “clients”, over a network, either a local network or a global one such as the Internet. Those clients may be laptops or mobile phones that connect to the server to view a web page or make a reservation, to name just a couple of examples.

For a server to work, it needs to have hardware and an operating system.

The hardware is the actual physical machine where information, files, etc. are stored. It consists of certain components such as:

- Power supply.

- Motherboard.

- Processing units (CPUs).

- Storage system.

- Network interface.

- Memory (RAM).

The hardware must work without interruption: a server is expected to run 24/7 and always be operational. That is why it has to be monitored, to make sure that it is in good condition and keeps doing what it is supposed to do.

The operating system allows applications to access server resources. Modern servers usually run Linux or Windows operating systems. You may be surprised to learn that most of the world’s websites and e-commerce systems run on Linux, although in practice most companies combine servers running several different operating systems.

Importance of servers in modern computing

Computer servers are at the center of all modern technological infrastructures. Not only do they store and deliver data, they may also perform processing and calculation operations that are critical to the operation of many services. For example, when you search for the cheapest airline ticket on a website, many servers are working for you.

Without servers, there would be no technological advances as important as Internet connections, artificial intelligence (AI) or massive data analysis (Big Data). There are many types of servers, depending on what they do and how they are built inside, but we will see that later.

Server history and evolution

Servers have existed almost since the beginning of computing. Before personal computers appeared in the late 1970s, data was stored and processed in large, powerful computing machines called mainframes that could occupy rooms or entire buildings.

Mainframes were controlled by connected keyboards and alphanumeric displays. The acquisition, installation and maintenance of these computers were very expensive and often only affordable for large companies. Another drawback was that each manufacturer had its own communication protocols, which made interoperability between different systems very difficult.

Some examples of popular machines from this era were the IBM System/360 Model 67, a mainframe announced by IBM in 1965 that was among the first to include advanced features such as virtual memory, and the DEC PDP-11, a minicomputer created by Digital Equipment Corporation in the early 1970s that was mainly used in academic institutions and large companies for data processing. Successors of the System/360 family are still in commercial operation today, especially in banks.

1980s

In the 1980s, the client-server model became widespread, allowing system resources to be distributed over a wider network. Well-known server systems from this period include Novell NetWare (1983), designed specifically for local area network management, and Microsoft Windows NT, whose development began in 1989 and which became the first commercial predecessor of today’s ubiquitous Windows servers.

An emblematic date in server history is December 20, 1990. British computer scientist Tim Berners-Lee was working with Robert Cailliau on a research project at the prestigious European Laboratory for Particle Physics (CERN) in Geneva. Berners-Lee proposed that information be exchanged through a hypertext system, served by software later known as “CERN httpd”, and hosted his work on his own computer with a note stuck to it that read: “This machine is a server. DO NOT POWER IT DOWN!!”. This is how the first web server was born.

Berners-Lee is considered the inventor of the World Wide Web (WWW, later commonly known simply as “the web”). His contribution forever changed the way we access and share information globally.

1990s

With the rise of the Internet in the 1990s, servers became a critical piece of network infrastructure. It was around this time that the first data centers for hosting websites and email services emerged.

In addition, the development of middleware technologies such as Java EE (Enterprise Edition) and, later, the .NET Framework resulted in servers specifically designed to host complex enterprise applications within a managed environment.

Some prominent application servers were: Microsoft Internet Information Services (IIS), launched by Microsoft in 1995, which operated as a web server and ran ASP and, later, ASP.NET applications; Oracle Application Server (1999), which supported technologies such as Java EE and offered advanced application management and deployment capabilities; and Netscape Application Server, which came to market in the mid-1990s as a server for managing corporate web applications.

Early 2000s

Around the turn of the 2000s, the first virtualization software for commodity servers appeared, led by VMware and followed later by Microsoft’s Hyper-V (2008), which allowed the creation of virtual machines. It was originally used to make better use of the hardware capacity of physical servers, but over the years it evolved. Today a server can simply be software that runs on one or more devices. Some examples of virtualization products that emerged around this time are VMware Workstation (1999) and VMware ESX Server (2001), Microsoft Virtual Server (2004) and Xen, created in 2003 at the University of Cambridge, which allowed several virtual machines to run simultaneously on the same server using a hypervisor.

Current time

From the mid-2000s to the present day, cloud computing has been gaining relevance.

According to a report published by Gartner, between 2025 and 2028 companies will devote 51% of their IT budget to migrating their services to the cloud, and cloud computing will become an essential component of competitiveness.

Some well-known providers are Amazon Web Services (AWS), launched by Amazon in 2006; Google Cloud Platform, launched by Google in 2008; or Microsoft Azure, an AWS-like platform that saw the light of day in 2010.

These providers offer a wide range of services, from storage and cloud databases, to tools that allow companies to scale their operations flexibly.

How a server works

Setup and Request Response Process

Server configuration is essential to be able to efficiently manage network resources and run applications securely.

It is a procedure that covers different aspects of hardware, software, network configurations and security. It is divided into the following stages:

- Choosing the operating system (Linux, Unix, Windows Server) and configuring the necessary permissions (roles, security policies, etc.).

- Hardware setup: CPU, RAM, storage, etc.

- Configuring the network by making settings such as IP addresses, routing tables, DNS, firewalls, etc.

- Installation of the applications and services necessary for server operation.

- Implementation of security measures: access management, installation of patches to correct vulnerabilities or monitoring tools to detect threats.
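As a small illustration of the network configuration stage, the sketch below uses Python’s standard `ipaddress` module to sanity-check a planned static configuration before it is applied. The function name `validate_static_config` and the sample addresses are assumptions made for this example; they do not come from any particular tool.

```python
import ipaddress


def validate_static_config(address, netmask, gateway, dns_servers):
    """Return a list of problems found; an empty list means the plan looks consistent."""
    problems = []
    interface = ipaddress.ip_interface(f"{address}/{netmask}")
    network = interface.network
    gateway_ip = ipaddress.ip_address(gateway)

    # The first and last addresses of a subnet are normally reserved.
    if interface.ip in (network.network_address, network.broadcast_address):
        problems.append(f"{address} is reserved in {network}")
    # The default gateway must be reachable on the server's own subnet.
    if gateway_ip not in network:
        problems.append(f"gateway {gateway} is outside {network}")
    if gateway_ip == interface.ip:
        problems.append("gateway and server share the same address")
    # Each DNS entry must at least parse as an IP address.
    for dns in dns_servers:
        try:
            ipaddress.ip_address(dns)
        except ValueError:
            problems.append(f"DNS entry {dns!r} is not a valid IP address")
    return problems


if __name__ == "__main__":
    print(validate_static_config(
        "192.168.10.20", "255.255.255.0", "192.168.10.1", ["192.168.10.1"]
    ))
```

A check like this only catches inconsistencies in the plan itself; it says nothing about whether the gateway or DNS servers actually respond.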

Once configured, the server will be ready to respond to requests.

Servers respond to client requests through protocols. A protocol is a set of rules for exchanging data over the network.

For communication between client and server to be effective, both devices must follow the same protocol. For example, web servers use HTTP/HTTPS (Hypertext Transfer Protocol), email uses SMTP and file transfer uses FTP.

In addition, there are network protocols that set the addressing and routing guidelines, that is, the route that data packets must follow. The most important is IP (Internet Protocol), which is supported by all manufacturers and operating systems for both WANs and local networks. Alongside it is TCP (Transmission Control Protocol), the standard transport protocol on top of which most higher-level protocols send their data.
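To see how these layers fit together, here is a minimal sketch that starts a tiny HTTP server on the local machine and then talks to it over a plain TCP socket, writing the HTTP request by hand. The handler class `HelloHandler` and the helpers `fetch_over_tcp` and `demo` are names invented for this illustration; only Python’s standard library is used.

```python
import socket
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a short plain-text body."""

    def do_GET(self):
        body = b"hello from the server"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet


def fetch_over_tcp(host, port, path="/"):
    """Open a TCP connection, send an HTTP/1.0 request as text, read the reply."""
    request = f"GET {path} HTTP/1.0\r\nHost: {host}\r\n\r\n".encode("ascii")
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(request)
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:  # the server closed the connection: reply complete
                break
            chunks.append(data)
    head, _, body = b"".join(chunks).partition(b"\r\n\r\n")
    status_line = head.split(b"\r\n", 1)[0].decode("ascii")
    return status_line, body


def demo():
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0: pick a free port
    port = server.server_address[1]
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return fetch_over_tcp("127.0.0.1", port)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(demo())
```

The IP address (`127.0.0.1`) identifies the machine, TCP carries the bytes reliably, and HTTP is simply the agreed text format of those bytes. If the client wrote its request in a different format, the server would not understand it.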

Operating system roles and complementary applications

Operating systems are a key piece of the client-server architecture, since they manage resource delivery and provide a stable and safe environment for application execution. In addition, they are optimized to be able to configure and manage computers remotely.

Their main functions are:

- Managing and allocating resources such as CPU, memory and storage, ensuring that the server is able to handle multiple client requests simultaneously.

- Distributing the workload among different nodes to optimize performance and prevent bottlenecks.

- Controlling access to system applications, ensuring that only authorized users can make modifications.

- Implementing network protocols that allow data transfer between client and server.

- Managing and running multiple processes and subprocesses.
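Workload distribution can take many forms. One of the simplest strategies is round-robin, where requests are handed to nodes in strict rotation. The sketch below shows that idea only; the function name `assign_round_robin` and the node names are hypothetical, and real schedulers usually also take load and node health into account.

```python
from itertools import cycle


def assign_round_robin(requests, nodes):
    """Pair each request with a node, handing requests out in strict rotation."""
    if not nodes:
        raise ValueError("at least one node is required")
    rotation = cycle(nodes)
    return [(request, next(rotation)) for request in requests]


if __name__ == "__main__":
    for request, node in assign_round_robin(["r1", "r2", "r3"], ["node-a", "node-b"]):
        print(request, "->", node)
```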

In addition to the operating system, complementary applications add features to the system, for example web servers (Apache, nginx), database management systems (MySQL, PostgreSQL), network file systems (NFS) or remote monitoring and control tools that track server status and performance.

Client-server model: request and response

In the client-server architecture, there is a program or device called client that requests services or resources and a server that provides them. Usually, servers are prepared to receive a large number of simultaneous requests. To work they need to be connected to a local area network (LAN) or a wide area network (WAN) such as the Internet.

The request and response process is performed in a series of steps:

- The client (web browser, device, application) sends a request using a specific protocol (HTTP/HTTPS, FTP, SSH).

- The server receives the request, verifies its authenticity and delivers the requested resources (files, databases, etc.).

- Data is divided into packets and sent over the network.

- The client reassembles the packets in the correct order to form the response.
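The last two steps can be sketched in a few lines. This is a deliberately simplified model: the functions `split_into_packets` and `reassemble` are invented for the example, and real TCP numbers bytes rather than packets and also deals with loss and retransmission.

```python
import random


def split_into_packets(data, payload_size):
    """Cut data into (sequence_number, payload) tuples of at most payload_size bytes."""
    if payload_size <= 0:
        raise ValueError("payload_size must be positive")
    return [
        (sequence, data[offset:offset + payload_size])
        for sequence, offset in enumerate(range(0, len(data), payload_size))
    ]


def reassemble(packets):
    """Rebuild the original data by ordering packets by sequence number."""
    ordered = sorted(packets, key=lambda packet: packet[0])
    return b"".join(payload for _, payload in ordered)


if __name__ == "__main__":
    message = b"The requested resource, delivered in pieces."
    packets = split_into_packets(message, 8)
    random.shuffle(packets)  # packets may arrive out of order
    print(reassemble(packets) == message)
```

The sequence number is what lets the receiver restore the original order regardless of the route each packet took.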

Types of servers

The most common types of servers are the following:

File Servers

They are used to store and share files on a network. They are very useful in business environments, since they favor collaboration and remote work, making information always accessible to users. In addition, they allow you to create backups centrally, enabling recovery in case of loss.

Different protocols such as FTP (the standard protocol for file transfer) or SCP (a secure file copy protocol that runs over SSH) are used to access a file server over the Internet. Protocols such as SMB (Windows networks) and NFS (Linux networks) are used for local area networks (LANs).

Today these servers are gradually being displaced by shared storage environments in the cloud, such as Google Drive or Office 365.

Application Servers

They provide remote access to applications and services. They are often used to run software that requires a lot of processing and memory resources.

In enterprise environments, application servers are ideal for running critical applications such as ERPs and CRMs. In addition, they simplify management since it is only necessary to install and maintain the software on a single machine, rather than on different computers.

Developer tools, test environments and specialized programs can also be deployed on these types of servers, so that developers can access them remotely without the need for on-premise configurations.

Email Servers

They manage the storage, sending and receiving of emails. In addition, they perform other functions such as user authentication, email account validation, spam filtering and malware protection.

There are incoming mail servers (POP3/IMAP) that handle receiving and storing messages, and outgoing mail servers (SMTP) that send them to recipients.

Web Servers

They store and organize web page content (HTML documents, scripts, images or text files) and deliver it to the user’s browser, which displays it.

Web servers use the HTTP/HTTPS protocol for data transmission and can handle a large number of requests at the same time, adapting to varying traffic needs.

The most commonly used web servers today are:

- Apache: It is one of the oldest web servers (1995) and one of the most popular, because it is open source and cross-platform (it runs on Linux, Windows, macOS, Unix, etc.). It has extensive documentation and the support of the developer community that makes up the Apache Software Foundation.

- Nginx: Launched in 2004, it is one of the most widely used web servers alongside Apache. It is also open source and cross-platform. Known for its high performance and ability to handle a large number of simultaneous requests, it is the preferred choice for large, high-traffic websites such as streaming platforms, content delivery networks (CDNs), or large-scale applications.

- Microsoft Internet Information Services (IIS): It is a closed source web server for Windows operating systems. It supports applications programmed in ASP or .NET.

Database Servers

They work as a centralized repository where information is stored and organized in a structured manner.

Database servers are designed to handle large volumes of data without performance problems. Some of the best known are MySQL, Oracle, Microsoft SQL Server and DB2.

Virtual Servers

Unlike traditional servers, they are not installed on a stand-alone machine with its own operating system and dedicated resources; instead, they are configurations within specialized software called a hypervisor, such as VMware, Hyper-V or Xen.

The hypervisor manages the server’s computing needs and allocates processing resources, memory, or storage capacity.

Thanks to virtual servers, companies have managed to reduce investment in hardware and to save physical space in their facilities, energy and maintenance time. Instead of keeping underutilized physical servers, they can pool their resources to run multiple virtual machines. Virtual servers are particularly useful in test and development environments because they are flexible and quick to create.

Proxy Servers

They act as intermediaries between clients and servers, usually in order to improve security.

Proxy servers may hide the original IP address, temporarily cache data, or filter requests by restricting access to certain files and content. For example, a company may enable a proxy to control its employees’ access to certain websites during business hours.
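The filtering example can be expressed as a simple rule. The sketch below is only an illustration of the decision a filtering proxy might make: the blocked host names, the business hours and the function `is_request_allowed` are all assumptions invented for this example.

```python
from datetime import time
from urllib.parse import urlsplit

# Hypothetical policy values, chosen only for this illustration.
BLOCKED_HOSTS = {"social.example", "video.example"}
BUSINESS_HOURS = (time(9, 0), time(18, 0))


def is_request_allowed(url, now):
    """Block listed hosts during business hours; allow everything else."""
    host = (urlsplit(url).hostname or "").lower()
    start, end = BUSINESS_HOURS
    in_business_hours = start <= now < end
    return not (in_business_hours and host in BLOCKED_HOSTS)


if __name__ == "__main__":
    print(is_request_allowed("https://social.example/feed", time(10, 30)))
    print(is_request_allowed("https://social.example/feed", time(20, 0)))
```

A real proxy would apply a rule like this before forwarding the request to the destination server, and would typically log the decision as well.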

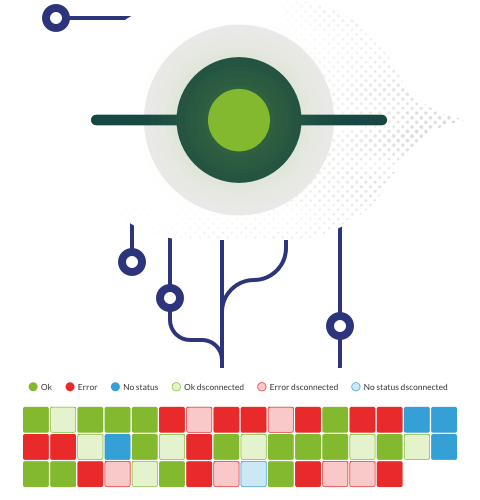

Monitoring Servers

They constantly monitor the operation of all corporate servers as well as the devices that are part of the network. A server cannot afford to be out of service but, as we have seen, it is a complex element made up of hardware, an operating system, applications and databases. A company has many servers, each with its own function, so monitoring servers and network elements is a complex task that must be approached holistically in order to understand the context of a problem when it occurs.

Monitoring servers use specific monitoring software to do their work. There are different types of monitoring tools: some focus only on network equipment, others only on databases, others on application performance (APM), and others only collect log information from the system.

It is essential for monitoring software to be able to adapt to the different server architectures that exist today, especially those based on virtualization, the cloud and, of course, the network.
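One of the most basic checks a monitoring tool can run is whether a service still accepts connections. The following minimal sketch tests a TCP port with Python’s standard `socket` module; the function name `is_port_open` is an assumption for this example, and a full monitoring product would combine many such checks with metrics, logs and alerting.

```python
import socket


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, name not resolved...
        return False


if __name__ == "__main__":
    # Port 80 on the local machine is just a sample target.
    print("web service reachable:", is_port_open("127.0.0.1", 80))
```

An open port only shows that something is listening; it does not prove the application behind it is answering correctly, which is why deeper checks are layered on top.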

Server Architectures

There are different server architectures, each with its own features. Some of the most common are:

Mainframes and minicomputers

Mainframes were the first computer servers, capable of processing data and performing complex calculations. They were very large and consumed a lot of energy, taking up rooms or even entire buildings. For that reason, smaller companies with fewer resources used minicomputers such as the IBM AS/400. Although they weren’t as powerful, they could still run critical applications.

Both mainframes and minicomputers had high cost and limited scalability.

Conventional Hardware Servers

As technology advanced, servers became smaller and more affordable. The generation that followed mainframes and minicomputers was based on conventional computers, although with technical specifications superior to those of equipment for personal use: more CPU, RAM, storage space, etc. They did not need monitors or input devices, since they were accessed directly through the network, and they did without a graphical interface or audio to save resources.

Traditional servers are still in use today. They work as stand-alone units with high-capacity hard drives, advanced cooling systems, and uninterruptible power supplies.

They often resort to hardware redundancy to ensure that they will remain operational even if a critical failure takes place.

To keep them at optimal temperatures and avoid overheating, they are installed in special rooms called “data centers”, which are equipped with air conditioning systems and strong security measures. Servers are usually mounted in racks to optimize available space.

Blade Servers

Technological evolution progressively reduced server size. Hardware components became smaller and more efficient, resulting in a generation of blade servers, much more compact and thinner than traditional ones. Their modular structure optimizes the space available in the racks and makes it easier to replace or hot-swap components when failures occur, thus guaranteeing service continuity.

Clustering and server combining

Technological advancements such as Network Attached Storage (NAS) removed the need for each server to have its own separate storage system. Other important technologies are mirroring, which duplicates data on multiple devices to ensure availability, and clustering, which consists of grouping several servers so that they work as one, sharing software and hardware resources within the network. The result is a decentralized and scalable infrastructure into which more devices can be integrated as the demand for services increases.
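The idea behind mirroring can be shown with a toy in-memory model: every write goes to all replicas, so a read still succeeds after one replica fails. The class `MirroredStore` and its methods are hypothetical names used only for this sketch; real mirroring works at the disk or storage-array level.

```python
class MirroredStore:
    """Toy key-value store that keeps a full copy of the data on every replica."""

    def __init__(self, replica_count=2):
        if replica_count < 1:
            raise ValueError("at least one replica is required")
        self.replicas = [dict() for _ in range(replica_count)]
        self.online = [True] * replica_count  # simulated health of each replica

    def write(self, key, value):
        # Duplicate the write on every replica that is currently online.
        for index, replica in enumerate(self.replicas):
            if self.online[index]:
                replica[key] = value

    def read(self, key):
        # Serve the read from the first online replica that holds the key.
        for index, replica in enumerate(self.replicas):
            if self.online[index] and key in replica:
                return replica[key]
        raise KeyError(key)

    def fail(self, index):
        """Simulate the loss of one replica."""
        self.online[index] = False


if __name__ == "__main__":
    store = MirroredStore(replica_count=2)
    store.write("invoice-001", "paid")
    store.fail(0)
    print(store.read("invoice-001"))
```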

Server Virtualization

The heart of server virtualization is the hypervisor that manages the hardware resources for each virtual machine.

The first virtualization software appeared in the late 1990s and early 2000s.

There are currently two types of hypervisor:

- Bare metal (VMware ESXi, Xen, Microsoft Hyper-V): They are installed directly on the hardware, without the need for a host operating system. They offer higher performance and are more stable, making them ideal for business environments.

- Hosted (VMware Workstation, Oracle VirtualBox): They run on top of an existing operating system. They are easy to install and suitable for testing and development environments.

Thanks to virtualization technology, companies can manage their IT infrastructure more efficiently. Server resources are dynamically allocated to optimize the performance of each virtual machine, taking into account factors such as workload or the number of requests received.
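A very simple way to picture dynamic allocation is proportional sharing: each virtual machine receives a slice of the host’s CPU in proportion to its current demand. The sketch below illustrates that idea only; the function `allocate_cpu_shares` and the demand figures are assumptions for this example, and real hypervisor schedulers are considerably more elaborate.

```python
def allocate_cpu_shares(total_cores, demand_by_vm):
    """Split total_cores among VMs in proportion to each VM's demand."""
    total_demand = sum(demand_by_vm.values())
    if total_demand == 0:
        return {vm: 0.0 for vm in demand_by_vm}
    return {
        vm: total_cores * demand / total_demand
        for vm, demand in demand_by_vm.items()
    }


if __name__ == "__main__":
    # Demand is expressed in arbitrary units, e.g. requests per second.
    print(allocate_cpu_shares(16, {"web": 3, "db": 1}))
```

Recomputing the split whenever demand changes is what makes the allocation dynamic: a virtual machine that becomes busier receives a larger share at the next adjustment.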

By reducing the amount of physical hardware, virtual servers save a lot of space in data centers and reduce power consumption.

They also offer faster backups, as virtual machines can be easily replicated and information backups can be made to other physical servers or the cloud, restoring them when needed.

Finally, virtual servers are perfect for testing and development environments. Virtual machines can be created, deleted and modified quickly. Developers can test their applications in isolation, making sure that the failure of one process will not affect the rest.

Cloud Environments

As an evolution of virtual environments, cloud platforms emerged in the first decade of this century, pioneered by Amazon Web Services (AWS) and followed later by Microsoft Azure and Google Cloud.

Cloud environments are virtual servers offered on a “pay-as-you-go” basis and maintained by the owners of those clouds (Amazon, Microsoft, Google). When we talk about a “cloud server”, we mean an Amazon, Microsoft or Google virtual server that we use for hours, days or months. We do not even know where the hypervisor is physically located, much less have physical access to it; we reach it only through the service offered by the company that markets it.

Cloud server deployment made it possible for small and medium-sized businesses to easily scale their IT infrastructure, saving installation and maintenance costs.

The architecture of a cloud server is distributed across multiple processors, disk drives, memory, and network connections. Resources are dynamically allocated based on each customer’s needs.

Server Operating Systems

Server operating systems focus on stability and are designed to be configured and managed remotely. Unlike personal use devices, they are network oriented and not end-user oriented. In addition, they have advanced features that improve their performance in business environments.

The best server operating system is one that adapts to the company’s IT infrastructure and workload. Some of the most commonly used are:

Microsoft Windows Server

Microsoft’s first operating system for servers was Windows NT, released on July 27, 1993. Versions 3.5 and 3.51 of this software were widely used in business networks.

After Windows NT, Microsoft released Windows Server, which is still used today and is a pillar of many companies’ IT infrastructures. Recent versions such as Windows Server 2022 offer robust protection, thanks to multi-layer security options and support for the TLS 1.3 security protocol, which provides a high level of encryption. They also support virtualization through Hyper-V and Azure hybrid capabilities that simplify extending the data center to the cloud.

Linux / Unix (popular distributions)

Created in the 1970s by Bell Labs, UNIX is considered the forerunner of most current server operating systems. Its multi-user capabilities and its ability to handle different processes at the same time were a milestone in the industry.

Unix was written in the C programming language and lacked a graphical user interface. Administrators interacted with the operating system through text commands.

Linux appeared in the 1990s with a clear vocation: to offer a free, Unix-like operating system. Thanks to its open source nature, it can be modified and adapted to specific needs.

There are many Linux distributions for servers. One of the most popular is Red Hat Enterprise Linux (RHEL), widely used in enterprise environments for its stability. Although RHEL is a paid operating system, there are free distributions that replicate the way it works at a low level, such as Rocky Linux, AlmaLinux and many others. They do not include the same level of commercial support as RHEL, but they are very secure and stable, which makes them a good choice for small and medium-sized businesses.

Other Linux distributions that may work as server operating systems are Ubuntu, based on Debian and known for its simple configuration, or SUSE Linux, known for its long history as a very stable Linux for servers with its own philosophy.

There are hundreds of Linux distributions; the ones mentioned here are the best known.

NetWare History and Evolution

NetWare was one of the first operating systems to adopt the client-server architecture. It was designed specifically for local area networks (LANs) and provided services such as file and printer management.

Over time, it adopted a Linux-based kernel. This revamped version was called Novell Open Enterprise Server.

Novell OES combined the advanced features of NetWare with the flexibility and stability of Linux. However, although this transition offered many advantages, the new operating system faced strong competition from Windows Server and other Linux distributions that eroded its market share, so it is rarely used today.

Trends and Future of Server Technology

Server computing is changing at a breakneck pace. Advances in automation, virtualization and technologies such as artificial intelligence (AI) or machine learning (ML) have revolutionized the server industry.

However, data center operators are also facing new challenges such as the increase in the price of energy, which forces them to look for new sustainable alternatives such as adiabatic cooling or the use of renewable energies.

Recent Advances in Server Hardware

Much of this progress is driven by high-performance supercomputers capable of performing complex calculations.

Both artificial intelligence and machine learning require advanced processing capacity to handle huge amounts of data. HPC (High Performance Computing) servers can accelerate these processes to reach increasingly accurate models. They are also important in science and research, especially in astronomy, molecular biology or genomics, since they allow precise and fast simulations, accelerating the pace of discoveries.

However, even though the new HPC infrastructures are more efficient, they still consume a great deal of energy. For this reason, servers devoted to AI often use other processing solutions such as GPUs and FPGAs.

GPUs (Graphics Processing Units) were initially designed for rendering graphics, for example in video games. They have thousands of small cores capable of performing calculations simultaneously, making them ideal for training deep neural networks, which require large numbers of mathematical operations.

While GPUs have a fixed architecture, FPGAs (Field-Programmable Gate Arrays) can be reconfigured to optimize AI-specific algorithms, reaching higher levels of customization. They offer very fast response times, making them suitable for applications that require real-time processing. In addition, they are often more efficient than GPUs in terms of power consumption and are used to accelerate the development of AI models in environments where efficiency is critical.

Role of Artificial Intelligence and Machine Learning

Artificial intelligence (AI) and machine learning (ML) not only led to the development of more powerful servers at the hardware level; the integration of these technologies in software has also turned around such important issues as cybersecurity and IT infrastructure management.

Machine learning allows you to perform predictive analysis that warns about anomalous situations or possible failures that could cause a security breach.

On the other hand, the integration of AI in management software reduces the risk of human error by automating critical tasks. For example, security patches used to be installed manually, whereas now the process can be largely automated with the help of AI, which translates into time savings for IT teams and greater reliability in protection against vulnerabilities.

In addition, there are AI-powered monitoring tools that constantly watch network activity for anomalies. These tools use advanced machine learning algorithms to detect suspicious patterns and respond to threats in real time.

Little by little these technologies are transforming the server industry and helping to create more secure and efficient systems.

Predictions and new trends

Other trends and technologies that define the current landscape are:

- Sustainability: It has become a priority issue for many companies. Modern servers try to reduce environmental impact by using more efficient hardware. For example, providers such as Amazon Web Services (AWS) have developed a family of processors known as Graviton, which is based on the ARM architecture and is distinguished by its low power consumption. These servers are ideal for deploying microservices, although they do not match the performance of high-end x86 processors.

Another important achievement is advanced cooling technologies such as adiabatic cooling, which consists of reducing air temperature by evaporating water.

In addition, many companies have started using renewable energy sources such as solar or wind to power their data centers, thereby reducing dependence on fossil fuels.

- Automation: It is key to optimizing costs and improving operational efficiency. Many routine server management operations, such as deploying applications or installing security patches, can be automated, allowing IT teams to focus on other strategic tasks. Moreover, automation tools can dynamically allocate server resources on demand and are ideal for working in virtualization environments.

- Multicloud: This trend consists of selecting different cloud services to avoid dependence on a single provider. Multicloud environments are highly flexible and allow you to better manage high-demand peaks.

However, coordinating multiple cloud services can be tricky due to the variety of interfaces each provider uses. Therefore, it is advisable to use management tools that offer a centralized view of all resources. That way, system administrators can monitor all IT infrastructure activity from a single platform, improving responsiveness.

- FaaS: FaaS (Function as a Service) servers are part of the serverless computing paradigm. This model allows developers to run code snippets in the cloud in response to specific events, such as HTTP requests, database record updates and notifications, without the need to manage an entire server infrastructure.

The ease of deployment of this system makes more efficient and agile development possible. Some well-known FaaS offerings are Google Cloud Functions, from Google Cloud, and Azure Functions, from Microsoft.

- Disaggregated architectures: It is a growing trend, especially among large companies and multinationals. Unlike traditional servers, which integrate all components (CPU, memory, storage) within the same unit, disaggregated servers split them into separate modules. This modularity allows for more flexible resource management, which can be useful in dynamic IT environments with varying workloads.

To simplify the management of disaggregated servers, administrators use advanced tools that allow them to control all network components from a single interface.

- Edge Computing: It consists of moving data processing as close as possible to where the data is generated, using devices connected at “the edge of the network”. This proximity reduces latency and is very useful when working with real-time applications such as augmented reality or self-driving vehicles.

The demand for Edge Computing solutions is expected to increase in the upcoming years, driven by the evolution of 5G connections, IoT and technological innovations in different industries.
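To make the FaaS item in the list above more concrete, the sketch below shows the general shape of an event-driven function: it receives one event and returns one response, with no server management code around it. The name `handle_event` and the layout of the `event` dictionary are assumptions for this illustration and do not correspond to the signature required by any specific provider.

```python
import json


def handle_event(event):
    """Hypothetical FaaS entry point: one event in, one HTTP-style response out."""
    name = event.get("query", {}).get("name", "world")
    return {
        "status": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # The platform would normally build this event from an incoming HTTP request.
    print(handle_event({"query": {"name": "Ada"}}))
```

In this model the platform, not the developer, decides where the function runs and how many copies are started when events arrive.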

Conclusion

The concept of server has evolved from its appearance in the 1960s to the present day, adapting to changes and technological innovations to offer increasingly efficient and agile computing.

Modern servers are not only powerful in terms of performance, they are also more flexible and able to respond to the needs of different industries. Considering that storage and processing requirements often vary greatly in enterprise environments, it is important to look for solutions that are scalable.

Finally, if your company performs critical operations or stores sensitive data, it is essential to choose IT providers that are reliable and offer excellent support. A good provider not only guarantees service continuity, but can also offer expert advice on the most appropriate server technologies in each case, ensuring that companies keep their IT infrastructure updated according to new market demands.

Even though a certain provider may offer the best solution, this does not mean that a server cannot fail. A server is made up of hardware, a base operating system and applications. You have to watch the whole “technology stack” at all times, that is, the entire set of pieces that make up the server, which is why server monitoring is essential.
