HA Environments with Percona XtraDB Cluster

We are working on the translation of the Pandora FMS documentation. Sorry for any inconvenience.

Introduction

Pandora FMS is based on a MySQL database to configure and store data. We know that to scale (grow) “horizontally” there is the Pandora FMS Metaconsole for monitoring all the necessary devices. However, there are certain scenarios that may remain too small for the use of the Metaconsole, with the difficulty that it causes in its management, as well as they may be too large, such as storing all the data in a single database.

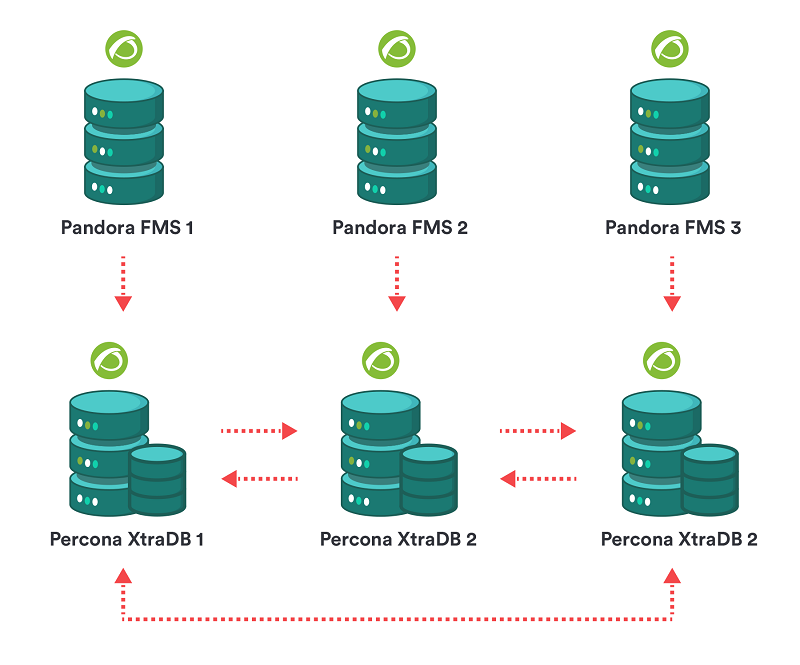

To solve this problem, we rely on Percona XtraDB Cluster, which offers the possibility of using it in multimaster mode (several main servers) for the reading and writing tasks of said servers. In this way, the load is distributed among the different servers where this cluster is hosted, giving more processing capacity to the database than a standalone node (single node) can offer. This way you can increase the number of metrics to monitor in a single Pandora FMS node.

The representation of this scheme offers the possibility of installing on the same server, a replication node of Percona XtraDB Cluster together with the Pandora FMS server and the Web Console or separate the installation of the same. It will suffice to point each of the Pandora FMS servers or web consoles to the node where it needs to write or read, being able to distribute the load of the environment as is most convenient in each environment.

The minimum number of nodes for the cluster to work is 3 nodes and there must always be 2 of them active for the database to be operational.

Percona XtraDB Cluster Installation

In order to perform a successful Percona XtraDB Cluster installation, the Percona XtraDB Cluster server and client must first be installed on all nodes.

The data of the nodes to be used in the example will be the following:

NODE 1:

pandora001 192.168.80.111

NODE 2:

pandora002 192.168.80.112

NODE 3:

pandora003 192.168.80.113

Execution on all nodes

# yum install Percona-XtraDB-Cluster-server-57 Percona-XtraDB-Cluster-client-57

Once installed on all nodes, start the service on each of them and configure a password that is common for the root user, as well as a user (in this case sstuser) that will be used to replication between all nodes. run:

# systemctl start mysqld

Obtain a temporary password for root and log into the MySQL server with it (remember that it must be run on all nodes):

# mysql -uroot -p$(grep "temporary password" /var/log/mysqld.log | \ rev | cut -d' ' -f1 | rev)> SET PASSWORD FOR 'root'@'localhost' = PASSWORD(' Pandor4!');

> UNINSTALL PLUGIN validate_password;

> SET PASSWORD FOR 'root'@'localhost' = PASSWORD('pandora');

Now proceed to create the user sstuser:

mysql> create user sstuser@'%' identified by 'pandora';> grant all on *.* to sstuser@'%'; > flush privileges; > quit

Stop the mysql service on all nodes after completing this configuration:

# systemctl stop mysqld

Once you have stopped all the servers, you must start one of them in bootstrap mode, so that it acts as the main server to perform the replication between the other two nodes.

To do this, you have to add a specific configuration in the node you choose as main, in this case the pandora001 node.

Modify the /etc/my.cnf file of the pandora001 node by correctly adding the parameters indicated between the two lines # Modified for PFMS:

[mysqld] # Modified for PFMS\ server-id=1 # Modified for PFMS/ datadir=/var/lib/mysql character-set-server=utf8 skip-character-set-client-handshake # Add configuration for Percona XtraDB Cluster. # Cache size depends on the server resources. Recommended 1G - 10 GB. # Modified for PFMS\ wsrep_provider_options="gcache.size=10G; gcache.page_size=10G" wsrep_provider=/usr/lib64/galera3/libgalera_smm.so wsrep_cluster_name=pandora-cluster wsrep_cluster_address=gcomm: wsrep_node_name=pandora001 wsrep_node_address=192.168.80.111 wsrep_sst_method=xtrabackup-v2 wsrep_sst_auth=sstuser:pandora # Modified for PFMS/ pxc_strict_mode=PERMISSIVE # Mysql optimizations for Pandora FMS node with 4G RAM max_allowed_packet = 64M innodb_buffer_pool_size = 1G innodb_lock_wait_timeout = 90 innodb_file_per_table innodb_flush_log_at_trx_commit = 0 innodb_flush_method = O_DIRECT innodb_log_file_size = 64M innodb_log_buffer_size = 16M innodb_io_capacity = 100 innodb_autoinc_lock_mode=2 thread_cache_size = 8192 thread_stack =256K max_connections = 8192 wait_timeout = 900 key_buffer_size=4M read_buffer_size=128K read_rnd_buffer_size=128K sort_buffer_size=128K join_buffer_size=4M query_cache_type = 0 query_cache_size = 0 sql_mode="" binlog_format=ROW expire_logs_days=1

Start the mysql service on node pandora001 with this configuration, in bootstrap mode:

# systemctl start mysql@bootstrap

In the pandora002 and pandora003 nodes, modify the /etc/my.cnf file, indicating the following parameters between the two lines # Modified for PFMS:

- Node

pandora002:

[mysqld] server-id=2 datadir=/var/lib/mysql character-set-server=utf8 skip-character-set-client-handshake # add configuration for Percona XtraDB Cluster. # Cache size depends on the server resources. Recommended 1G - 10 GB. # Modified for PFMS\ wsrep_provider_options="gcache.size=10G; gcache.page_size=10G" wsrep_provider=/usr/lib64/galera3/libgalera_smm.so wsrep_cluster_name=pandora-cluster wsrep_cluster_address=gcomm:pandora001,pandora003 wsrep_node_name=pandora002 wsrep_node_address=192.168.80.112 wsrep_sst_method=xtrabackup-v2 wsrep_sst_auth=sstuser:pandora pxc_strict_mode=PERMISSIVE # Modified for PFMS/ # Mysql optimizations for Pandora FMS max_allowed_packet = 64M innodb_buffer_pool_size = 1G innodb_lock_wait_timeout = 90 innodb_file_per_table innodb_flush_log_at_trx_commit = 0 innodb_flush_method = O_DIRECT innodb_log_file_size = 64M innodb_log_buffer_size = 16M innodb_io_capacity = 100 innodb_autoinc_lock_mode=2 thread_cache_size = 8192 thread_stack = 256K max_connections = 8192 wait_timeout = 900 key_buffer_size=4M read_buffer_size=128K read_rnd_buffer_size=128K sort_buffer_size=128K join_buffer_size=4M query_cache_type = 0 query_cache_size = 0 sql_mode="" binlog_format=ROW expire_logs_days=1

- Node

pandora003:

[mysqld] server-id=3 datadir=/var/lib/mysql character-set-server=utf8 skip-character-set-client-handshake # add configuration for Percona XtraDB Cluster. # Cache size depends on the server resources. Recommended 1G - 10 GB. # Modified for PFMS\ wsrep_provider_options="gcache.size=10G; gcache.page_size=10G" wsrep_provider=/usr/lib64/galera3/libgalera_smm.so wsrep_cluster_name=pandora-cluster wsrep_cluster_address=gcomm:pandora001,pandora003 wsrep_node_name=pandora002 wsrep_node_address=192.168.80.112 wsrep_sst_method=xtrabackup-v2 wsrep_sst_auth=sstuser:pandora pxc_strict_mode=PERMISSIVE # Modified for PFMS/ # Mysql optimizations for Pandora FMS max_allowed_packet = 64M innodb_buffer_pool_size = 1G innodb_lock_wait_timeout = 90 innodb_file_per_table innodb_flush_log_at_trx_commit = 0 innodb_flush_method = O_DIRECT innodb_log_file_size = 64M innodb_log_buffer_size = 16M innodb_io_capacity = 100 innodb_autoinc_lock_mode=2 thread_cache_size = 8192 thread_stack = 256K max_connections = 8192 wait_timeout = 900 key_buffer_size=4M read_buffer_size=128K read_rnd_buffer_size=128K sort_buffer_size=128K join_buffer_size=4M query_cache_type = 0 query_cache_size = 0 sql_mode="" binlog_format=ROW expire_logs_days=1

Start the mysqld service on the nodes pandora002 and pandora003 to start synchronization:

#systemctl start mysqld

At this point you can install the Pandora FMS database on node 1 and observe how it replicates correctly between the other two servers.

Once the correct operation of the replication has been verified, stop the node that you have started in bootstrap mode (in this case the pandora001 node):

(NODE1) # systemctl stop mysql@bootstrap

Change the parameter wsrep_cluster_address within the my.cnf file of NODE 1 to add the other 2 nodes of the cluster to it, it should be as follows:

wsrep_cluster_address=gcomm:pandora002,pandora003

Start the mysqld service again on the pandora001 node, but this time in normal mode, so that it synchronizes with the other 2 already active nodes.

systemctl start mysqld

Changes to the Pandora FMS scheme for its correct operation

To avoid problems with deadlocks (locks of records) due to constant readings and writings that occur in parallel, it is necessary to modify the following Pandora FMS tables with the following modifications:

alter table tagent_access add column `id_tagent_access` bigint(20) unsigned NOT NULL; alter table tagent_access add primary key (id_tagent_access); alter table tagent_access modify id_tagent_access bigint(20) auto_increment; alter table data_tagent add column `data_tag_id` bigint(20) unsigned NOT NULL; alter table data_tagent add primary key (data_tag_id); alter table data_tagent modify data_tag_id bigint(20) auto_increment; alter table tagent_data_inc add column `id_tagente_datos_inc` bigint(20) unsigned NOT NULL; alter table tagent_data_inc add primary key (id_tagent_data_inc); alter table inc_data_tagent modify inc_data_tag_id bigint(20) auto_increment; alter table taggent_data_string add column `taggent_id_data_string` bigint(20) unsigned NOT NULL; alter table tagente_datos_string add primary key (id_tagente_datos_string); alter table tagent_data_string modify id_tagent_data_string bigint(20) auto_increment; alter table inventory_data_tagent add column `inventory_data_tag_id` bigint(20) unsigned NOT NULL; alter table tagente_datos_inventory add primary key (id_tagente_datos_inventory); alter table inventory_data_tagent modify inventory_data_tag_id bigint(20) auto_increment; alter table torigen add primary key(`origin`); alter table user add primary key (`id_user`);

For its correct operation, these changes must be applied with the database without data, only with the schema.

Apply changes in an environment with previous data

Given this scenario, it is necessary to rebuild the tables where the necessary modifications have been added to avoid deadlocks in the environment. The process would be as follows:

1.- Make DUMP (export data) of all the tables involved separately, excluding the DROP and create TABLE fields:

#mysqldump -u root -p pandora tagent_access --skip-add-drop-table --complete-insert --no-create-info> tagent_access.sql #mysqldump -u root -p pandora data_tagent --skip-add-drop-table --complete-insert --no-create-info> data_tagent.sql #mysqldump -u root -p pandora tagente_datos_inc --skip-add-drop-table --complete-insert --no-create-info> tagente_datos_inc.sql #mysqldump -u root -p pandora tagente_datos_string --skip-add-drop-table --complete-insert --no-create-info> tagente_datos_string.sql #mysqldump -u root -p pandora tagente_datos_inventory --skip-add-drop-table --complete-insert --no-create-info> tagente_datos_inventory.sql

2.- Eliminate the affected tables:

mysql>drop table tagent_access; mysql>drop table tagent_data; mysql>drop table tagent_data_inc; mysql>drop table tagent_data_string; mysql>drop table tagente_datos_inventory;

3.- Create again all the tables with all the ALTER and with all the new columns so that the environment works without problems. To create the tables again it is necessary to use the create table of the file /var/www/html/pandora_console/pandoradb.sql of the tables tagent_access, tagente_datos_inc, ' 'tagente_data_string, tagente_data_inventory.

4.- Incorporate with source all the DUMP'' data created in step 1.

#mysql -u root -p pandora mysql> source tagent_access.sql: mysql> source tagent_data.sql: mysql> source tagent_data_inc; mysql> source tagent_data_string; mysql> source tagent_data_inventory;

At the end of the process, all the tables will have been left with the incremental ids created from 0 in all of them.

Home

Home