Sections

- What is Availability Monitoring and Why is it Essential?

- Key Uptime Monitoring Techniques

- SLA, SLO, SLI, and Their Relationship to Uptime Monitoring

- Comparison of Uptime Monitoring Tools

- The Limitations of Measuring Only Uptime: The Role of User Experience (UX/WUX)

- Criteria for Choosing the Best Uptime Monitoring Solution

Today, everything is faster than ever, but nothing is quicker than user impatience. And nothing feels as endless as an hour without documents, chats, or work applications, spent watching profits go down the drain.

Errors will always happen. The difference lies in mitigation, which starts with detecting any anomaly as early as possible. That is why we have put together this complete guide to uptime monitoring: what it is, techniques, tools, examples, strategies, criteria for choosing a good solution, and much more.

What is Availability Monitoring and Why is it Essential?

Uptime monitoring (also called availability monitoring) is a supervision system that checks that our digital services, ranging from websites to APIs, servers, or Industrial Control Systems, are operational at all times. Technically, it is a set of protocols and tools that automatically and continuously verify the health of IT infrastructure components.

This allows organizations to:

- Detect failures in real time, ideally before users notice them.

- Prevent service downtime through proactive alerts.

- Ensure compliance with our Service Level Agreements (SLAs), such as maintaining 99.99% uptime.

In practice, this involves establishing scheduled checks to evaluate:

- Network connectivity. Is the server responding to pings?

- Service functionality. Is the API returning the expected data when queried?

- Application accessibility and proper functioning, such as ensuring the website’s SSL certificate is valid.

For example, if a company uses Kubernetes, uptime monitoring doesn’t just verify that nodes are active but also ensures that critical pods, like an authentication service, are running correctly.

The Impact of Downtime: More Than Just Numbers

The cost of a service outage goes beyond direct financial losses. For instance:

- British Airways suffered a multi-day IT outage in May 2017 that stranded tens of thousands of passengers, at a cost its parent company put at around £80 million in compensation and lost operations.

- In 2020, an AWS failure disrupted services and websites just before Black Friday, indirectly affecting hospitals and logistics as well.

However, service interruptions add another critical cost that is difficult to quantify: the loss of customer trust. Widely quoted industry figures put the share of users who abandon a website that takes more than 2 seconds to load at 47%, and the share who don’t return after a bad experience at 80%.

In this context, uptime monitoring is no longer optional—it’s the minimum requirement to ensure continuity whenever possible.

How Do We Start?

The key is to combine basic techniques, like pinging the service, with more advanced strategies such as network monitoring and user experience (UX) and web user experience (WUX) monitoring to create a multilayered safety net.

Let’s explore the main techniques available to build this framework.

Key Uptime Monitoring Techniques

You can be alive, but barely hanging on. That’s why uptime monitoring isn’t limited to checking whether a server responds. We must apply techniques that, when combined, detect failures across various layers of infrastructure, starting with the basics.

Ping Monitoring (ICMP): The Foundation of Monitoring

This involves sending ICMP packets to a device to verify basic connectivity. It identifies total outages or network cuts if the ping does not receive a response.

But what if our WordPress site cannot connect to its database due to a configuration error? The ping will tell us the server is alive, but not that the site is failing. That’s why we need to implement other techniques.

Otherwise, relying solely on ping monitoring could turn into the meme of the dog sitting with a cup of coffee while everything around it burns. The server says, “Everything is fine” when asked, but chaos reigns all around.
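The basic ping check described above can be sketched in a few lines of Python. This is a minimal illustration, not a production monitor: it shells out to the system `ping` binary and assumes the Linux iputils flags (`-c`, `-W`), which differ on Windows and macOS. The function names `ping_command` and `host_responds` are hypothetical, chosen only for this example.

```python
import subprocess


def ping_command(host, count=1, timeout_s=2):
    """Build the ping invocation (assumption: Linux iputils flags)."""
    # -c: number of echo requests, -W: seconds to wait for each reply.
    return ["ping", "-c", str(count), "-W", str(timeout_s), host]


def host_responds(host, run=subprocess.run):
    """Return True if the host answered at least one ICMP echo request."""
    try:
        result = run(
            ping_command(host),
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=10,  # hard stop in case ping itself hangs
        )
    except (OSError, subprocess.TimeoutExpired):
        # ping binary missing, not permitted, or it never returned.
        return False
    return result.returncode == 0
```

A scheduler would call `host_responds("server.example")` at a fixed interval and raise an alert after a few consecutive failures. As the WordPress example shows, a `True` here only proves that the machine answers on the network, nothing more.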

HTTP/S Monitoring: The Next Step

This technique checks not only that the web service responds but also that it returns the expected status code (typically HTTP 200 OK) and presents a valid SSL/TLS certificate. When both hold, we have good evidence that end users can reach the website or API, although not yet that every feature works.

This approach is especially useful when, for instance, your WooCommerce store throws a 500 error on Black Friday—because Finagle’s law always applies: “Anything that can go wrong, will go wrong at the worst possible moment.”

In such cases, it’s also advisable to combine this with synthetic monitoring, simulating real transactions to verify their functionality and optimize user experience.
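As a rough sketch of an HTTP/S check, the Python snippet below probes a URL with the standard library and, separately, computes how many days remain before a certificate expires. The names `check_http` and `cert_days_left` are hypothetical, and the `not_after` string is assumed to come from the `notAfter` field returned by `ssl.SSLSocket.getpeercert()`.

```python
import ssl
import time
import urllib.error
import urllib.request


def check_http(url, timeout=5.0, expected_status=200):
    """Probe a URL once and return (ok, status, elapsed_ms)."""
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as err:
        status = err.code  # 4xx/5xx responses still carry a status code
    except (urllib.error.URLError, OSError):
        status = None  # no HTTP answer at all: DNS, TCP, TLS, or timeout
    elapsed_ms = (time.monotonic() - start) * 1000
    return status == expected_status, status, elapsed_ms


def cert_days_left(not_after, now=None):
    """Days until a certificate's notAfter date (negative if expired)."""
    expires = ssl.cert_time_to_seconds(not_after)
    now = time.time() if now is None else now
    return (expires - now) / 86400
```

With this shape, a WooCommerce store returning a 500 error yields `ok == False` with `status == 500`, while a certificate with fewer than, say, 14 days left can trigger a renewal warning well before browsers start rejecting it.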

DNS Monitoring: Ensuring Domain Resolution

This technique monitors DNS resolution and propagation times, so that we find out quickly when a domain becomes unreachable due to misconfigurations, DNS poisoning attacks, and more.

Imagine a bank that has recently merged with another. As part of this process, DNS updates are required. However, an error in the A or CNAME records could block customers from accessing their online banking services (a mistake in the MX records, by contrast, would break email delivery).

DNS monitoring tools, which include alerts for unauthorized changes or resolution failures, would notify the team immediately about such issues.
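A minimal sketch of such a check in Python follows. It resolves a hostname through the system resolver and compares the answer with the addresses we expect, so it reflects the local resolver's view rather than authoritative propagation. The names `resolve_ips` and `check_dns`, the hostname, and the documentation-range IP addresses are all illustrative assumptions.

```python
import socket


def resolve_ips(hostname):
    """Resolve a hostname to its set of IP addresses via the system resolver."""
    infos = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    return {info[4][0] for info in infos}


def check_dns(hostname, expected_ips, resolve=resolve_ips):
    """Return (state, unexpected_ips) for one DNS check.

    state is "ok", "changed" (the answer contains addresses we did not
    expect), or "resolution_failed".
    """
    try:
        found = resolve(hostname)
    except socket.gaierror:
        return "resolution_failed", set()
    unexpected = found - set(expected_ips)
    return ("changed" if unexpected else "ok"), unexpected
```

In the bank scenario, a botched record update would surface either as `resolution_failed` or as `changed` with the unexpected address attached, which is the detail the team needs in the alert.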

TCP/UDP Monitoring: Keeping Watch on Key Ports

This technique checks the accessibility and performance of specific ports on a network. This ensures that services dependent on TCP protocols (HTTP, SMTP, FTP…) and UDP (video streaming, VoIP…) are operational.

For example, a hospital securely accesses medical records through port 443, but the intern (it’s always the intern) has reconfigured the firewall and accidentally blocked the port. A TCP/UDP monitor would detect this instantly with an alert, not later, through the doctors’ shouting.
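For the TCP side, the check can be as simple as attempting a connection, as in the Python sketch below. The function name `tcp_port_open` is hypothetical. UDP is connectionless, so a comparable UDP check needs a protocol-specific request and reply and is not covered here.

```python
import socket


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection performs the full TCP handshake, then we close.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, filtered by a firewall (timeout), or host unreachable.
        return False
```

In the hospital example, `tcp_port_open("records.example", 443)` would flip to `False` as soon as the firewall change took effect.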

This is where we see the importance of solutions that integrate IT infrastructure monitoring.

SNMP Monitoring: The Network’s Watchdog

Another powerful technique is monitoring through the Simple Network Management Protocol (SNMP), which keeps an eye on the network and alerts to any issues or unusual behavior. It works by collecting data from routers, switches, or IoT devices.

Is the office printer suddenly sending gigabytes of data to an unknown server? Sure, that’s “completely normal” (no, it’s definitely not). But SNMP monitoring would catch such anomalies immediately. Implementing this approach helps avoid major headaches—here’s a guide to setting up SNMP.

A robust uptime monitoring strategy must combine these techniques based on your organization’s operational and technical needs. This ensures you won’t be stuck in a situation where a ping test says “everything’s fine,” while your online transaction API is down—and no one knows.

The ultimate goal is to ensure compliance with Service Level Agreements (SLAs). Therefore, it’s worth diving deeper into this concept and related terms.

SLA, SLO, SLI, and Their Relationship to Uptime Monitoring

Although we work with machines, we ultimately serve people, and they require service guarantees considering how catastrophic every second of downtime can be.

This is where these key acronyms come into play, and why it’s essential to be familiar with them:

- SLA (Service Level Agreement): This defines the minimum service level we guarantee to a client. For example, ensuring that a website has 99.00% uptime. If this isn’t met, compensation may be required for the breach. Here’s an SLA guide to learn all the necessary details.

- SLO (Service Level Objective): While the SLA is the external commitment to clients, the SLO is our internal reliability goal. Naturally, the SLO should be more ambitious than the SLA, so that we meet the latter with a safety margin. For instance, if the SLA promises 99.00% uptime, we can set an SLO of 99.90%, triggering action if our uptime monitoring system indicates we’re falling short. This proactive approach protects SLA compliance while offering a valuable buffer to address issues.

- SLI (Service Level Indicator): This is the metric that measures whether we’re meeting our SLO. In the case of uptime, the SLI typically reflects the percentage of operational time.

How these concepts interconnect:

The SLI measures how we are doing against the SLO, and the SLO, being internal and stricter than the SLA, provides the margin that keeps us compliant with the SLA.
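To make the margin between SLA and SLO concrete, the following Python sketch converts an uptime target into an allowed downtime budget and classifies the current month against both targets. The 99.00% and 99.90% figures come from the example above; the 30-day period and the function names are assumptions made for illustration.

```python
def allowed_downtime_minutes(target_pct, period_days=30):
    """Downtime budget, in minutes, implied by an uptime target."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (100 - target_pct) / 100


def service_level_status(downtime_minutes, sla_pct=99.0, slo_pct=99.9,
                         period_days=30):
    """Classify measured downtime (the SLI) against the SLO and the SLA."""
    if downtime_minutes > allowed_downtime_minutes(sla_pct, period_days):
        return "sla_breached"
    if downtime_minutes > allowed_downtime_minutes(slo_pct, period_days):
        return "slo_breached"  # internal goal missed, SLA still intact
    return "ok"
```

Over 30 days, a 99.00% SLA allows 432 minutes of downtime, while a 99.90% SLO allows only about 43 minutes. The gap between the two is the buffer in which the team can react before the contractual commitment is at risk.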

How Uptime Monitoring Impacts SLAs

There’s a fascinating, award-winning documentary titled Man on Wire (2008). It recreates the incredible feat of French tightrope walker Philippe Petit, who, in 1974, illegally strung a wire between New York’s Twin Towers… and walked across it. Each step forward was a challenge, and even the slightest mistake or unexpected event could have meant the end. The walk was nerve-wracking: 45 minutes and eight crossings, some 400 meters above the ground.

Fascinating, seriously, but here’s the important part: when it comes to SLAs, we’re in a similar situation. Progress is slow and tough, scraping for every fraction of improvement in SLAs and SLOs to move those indicators upward. But falling short is easy. Just one incident of downtime can pull us off the wire.

Uptime monitoring acts as the safety net, verifying compliance and alerting us immediately to mitigate any fall. Without uptime monitoring:

- We will not be able to prove that we’ve met the promised uptime.

- We risk contractual penalties and compensation costs like those British Airways faced.

- And most importantly, we lose credibility with clients or partners, something as fragile as fine porcelain, easy to shatter and almost impossible to piece back together once broken.

Key Examples of Uptime and SLA Metrics

There’s no such thing as a perfect metric—only the most suitable ones for an organization based on its activity. However, some metrics are fundamental for uptime monitoring and SLA compliance, including the following:

| Indicator | How it is measured | Impact on SLA |
| --- | --- | --- |
| Uptime (%) | (Operating time / Total time) × 100 | Primary indicator for availability SLAs. |
| MTTR (Mean Time to Repair) | Average time to resolve incidents. | A high MTTR = Greater penalties for downtime. |
| Maximum Latency | Service response time (e.g., <500 ms). | If exceeded, it can be considered “functional downtime” by rendering a service unusable. |
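The first two indicators can be computed directly from a list of outage durations, as in this short Python sketch. It applies the uptime formula from the table; the function names and the sample data are hypothetical.

```python
from datetime import timedelta


def uptime_percent(period, outages):
    """(Operating time / Total time) x 100 for a reporting period."""
    downtime = sum(outages, timedelta())
    return (period - downtime) / period * 100


def mean_time_to_repair(outages):
    """Average outage duration; zero if there were no incidents."""
    if not outages:
        return timedelta(0)
    return sum(outages, timedelta()) / len(outages)


# Hypothetical month with two incidents of 20 and 40 minutes.
month = timedelta(days=30)
incidents = [timedelta(minutes=20), timedelta(minutes=40)]
monthly_uptime = uptime_percent(month, incidents)  # roughly 99.86
monthly_mttr = mean_time_to_repair(incidents)      # 30 minutes
```

Note that this month would meet a 99.00% SLA comfortably yet miss a 99.90% SLO, which is exactly the early warning the internal objective is meant to provide.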

For a deeper understanding of this topic, check out our Definitive Guide to SLA, SLO, and SLI.

Comparison of Uptime Monitoring Tools

When selecting the right solution, it’s essential to consider whether you simply need a basic alert system for a small website or a comprehensive command and control center to meet strict SLAs with clients.

The type of tool you choose will depend on this, so let’s explore some popular options:

Specialized Tools (Focused solely on uptime)

| Tool | Advantages | Disadvantages | Suitable for |
| --- | --- | --- | --- |
| Uptime Robot | – Free up to 50 monitors. – Alerts via email, SMS, and basic integrations. | – No advanced metrics (e.g., WUX), only basic ones (ping, HTTPS, etc.). | Personal projects and startups. |
| Uptime.com | – Basic and advanced monitoring, such as transactional (e.g., login flows). – Good reporting. | – Limited in some integrations, such as ticketing. | Companies with strict SLAs. |
| Pingdom | – Advanced monitoring (synthetic and real user). – Simple API. | – Somewhat more expensive for what it offers than other alternatives. | Online stores with global traffic. |

If we require a command and control center, we should consider integrated solutions that combine uptime monitoring with other essential metrics. This is especially important for large-scale organizations or those with a strong technology focus.

Let us explore some options.

Integrated Solutions (Combining Uptime with Other Metrics)

|

Tool |

Advantages |

Disadvantages |

Suitable for |

|

Better Stack |

– Customizable status pages. – Ideal for DevOps teams (REST APIs). |

– Few options for on-premise networks. – Becomes expensive quickly with multiple websites. |

Webmasters and tech startups with cloud infrastructure. |

|

ManageEngine |

– Integrated SNMP and network monitoring. – Machine learning-based alerts. |

– Steep learning curve. – Implementation is not very intuitive. |

Companies with hybrid networks. |

|

Datadog |

– 500+ integrations (AWS, Kubernetes, etc.). – Synthetic and real user monitoring (RUM). |

– The cost per host per month can increase easily. – Overkill for simple needs. |

Corporations with multicloud infrastructure. |

|

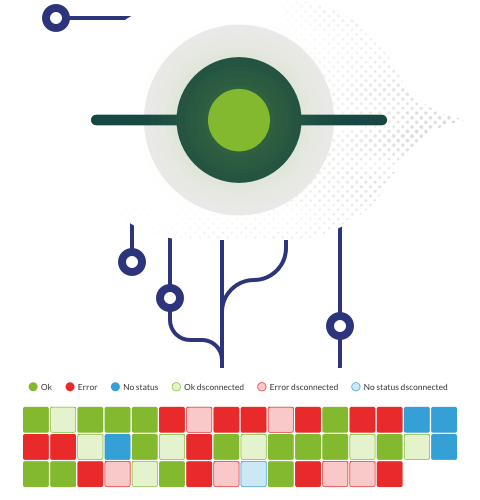

Pandora FMS |

–All-in-one: uptime, WUX, SNMP, log analysis, etc. – Scalable from SMEs to large enterprises. |

– Requires detailed initial configuration. |

Complex environments (e.g., network monitoring + WUX). |

As we can see, there are key differences between specialized uptime monitoring tools and integrated solutions like Pandora FMS. They can be summarized as follows:

| | Specialized Tools | Integrated Solutions (Pandora FMS) |
| --- | --- | --- |
| Scope | They check “if it’s online.” | They analyze “how it works” (e.g., API performance, bottlenecks…). |
| Integrations | Basic APIs. | They also connect with CRM, ERP, Business Intelligence tools, logs… |
| Cost | Inexpensive at first, but scale poorly. | Higher initial investment, but later savings by avoiding multiple tools. |
| Use cases | Personal blogs, landing pages… | Any organization: online stores, hospitals, banks, etc. |

In summary, a specialized uptime tool is like a thermometer for our infrastructure: it alerts us to a “fever” but does little beyond that, leaving the root cause unknown. Solutions like Pandora FMS act as a comprehensive diagnostic system, a “hospital” that reveals the causes, not just the symptoms, so that we can resolve any issue that could jeopardize SLAs.

The Limitations of Measuring Only Uptime: The Role of User Experience (UX/WUX)

An uptime of 99.95% may seem impressive, but imagine a medical center with that metric where every patient record takes 30 seconds to load. Or a partial error in an online store where the shopping cart fails to load.

This shows that uptime falls short in real-world scenarios, making user experience (UX) and web user experience (WUX) monitoring essential.

User experience is a broad, human-centric concept that goes beyond mere numbers, but it can be measured through techniques like:

- Synthetic Testing: Conducted with scripts that mimic user actions, such as booking a flight or making a purchase. These can be measured using features like Pandora FMS’s synthetic monitoring.

- Proactive Alerts: For example, measuring load times for those medical records and notifying the IT team before the angry support tickets start flooding in.
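A proactive alert of this kind can be sketched as a percentile check over recent load times, as in the Python snippet below. The 500 ms threshold echoes the latency example given earlier; the choice of the 95th percentile, the minimum sample count, and the function name `latency_alert` are assumptions made for illustration.

```python
import statistics


def latency_alert(samples_ms, threshold_ms=500, min_samples=20):
    """Return True when the 95th percentile of load times exceeds the threshold.

    Returns None when there are too few samples to judge.
    """
    if len(samples_ms) < min_samples:
        return None
    # n=20 splits the data into 5% steps; the last cut point is the p95.
    p95 = statistics.quantiles(samples_ms, n=20)[18]
    return p95 > threshold_ms
```

Using a percentile rather than the average keeps the alert focused on what the slowest users actually experience, so a minority of 30-second record loads is not hidden behind a healthy mean.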

Technical indicators are nothing compared to human experiences—which is why, if we want to succeed, uptime monitoring is the minimum requirement, but it’s only the first step in a much longer journey.

Criteria for Choosing the Best Uptime Monitoring Solution

As we have discussed, there are no perfect solutions, only those that best adapt to our needs and IT infrastructure. That’s why it all starts with an analysis of these two key areas:

- Needs. What SLAs do we need to meet? What do we need to guarantee to ensure a delightful user experience?

- Infrastructure. Which services must remain available to maintain that experience? Which of the solutions we’ve reviewed are compatible with our IT setup? How do they integrate with other monitoring systems? Can we manage everything from one place with a single tool like Pandora FMS (the ideal scenario)?

As we can see, the answer will differ for each organization, and best practices always begin by stepping into the shoes of those we serve (clients and end-users). Only then can we choose the technology that delivers the optimal experience.

In doing so, we’ll build a layered uptime monitoring system that ensures everything runs like clockwork—and that the support ticket system stays quiet (but not because it’s down and we failed to detect it!).
