Chatbot and Artificial Intelligence

Introduction

The Pandora ITSM chatbot consists of two services: a conversation (chat) server with its web client, and an optional conversational artificial intelligence engine.

The artificial intelligence engine can learn from the information entered into the Pandora ITSM knowledge base to provide quick answers to user questions. The chatbot can work in three modes:

- Automatic: It uses machine learning models to automatically provide answers to users based on prior learning.

- Manual: A Pandora ITSM operator responds to users through the chat interface.

- Mixed: Pandora ITSM assists the operator by showing possible answers to users' questions.

The architecture consists of three key elements:

- The chat client, with which the user interacts.

- The chat server, with which the operator interacts.

- The prediction engine, which offers answers based on users' questions.

Both the chat client and the chat server are part of the Pandora ITSM installation.

To add predictive capabilities to Hybrid Helpdesk, install and activate the prediction engine as described in the following sections.

Chat server installation

The chat server is installed by default when using the cloud installation method. To activate it, go to Setup → Setup → ChatBot and enable the Enable chat option:

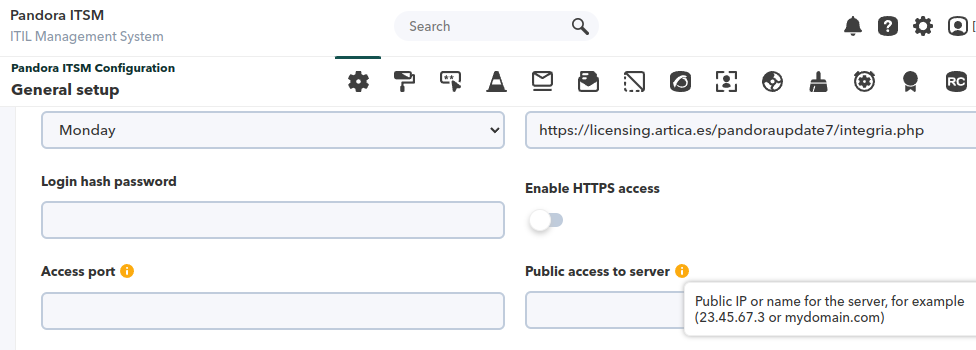

The web service URL must be set to the public URL of your Pandora ITSM installation, as configured in the main section of Setup.

If the chat server does not work as expected, repeat the steps described in Node.js installation.

If AI is enabled (see “AI Settings”), an AI options section with the Update KB model and Update conversational model buttons also appears.

HTTPS configuration for the ChatBot

Activate the Enable SSL option. By default, it is configured with predefined values.

Replace the default certificates with your own. Click Update to save the changes, then check that Check chat server is shown in green, which means the chat server is working properly.

Node.js installation

curl -sL https://rpm.nodesource.com/setup_12.x | sh
yum install -y nodejs
npm i -g pm2
cd /var/www/html/pandoraitsm/extras/chat_server
npm update
pm2 start server.js

To make the service start automatically every time the operating system boots, create the file /etc/systemd/system/integria-chat.service with the following content:

[Unit]
Description=Integria-Chat-Server
After=network.target

[Service]
Type=simple
ExecStart=/usr/bin/node server
Restart=always
# Consider creating a dedicated user for the chat server here:
User=root
Environment=NODE_ENV=production
WorkingDirectory=/var/www/html/pandoraitsm/extras/chat_server

[Install]
WantedBy=multi-user.target

Save the file and run:

systemctl daemon-reload

systemctl start integria-chat.service

systemctl enable integria-chat

Access to the chat server database must also be configured: edit the file /var/www/html/pandoraitsm/extras/chat_server/config/config.js and set the required database parameters. The default values must be changed if you have a custom installation.

Installation of the artificial intelligence engine

Run the following commands as root (superuser):

yum install python3 python36-Cython wget
wget http://xxxxxx/prediction_engine-latest.tgz
tar xvzf prediction_engine-latest.tgz
cd prediction_engine
./install.sh
service prediction_engine.service restart

This will start the service on port 6000/tcp.
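You can confirm that the engine is up by opening a TCP connection to that port. The following minimal Python sketch uses only the standard library. The helper name `engine_is_listening` is hypothetical. A successful connection only shows that some process accepts connections on port 6000. It does not prove that the prediction engine answers correctly.

```python
import socket


def engine_is_listening(host: str = "127.0.0.1", port: int = 6000, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable
        return False


if __name__ == "__main__":
    # 6000/tcp is the default prediction engine port
    state = "listening" if engine_is_listening() else "not reachable"
    print(f"Prediction engine on port 6000: {state}")
```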

AI Settings

Support → Chat → Channel manage menu.

The AI is configured separately in each channel.

- IA url: URL where the web service of the artificial intelligence engine listens. It must have the format http://DIR_IP.

- IA Port: 6000 by default. It should not be changed unless you have had to set up port forwarding somewhere in between.

- Initial response time: Seconds within which the AI will answer the user's first request.

- Response time between conversations: Response time between questions once the conversation has started.

- Progress bar timeout: Time the system gives the operator to choose one of the suggested answers before it automatically sends the best option.

- Certainty threshold: The certainty level the AI uses as a threshold when choosing an option from those offered. The value must be between 0 and 1. An initial value of 0.5 (50 %) is recommended; adjust it as necessary.
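This section does not describe how the engine scores candidate answers internally. The following Python sketch is a hedged illustration of how a certainty threshold and a progress bar timeout commonly combine in the automatic and mixed modes. It assumes each candidate answer carries a confidence score between 0 and 1. The class and function names are hypothetical and are not part of Pandora ITSM.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    """One possible answer and the engine's confidence in it (assumed 0-1 score)."""
    answer: str
    confidence: float


def pick_answer(candidates: List[Candidate], threshold: float = 0.5) -> Optional[str]:
    """Return the best answer if its confidence reaches the threshold, else None.

    None means no suggestion is trustworthy enough to send automatically,
    so the question is left to a human operator (hypothetical policy).
    """
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    if not candidates:
        return None
    best = max(candidates, key=lambda c: c.confidence)
    return best.answer if best.confidence >= threshold else None


def resolve_mixed_mode(candidates: List[Candidate], operator_choice: Optional[str],
                       threshold: float = 0.5) -> Optional[str]:
    """Mixed mode: use the operator's choice if one was made before the progress
    bar timed out; otherwise fall back to the best option above the threshold."""
    if operator_choice is not None:
        return operator_choice
    return pick_answer(candidates, threshold)


if __name__ == "__main__":
    options = [Candidate("Restart the service", 0.72), Candidate("Check the logs", 0.41)]
    print(pick_answer(options))       # Restart the service
    print(pick_answer(options, 0.8))  # None
```

Raising the threshold makes the bot answer on its own less often and hand more questions to operators. This is why starting at 0.5 and tuning it is a reasonable approach.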

From version 105 onwards, ChatGPT® (OpenAI) can be used as an alternative AI, with the following options:

- API Key: Token that authorizes the connection to https://api.openai.com/v1/chat/completions.

- Model: Selector to choose between gpt-4 (default value) and gpt-3.5-turbo.

- Max tokens: Maximum number of “tokens” (default 2000) that can be generated in the response.

- Context time: Period during which the context of the conversation is maintained. Each call must send the history of the messages sent by the user and by the AI assistant, plus a system message at the beginning (see Context). Default: 01:00.

- Context: Message added as the system message at the beginning of each request. It provides guidelines that affect how the model responds. The sentence “Always answer me in ${responseLanguage}, regardless of the language in which I write to you.” is appended to this context. Here, responseLanguage is the user's language if it is available among the channel languages; otherwise, the channel's default language is used. Default: “You are a Pandora ITSM assistant.”

- Temperature: Controls the randomness or creativity of the generated answers. It accepts values between 0 and 1. The closer to 0, the more predictable and coherent the answers; the closer to 1, the more creative they are. Default: 0.3.

- Avatar: Image displayed in the chat when interacting with ChatGPT®. Default: moustache1.png.

- Title: Title displayed in the chat when talking with ChatGPT®. Default: ChatBot.

- Subtitle: Subtitle displayed in the chat when chatting with ChatGPT®. Default: Default Bot.
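The options above map onto the fields of an OpenAI chat completions request (model, messages, max_tokens, temperature). The following Python sketch shows one way to assemble the request body from them. It illustrates the documented behavior and is not Pandora ITSM's actual code. The helper names are hypothetical, the language is passed as a plain string, and the 01:00 context time is assumed to mean one hour. The request is only built, not sent.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

API_URL = "https://api.openai.com/v1/chat/completions"


@dataclass
class Message:
    role: str  # "user" or "assistant", as in the chat completions API
    content: str
    sent_at: datetime


def response_language(user_lang: str, channel_langs: List[str], default_lang: str) -> str:
    """Use the user's language if the channel offers it, else the channel default."""
    return user_lang if user_lang in channel_langs else default_lang


def build_request(history: List[Message], now: datetime, *,
                  context: str = "You are a Pandora ITSM assistant.",
                  language: str = "en",
                  model: str = "gpt-4",
                  max_tokens: int = 2000,
                  temperature: float = 0.3,
                  context_time: timedelta = timedelta(hours=1)) -> dict:
    """Assemble the JSON body for one chat completions call (hypothetical helper)."""
    # The system message is the channel Context plus the fixed language sentence
    system_text = (f"{context} Always answer me in {language}, "
                   "regardless of the language in which I write to you.")
    messages = [{"role": "system", "content": system_text}]
    # Resend only the user/assistant messages that fall inside the context window
    cutoff = now - context_time
    messages += [{"role": m.role, "content": m.content}
                 for m in history if m.sent_at >= cutoff]
    return {"model": model, "messages": messages,
            "max_tokens": max_tokens, "temperature": temperature}


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0)
    history = [
        Message("user", "Earlier question", now - timedelta(hours=2)),  # outside the window
        Message("user", "How do I reset my password?", now - timedelta(minutes=5)),
    ]
    lang = response_language("es", ["en", "es"], "en")
    print(json.dumps(build_request(history, now, language=lang), indent=2))
```

To send the request, POST the body as JSON to API_URL with an `Authorization: Bearer <API key>` header.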

Backup of custom learning models

- Before carrying out any customization, it is recommended to back up the current models:

cp -r /opt/prediction_engine/models /opt/prediction_engine/models.bak

- To restore models from the backup, run:

cp -rf /opt/prediction_engine/models.bak/* /opt/prediction_engine/models/
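If you script the backup (for example, before an unattended retraining), you can do the same copy operations from Python with `shutil.copytree`. This is a minimal sketch using the paths above. The function names are hypothetical, and `dirs_exist_ok` requires Python 3.8 or later.

```python
import shutil
from pathlib import Path

MODELS = Path("/opt/prediction_engine/models")
BACKUP = Path("/opt/prediction_engine/models.bak")


def backup_models(models: Path = MODELS, backup: Path = BACKUP) -> None:
    """Copy the whole models directory to the backup location."""
    if backup.exists():
        # cp -r would nest the copy inside the existing backup; refuse instead
        raise FileExistsError(f"backup already exists: {backup}")
    shutil.copytree(models, backup)


def restore_models(models: Path = MODELS, backup: Path = BACKUP) -> None:
    """Copy the backup over the current models, overwriting existing files."""
    # dirs_exist_ok=True (Python 3.8+) lets copytree write into an existing directory
    shutil.copytree(backup, models, dirs_exist_ok=True)
```

`cp -r` would copy into models.bak/models if the backup directory already existed. `backup_models` stops with an error instead, so an earlier good backup is never mixed with newer files.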

Questions and Answers (Knowledge Base)

This type of modification is advanced. By default, use the Update KB Model and Update Conversational Model buttons in the Pandora ITSM Web Console.

The question and answer model is generated from a CSV file with the following format:

Language code;Question;Answer

Example:

"en";"question 1";"answer 1"
"en";"question 2";"answer 2"
…

The CSV file can be generated automatically from the Pandora ITSM database with the following commands:

rm -f /opt/prediction_engine/data/integria_kb.zip /opt/prediction_engine/data/integria_kb.csv 2>/dev/null
echo "SELECT id_language AS lang, title as question, data as answer FROM integria.tkb_data INTO OUTFILE '/opt/prediction_engine/data/integria_kb.csv' FIELDS TERMINATED BY ';' ENCLOSED BY '\"' LINES TERMINATED BY '\n';" | mysql -u integria -p integria && zip -j /opt/prediction_engine/data/integria_kb.zip /opt/prediction_engine/data/integria_kb.csv
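If you prepare the knowledge base CSV outside the database (for example, from a spreadsheet), Python's standard library can write it in the same `"lang";"question";"answer"` format and package it the way `zip -j` does. This is a minimal sketch. It assumes the engine accepts standard CSV quoting and only needs integria_kb.csv at the root of integria_kb.zip. The helper names are hypothetical.

```python
import csv
import io
import zipfile
from pathlib import Path
from typing import Iterable, List, TextIO, Tuple

Row = Tuple[str, str, str]  # (language code, question, answer)


def write_kb_csv(rows: Iterable[Row], out: TextIO) -> None:
    """Write rows as "lang";"question";"answer" lines, every field quoted."""
    writer = csv.writer(out, delimiter=";", quotechar='"',
                        quoting=csv.QUOTE_ALL, lineterminator="\n")
    writer.writerows(rows)


def read_kb_csv(src: TextIO) -> List[Row]:
    """Parse the same format back into (lang, question, answer) tuples."""
    reader = csv.reader(src, delimiter=";", quotechar='"')
    return [tuple(row) for row in reader if row]


def pack_kb(csv_path: Path, zip_path: Path) -> None:
    """Store the CSV at the root of the archive, as zip -j does."""
    # Mode "w" replaces any previous archive instead of adding to it
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.write(csv_path, arcname=csv_path.name)  # arcname drops the directory part


if __name__ == "__main__":
    buf = io.StringIO()
    write_kb_csv([("en", "question 1", "answer 1"), ("en", "question 2", "answer 2")], buf)
    print(buf.getvalue(), end="")  # the two example lines, in the format shown above
```

The two methods can encode quotes differently. Python's `csv` module escapes a double quote inside a field by doubling it. MySQL's `INTO OUTFILE` escapes it with a backslash by default. As a result, fields that contain quotes may not be byte-for-byte identical.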

To update the models:

cd /opt/prediction_engine/src
python kb_train.pyc

Dialogues (conversational)

Menu Support → Chat → Browse → Data management.

In Pandora ITSM Web Console you will be able to add conversational items classified by categories and languages.

To search for a specific conversational item, enter a keyword found in the title and/or answer and select the category and language it belongs to.

Advanced configuration

This type of modification is advanced. By default, use the Update KB Model and Update Conversational Model buttons in the Pandora ITSM Web Console.

The conversational model is generated from YAML files with the following structure:

categories:
- category 1
- category 2
- ...
conversations:
- - text 1
  - text 2
  - ...
- - text 1
  - text 2
  - text 3
  - ...
…

The .yaml files (the file name does not matter) must be placed in the directory:

/opt/prediction_engine/data/chat_xx

where xx is the ISO code of the language to update (es for Spanish, en for English).
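You can also generate conversation files from a script. The following Python sketch writes the categories/conversations structure using only the standard library (no YAML package). It quotes each text with `json.dumps`, because YAML parsers generally accept JSON strings as double-quoted scalars. The helper names and the greetings example are hypothetical.

```python
import json
from pathlib import Path
from typing import List

DATA_DIR = Path("/opt/prediction_engine/data")


def _quote(text: str) -> str:
    """Quote a text as a double-quoted scalar (JSON string syntax)."""
    return json.dumps(text, ensure_ascii=False)


def to_corpus_yaml(categories: List[str], conversations: List[List[str]]) -> str:
    """Render categories and conversations in the structure described above."""
    lines = ["categories:"]
    lines += [f"- {_quote(c)}" for c in categories]
    lines.append("conversations:")
    for conv in conversations:
        if not conv:
            continue
        lines.append(f"- - {_quote(conv[0])}")         # first text opens a conversation
        lines += [f"  - {_quote(t)}" for t in conv[1:]]  # following texts continue it
    return "\n".join(lines) + "\n"


def write_corpus(lang: str, name: str, text: str, data_dir: Path = DATA_DIR) -> Path:
    """Save the YAML as data/chat_<lang>/<name>.yaml, e.g. chat_en for English."""
    target = data_dir / f"chat_{lang}"
    target.mkdir(parents=True, exist_ok=True)
    path = target / f"{name}.yaml"
    path.write_text(text, encoding="utf-8")
    return path


if __name__ == "__main__":
    print(to_corpus_yaml(["greetings"], [["Hello", "Hi, how can I help you?"]]), end="")
```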

To update the models run:

cd /opt/prediction_engine/src
python chat_train.pyc

How to use the chat

The Pandora ITSM chatbot uses channels to define different places where conversations take place between operators (Pandora ITSM users with special permissions to manage conversations) and normal users (regular Pandora ITSM users, or anonymous visitors if the chat is used from outside Pandora ITSM).

Manage a channel

Add operators to the channel

The channel must have at least one operator, who will answer the users' chat requests. You will be able to add any user with chat operator permissions and assign them a description, an avatar (different from the one in their user file) and the languages in which they can answer chats.

To add a user, click Edit users in the corresponding channel and then click Add User.

Use the screen that opens to define the operator.

Once the operator has been added, other users will be able to start chats.

Channel operators

In order for operators to receive audible notifications when users open a chat, they must be on the chat control screen. It is accessed through the menu Support → Chat → View chat.

The operator can then join one of those chats and interact with the other party.

If the chat is opened by an internal user, the screen shows which user it is.

Using the chat outside of Pandora ITSM

Support → Chat → Channel Manage → Generate Bundle menu, in the corresponding channel.

To use the chat outside Pandora ITSM interface, for example on a web page or in another application, click the star icon to show the snippet of JavaScript code to be embedded into the application.

Note that the URL included in the code is the one defined as the public URL in the chat server options. This URL must be reachable from wherever users access the chat, which in most cases means it must be a public Internet URL.
