Event monitoring

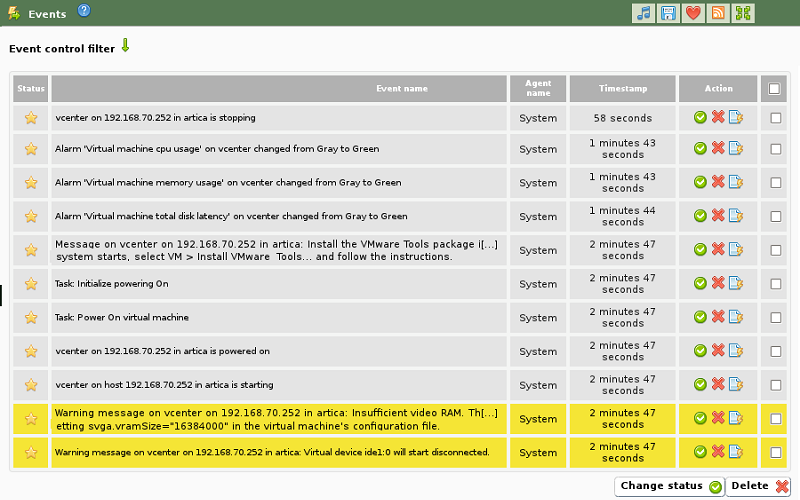

This feature copies the events present in the VMware® vCenter into the Pandora FMS event list.

These events become part of the regular Pandora FMS event flow and are automatically associated with the Agent that represents the vCenter they come from (provided that this Agent exists when the event is created).
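
The association rule can be pictured with a minimal Python sketch. It assumes a hypothetical in-memory index of Agents keyed by the name of the vCenter each one represents; `VCenterEvent`, `PandoraEvent` and `copy_event` are illustrative names only and are not part of the Pandora FMS API.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class VCenterEvent:
    """Hypothetical minimal view of an event read from vCenter."""
    vcenter_name: str
    message: str
    severity: str


@dataclass
class PandoraEvent:
    """Hypothetical minimal view of the resulting Pandora FMS event."""
    message: str
    severity: str
    agent_id: Optional[int]  # None if no Agent represented the vCenter at creation time


def copy_event(event: VCenterEvent, agents_by_vcenter: Dict[str, int]) -> PandoraEvent:
    # The Agent is looked up once, when the event is created; in this sketch an
    # Agent added afterwards does not change events that were already copied.
    agent_id = agents_by_vcenter.get(event.vcenter_name)
    return PandoraEvent(message=event.message, severity=event.severity, agent_id=agent_id)


agents = {"vcenter-01": 42}  # hypothetical vCenter name -> Agent ID
linked = copy_event(VCenterEvent("vcenter-01", "vCenter started", "Informational"), agents)
orphan = copy_event(VCenterEvent("vcenter-02", "vCenter started", "Informational"), agents)
print(linked.agent_id, orphan.agent_id)  # 42 None
```

The event itself is always copied; only the link to an Agent depends on whether that Agent already exists.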

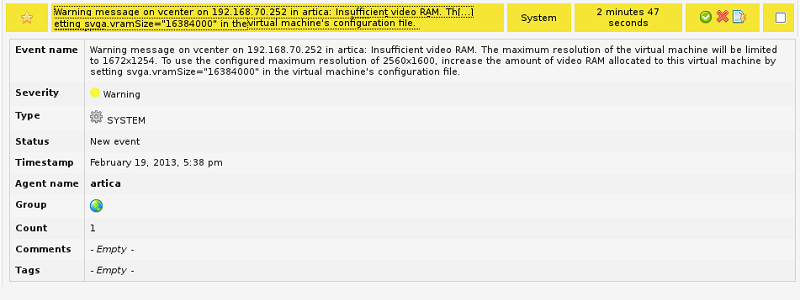

When events are copied, the information and severity that VMware® assigns when it creates each event are preserved, so events with a critical, warning or informational severity level keep that same level in Pandora FMS. The accompanying image shows an example of the detailed information of an event copied from VMware to Pandora FMS.
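
Preserving the severity amounts to a fixed lookup. The sketch below assumes that vCenter reports each event with one of the vSphere event categories `info`, `warning` or `error`, and that these correspond to the Informational, Warning and Critical levels listed in the event table; both the mapping and the `pandora_severity` helper are assumptions for illustration, not the integration's actual code.

```python
# Assumed mapping from vSphere event categories to Pandora FMS severity labels.
SEVERITY_BY_CATEGORY = {
    "info": "Informational",
    "warning": "Warning",
    "error": "Critical",
}


def pandora_severity(vsphere_category: str) -> str:
    """Return the Pandora FMS severity that keeps the level set by VMware."""
    try:
        return SEVERITY_BY_CATEGORY[vsphere_category]
    except KeyError:
        # The text only covers critical, warning and informational events;
        # anything else (for example "user") is left to the caller to decide.
        raise ValueError(f"no mapping defined for category {vsphere_category!r}") from None


print(pandora_severity("error"))  # Critical
```

Raising an error for unmapped categories keeps the sketch from silently inventing a level the documentation does not describe.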

Once the events are in Pandora FMS, you can perform every action available for event management, such as creating alerts, configuring filters or opening incidents.

Event table

| Event | Severity | Event type | Group |
|---|---|---|---|
| An account was created on host {host.name} | Informational | System | All |
| Account {account} was removed on host {host.name} | Informational | System | All |
| An account was updated on host {host.name} | Informational | System | All |
| The default password for the root user on the host {host.name} has not been changed | Informational | System | All |
| Alarm '{alarm.name}' on {entity.name} triggered an action | Informational | System | All |
| Created alarm '{alarm.name}' on {entity.name} | Informational | System | All |
| Alarm '{alarm.name}' on {entity.name} sent email to {to} | Informational | System | All |
| Alarm '{alarm.name}' on {entity.name} cannot send email to {to} | Critical | System | All |
| Reconfigured alarm '{alarm.name}' on {entity.name} | Informational | System | All |
| Removed alarm '{alarm.name}' on {entity.name} | Informational | System | All |
| Alarm '{alarm.name}' on {entity.name} ran script {script} | Informational | System | All |
| Alarm '{alarm.name}' on {entity.name} did not complete script: {reason.msg} | Critical | System | All |
| Alarm '{alarm.name}': an SNMP trap for entity {entity.name} was sent | Informational | System | All |
| Alarm '{alarm.name}' on entity {entity.name} did not send SNMP trap: {reason.msg} | Critical | System | All |
| Alarm '{alarm.name}' on {entity.name} changed from {[email protected]} to {[email protected]} | Informational | System | All |
| All running virtual machines are licensed | Informational | System | All |
| User cannot logon since the user is already logged on | Informational | System | All |
| Cannot login {userName}@{ipAddress} | Critical | System | All |
| The operation performed on host {host.name} in {datacenter.name} was canceled | Informational | System | All |
| Changed ownership of file name {filename} from {oldOwner} to {newOwner} on {host.name} in {datacenter.name}. | Informational | System | All |
| Cannot change ownership of file name {filename} from {owner} to {attemptedOwner} on {host.name} in {datacenter.name}. | Critical | System | All |
| Checked cluster for compliance | Informational | System | All |
| Created cluster {computeResource.name} in {datacenter.name} | Informational | System | All |
| Removed cluster {computeResource.name} in datacenter {datacenter.name} | Informational | System | All |
| Insufficient capacity in cluster {computeResource.name} to satisfy resource configuration in {datacenter.name} | Critical | System | All |
| Reconfigured cluster {computeResource.name} in datacenter {datacenter.name} | Informational | System | All |
| Configuration status on cluster {computeResource.name} changed from {[email protected]} to {[email protected]} in {datacenter.name} | Informational | System | All |
| Created new custom field definition {name} | Informational | System | All |
| Removed field definition {name} | Informational | System | All |
| Renamed field definition from {name} to {newName} | Informational | System | All |
| Changed custom field {name} on {entity.name} in {datacenter.name} to {value} | Informational | System | All |
| Cannot complete customization of VM {vm.name}. See customization log at {logLocation} on the guest OS for details. | Informational | System | All |
| An error occurred while setting up Linux identity. See log file '{logLocation}' on guest OS for details. | Critical | System | All |
| An error occurred while setting up network properties of the guest OS. See the log file {logLocation} in the guest OS for details. | Critical | System | All |
| Started customization of VM {vm.name}. Customization log located at {logLocation} in the guest OS. | Informational | System | All |
| Customization of VM {vm.name} succeeded. Customization log located at {logLocation} in the guest OS. | Informational | System | All |
| The version of Sysprep {sysprepVersion} provided for customizing VM {vm.name} does not match the version of guest OS {systemVersion}. See the log file {logLocation} in the guest OS for more information. | Critical | System | All |
| An error occurred while customizing VM {vm.name}. For details reference the log file {logLocation} in the guest OS. | Critical | System | All |
| dvPort group {net.name} in {datacenter.name} was added to switch {dvs.name}. | Informational | System | All |
| dvPort group {net.name} in {datacenter.name} was deleted. | Informational | System | All |
| | Informational | System | All |
| dvPort group {net.name} in {datacenter.name} was reconfigured. | Informational | System | All |
| dvPort group {oldName} in {datacenter.name} was renamed to {newName} | Informational | System | All |
| HA admission control disabled on cluster {computeResource.name} in {datacenter.name} | Informational | System | All |
| HA admission control enabled on cluster {computeResource.name} in {datacenter.name} | Informational | System | All |
| Re-established contact with a primary host in this HA cluster | Informational | System | All |
| Unable to contact a primary HA agent in cluster {computeResource.name} in {datacenter.name} | Critical | System | All |
| All hosts in the HA cluster {computeResource.name} in {datacenter.name} were isolated from the network. Check the network configuration for proper network redundancy in the management network. | Critical | System | All |
| HA disabled on cluster {computeResource.name} in {datacenter.name} | Informational | System | All |
| HA enabled on cluster {computeResource.name} in {datacenter.name} | Informational | System | All |
| A possible host failure has been detected by HA on {failedHost.name} in cluster {computeResource.name} in {datacenter.name} | Critical | System | All |
| Host {isolatedHost.name} has been isolated from cluster {computeResource.name} in {datacenter.name} | Warning | System | All |
| Created datacenter {datacenter.name} in folder {parent.name} | Informational | System | All |
| Renamed datacenter from {oldName} to {newName} | Informational | System | All |
| Datastore {datastore.name} increased in capacity from {oldCapacity} bytes to {newCapacity} bytes in {datacenter.name} | Informational | System | All |
| Removed unconfigured datastore {datastore.name} | Informational | System | All |
| Discovered datastore {datastore.name} on {host.name} in {datacenter.name} | Informational | System | All |
| Multiple datastores named {datastore} detected on host {host.name} in {datacenter.name} | Critical | System | All |
| <internal> | Informational | System | All |
| File or directory {sourceFile} copied from {sourceDatastore.name} to {datastore.name} as {targetFile} | Informational | System | All |
| File or directory {targetFile} deleted from {datastore.name} | Informational | System | All |
| File or directory {sourceFile} moved from {sourceDatastore.name} to {datastore.name} as {targetFile} | Informational | System | All |
| Reconfigured Storage I/O Control on datastore {datastore.name} | Informational | System | All |
| Configured datastore principal {datastorePrincipal} on host {host.name} in {datacenter.name} | Informational | System | All |
| Removed datastore {datastore.name} from {host.name} in {datacenter.name} | Informational | System | All |
| Renamed datastore from {oldName} to {newName} in {datacenter.name} | Informational | System | All |
| Renamed datastore from {oldName} to {newName} in {datacenter.name} | Informational | System | All |
| Disabled DRS on cluster {computeResource.name} in datacenter {datacenter.name} | Informational | System | All |
| Enabled DRS on {computeResource.name} with automation level {behavior} in {datacenter.name} | Informational | System | All |
| DRS put {host.name} into standby mode | Informational | System | All |
| DRS is putting {host.name} into standby mode | Informational | System | All |
| DRS cannot move {host.name} out of standby mode | Critical | System | All |
| DRS moved {host.name} out of standby mode | Informational | System | All |
| DRS is moving {host.name} out of standby mode | Informational | System | All |
| DRS invocation not completed | Critical | System | All |
| DRS has recovered from the failure | Informational | System | All |
| Unable to apply DRS resource settings on host {host.name} in {datacenter.name}. {reason.msg}. This can significantly reduce the effectiveness of DRS. | Critical | System | All |
| Resource configuration specification returns to synchronization from previous failure on host '{host.name}' in {datacenter.name} | Informational | System | All |
| {vm.name} on {host.name} in {datacenter.name} is now compliant with DRS VM-Host affinity rules | Informational | System | All |
| {vm.name} on {host.name} in {datacenter.name} is violating a DRS VM-Host affinity rule | Informational | System | All |
| DRS migrated {vm.name} from {sourceHost.name} to {host.name} in cluster {computeResource.name} in {datacenter.name} | Informational | System | All |
| DRS powered On {vm.name} on {host.name} in {datacenter.name} | Informational | System | All |
| Virtual machine {macAddress} on host {host.name} has a duplicate IP {duplicateIP} | Informational | System | All |
| A vNetwork Distributed Switch {dvs.name} was created in {datacenter.name}. | Informational | System | All |
| vNetwork Distributed Switch {dvs.name} in {datacenter.name} was deleted. | Informational | System | All |
| vNetwork Distributed Switch event | Informational | System | All |
| The vNetwork Distributed Switch {dvs.name} configuration on the host was synchronized with that of the vCenter Server. | Informational | System | All |
| The host {hostJoined.name} joined the vNetwork Distributed Switch {dvs.name} in {datacenter.name}. | Informational | System | All |
| The host {hostLeft.name} left the vNetwork Distributed Switch {dvs.name} in {datacenter.name}. | Informational | System | All |
| The host {hostMember.name} changed status on the vNetwork Distributed Switch {dvs.name} in {datacenter.name}. | Informational | System | All |
| The vNetwork Distributed Switch {dvs.name} configuration on the host differed from that of the vCenter Server. | Warning | System | All |
| vNetwork Distributed Switch {srcDvs.name} was merged into {dstDvs.name} in {datacenter.name}. | Informational | System | All |
| dvPort {portKey} was blocked in the vNetwork Distributed Switch {dvs.name} in {datacenter.name}. | Informational | System | All |
| The port {portKey} was connected in the vNetwork Distributed Switch {dvs.name} in {datacenter.name} | Informational | System | All |
| New ports were created in the vNetwork Distributed Switch {dvs.name} in {datacenter.name}. | Informational | System | All |
| Deleted ports in the vNetwork Distributed Switch {dvs.name} in {datacenter.name}. | Informational | System | All |
| The dvPort {portKey} was disconnected in the vNetwork Distributed Switch {dvs.name} in {datacenter.name}. | Informational | System | All |
| dvPort {portKey} entered passthrough mode in the vNetwork Distributed Switch {dvs.name} in {datacenter.name}. | Informational | System | All |
| dvPort {portKey} exited passthrough mode in the vNetwork Distributed Switch {dvs.name} in {datacenter.name}. | Informational | System | All |
| dvPort {portKey} was moved into the dvPort group {portgroupName} in {datacenter.name}. | Informational | System | All |
| dvPort {portKey} was moved out of the dvPort group {portgroupName} in {datacenter.name}. | Informational | System | All |
| The port {portKey} link was down in the vNetwork Distributed Switch {dvs.name} in {datacenter.name} | Informational | System | All |
| The port {portKey} link was up in the vNetwork Distributed Switch {dvs.name} in {datacenter.name} | Informational | System | All |
| Reconfigured ports in the vNetwork Distributed Switch {dvs.name} in {datacenter.name}. | Informational | System | All |
| dvPort {portKey} was unblocked in the vNetwork Distributed Switch {dvs.name} in {datacenter.name}. | Informational | System | All |
| The vNetwork Distributed Switch {dvs.name} in {datacenter.name} was reconfigured. | Informational | System | All |
| The vNetwork Distributed Switch {oldName} in {datacenter.name} was renamed to {newName}. | Informational | System | All |
| An upgrade for the vNetwork Distributed Switch {dvs.name} in datacenter {datacenter.name} is available. | Informational | System | All |
| An upgrade for the vNetwork Distributed Switch {dvs.name} in datacenter {datacenter.name} is in progress. | Informational | System | All |
| Cannot complete an upgrade for the vNetwork Distributed Switch {dvs.name} in datacenter {datacenter.name} | Informational | System | All |
| vNetwork Distributed Switch {dvs.name} in datacenter {datacenter.name} was upgraded. | Informational | System | All |
| Host {host.name} in {datacenter.name} has entered maintenance mode | Informational | System | All |
| The host {host.name} is in standby mode | Informational | System | All |
| Host {host.name} in {datacenter.name} has started to enter maintenance mode | Informational | System | All |
| The host {host.name} is entering standby mode | Informational | System | All |
| {message} | Critical | System | All |
| Host {host.name} in {datacenter.name} has exited maintenance mode | Informational | System | All |
| The host {host.name} could not exit standby mode | Critical | System | All |
| The host {host.name} is no longer in standby mode | Informational | System | All |
| The host {host.name} is exiting standby mode | Informational | System | All |
| Sufficient resources are available to satisfy HA failover level in cluster {computeResource.name} in {datacenter.name} | Informational | System | All |
| General event: {message} | Informational | System | All |
| Error detected on {host.name} in {datacenter.name}: {message} | Critical | System | All |
| Issue detected on {host.name} in {datacenter.name}: {message} | Informational | System | All |
| Issue detected on {host.name} in {datacenter.name}: {message} | Warning | System | All |
| User logged event: {message} | Informational | System | All |
| Error detected for {vm.name} on {host.name} in {datacenter.name}: {message} | Critical | System | All |
| Issue detected for {vm.name} on {host.name} in {datacenter.name}: {message} | Informational | System | All |
| Issue detected for {vm.name} on {host.name} in {datacenter.name}: {message} | Warning | System | All |
| The vNetwork Distributed Switch corresponding to the proxy switches {switchUuid} on the host {host.name} does not exist in vCenter Server or does not contain this host. | Informational | System | All |
| A ghost proxy switch {switchUuid} on the host {host.name} was resolved. | Informational | System | All |
| The message changed: {message} | Informational | System | All |
| {componentName} status changed from {oldStatus} to {newStatus} | Informational | System | All |
| Cannot add host {hostname} to datacenter {datacenter.name} | Critical | System | All |
| Added host {host.name} to datacenter {datacenter.name} | Informational | System | All |
| Administrator access to the host {host.name} is disabled | Warning | System | All |
| Administrator access to the host {host.name} has been restored | Warning | System | All |
| Cannot connect {host.name} in {datacenter.name}: cannot configure management account | Critical | System | All |
| Cannot connect {host.name} in {datacenter.name}: already managed by {serverName} | Critical | System | All |
| Cannot connect host {host.name} in {datacenter.name} : server agent is not responding | Critical | System | All |
| Cannot connect {host.name} in {datacenter.name}: incorrect user name or password | Critical | System | All |
| Cannot connect {host.name} in {datacenter.name}: incompatible version | Critical | System | All |
| Cannot connect host {host.name} in {datacenter.name}. Did not install or upgrade vCenter agent service. | Critical | System | All |
| Cannot connect {host.name} in {datacenter.name}: error connecting to host | Critical | System | All |
| Cannot connect {host.name} in {datacenter.name}: network error | Critical | System | All |
| Cannot connect host {host.name} in {datacenter.name}: account has insufficient privileges | Critical | System | All |
| Cannot connect host {host.name} in {datacenter.name} | Critical | System | All |
| Cannot connect {host.name} in {datacenter.name}: not enough CPU licenses | Critical | System | All |
| Cannot connect {host.name} in {datacenter.name}: incorrect host name | Critical | System | All |
| Cannot connect {host.name} in {datacenter.name}: time-out waiting for host response | Critical | System | All |
| Host {host.name} checked for compliance. | Informational | System | All |
| Host {host.name} is in compliance with the attached profile | Informational | System | All |
| Host configuration changes applied. | Informational | System | All |
| Connected to {host.name} in {datacenter.name} | Informational | System | All |
| Host {host.name} in {datacenter.name} is not responding | Critical | System | All |
| dvPort connected to host {host.name} in {datacenter.name} changed status | Informational | System | All |
| HA agent disabled on {host.name} in cluster {computeResource.name} in {datacenter.name} | Informational | System | All |
| HA is being disabled on {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} | Informational | System | All |
| HA agent enabled on {host.name} in cluster {computeResource.name} in {datacenter.name} | Informational | System | All |
| Enabling HA agent on {host.name} in cluster {computeResource.name} in {datacenter.name} | Warning | System | All |
| HA agent on {host.name} in cluster {computeResource.name} in {datacenter.name} has an error {message}: {[email protected]} | Critical | System | All |
| HA agent on host {host.name} in cluster {computeResource.name} in {datacenter.name} is configured correctly | Informational | System | All |
| Disconnected from {host.name} in {datacenter.name}. Reason: {[email protected]} | Informational | System | All |
| Cannot restore some administrator permissions to the host {host.name} | Critical | System | All |
| Host {host.name} has the following extra networks not used by other hosts for HA communication:{ips}. Consider using HA advanced option das.allowNetwork to control network usage | Critical | System | All |
| Cannot complete command 'hostname -s' on host {host.name} or returned incorrect name format | Critical | System | All |
| Maximum ({capacity}) number of hosts allowed for this edition of vCenter Server has been reached | Critical | System | All |
| The virtual machine inventory file on host {host.name} is damaged or unreadable. | Informational | System | All |
| IP address of the host {host.name} changed from {oldIP} to {newIP} | Informational | System | All |
| Configuration of host IP address is inconsistent on host {host.name}: address resolved to {ipAddress} and {ipAddress2} | Critical | System | All |
| Cannot resolve IP address to short name on host {host.name} | Critical | System | All |
| Host {host.name} could not reach isolation address: {isolationIp} | Critical | System | All |
| A host license for {host.name} has expired | Critical | System | All |
| Host {host.name} does not have the following networks used by other hosts for HA communication:{ips}. Consider using HA advanced option das.allowNetwork to control network usage | Critical | System | All |
| Host monitoring state in {computeResource.name} in {datacenter.name} changed to {[email protected]} | Informational | System | All |
| Host {host.name} currently has no available networks for HA Communication. The following networks are currently used by HA: {ips} | Critical | System | All |
| Host {host.name} has no port groups enabled for HA communication. | Critical | System | All |
| Host {host.name} currently has no management network redundancy | Critical | System | All |
| Host {host.name} is not in compliance with the attached profile | Critical | System | All |
| Host {host.name} is not a cluster member in {datacenter.name} | Critical | System | All |
| Insufficient capacity in host {computeResource.name} to satisfy resource configuration in {datacenter.name} | Critical | System | All |
| Primary agent {primaryAgent} was not specified as a short name to host {host.name} | Critical | System | All |
| Profile is applied on the host {host.name} | Informational | System | All |
| Cannot reconnect to {host.name} in {datacenter.name} | Critical | System | All |
| Removed host {host.name} in {datacenter.name} | Informational | System | All |
| Host names {shortName} and {shortName2} both resolved to the same IP address. Check the host's network configuration and DNS entries | Critical | System | All |
| Cannot resolve short name {shortName} to IP address on host {host.name} | Critical | System | All |
| Shut down of {host.name} in {datacenter.name}: {reason} | Informational | System | All |
| Configuration status on host {computeResource.name} changed from {[email protected]} to {[email protected]} in {datacenter.name} | Informational | System | All |
| Cannot synchronize host {host.name}. {reason.msg} | Critical | System | All |
| Cannot install or upgrade vCenter agent service on {host.name} in {datacenter.name} | Critical | System | All |
| The userworld swap is not enabled on the host {host.name} | Warning | System | All |
| Host {host.name} vNIC {vnic.vnic} was reconfigured to use dvPort {vnic.port.portKey} with port level configuration, which might be different from the dvPort group. | Informational | System | All |
| WWNs are changed for {host.name} | Warning | System | All |
| The WWN ({wwn}) of {host.name} conflicts with the currently registered WWN | Critical | System | All |
| Host {host.name} did not provide the information needed to acquire the correct set of licenses | Critical | System | All |
| {message} | Informational | System | All |
| Insufficient resources to satisfy HA failover level on cluster {computeResource.name} in {datacenter.name} | Critical | System | All |
| The license edition '{feature}' is invalid | Critical | System | All |
| License {feature.featureName} has expired | Critical | System | All |
| License inventory is not compliant. Licenses are overused | Critical | System | All |
| Unable to acquire licenses due to a restriction in the option file on the license server. | Critical | System | All |
| License server {licenseServer} is available | Informational | System | All |
| License server {licenseServer} is unavailable | Critical | System | All |
| Created local datastore {datastore.name} on {host.name} in {datacenter.name} | Informational | System | All |
| The Local Tech Support Mode for the host {host.name} has been enabled | Informational | System | All |
| Datastore {datastore} which is configured to back the locker does not exist | Warning | System | All |
| Locker was reconfigured from {oldDatastore} to {newDatastore} datastore | Informational | System | All |
| Unable to migrate {vm.name} from {host.name} in {datacenter.name}: {fault.msg} | Critical | System | All |
| Unable to migrate {vm.name} from {host.name} to {dstHost.name} in {datacenter.name}: {fault.msg} | Critical | System | All |
| Migration of {vm.name} from {host.name} to {dstHost.name} in {datacenter.name}: {fault.msg} | Warning | System | All |
| Cannot migrate {vm.name} from {host.name} to {dstHost.name} and resource pool {dstPool.name} in {datacenter.name}: {fault.msg} | Critical | System | All |
| Migration of {vm.name} from {host.name} to {dstHost.name} and resource pool {dstPool.name} in {datacenter.name}: {fault.msg} | Warning | System | All |
| Migration of {vm.name} from {host.name} in {datacenter.name}: {fault.msg} | Warning | System | All |
| Created NAS datastore {datastore.name} on {host.name} in {datacenter.name} | Informational | System | All |
| Cannot login user {userName}@{ipAddress}: no permission | Critical | System | All |
| No datastores have been configured on the host {host.name} | Informational | System | All |
| A required license {feature.featureName} is not reserved | Critical | System | All |
| Unable to automatically migrate {vm.name} from {host.name} | Informational | System | All |
| Non-VI workload detected on datastore {datastore.name} | Critical | System | All |
| Not enough resources to failover {vm.name} in {computeResource.name} in {datacenter.name} | Informational | System | All |
| The vNetwork Distributed Switch configuration on some hosts differed from that of the vCenter Server. | Warning | System | All |
| Permission created for {principal} on {entity.name}, role is {role.name}, propagation is {[email protected]} | Informational | System | All |
| Permission rule removed for {principal} on {entity.name} | Informational | System | All |
| Permission changed for {principal} on {entity.name}, role is {role.name}, propagation is {[email protected]} | Informational | System | All |
| Profile {profile.name} attached. | Informational | System | All |
| Profile {profile.name} was changed. | Informational | System | All |
| Profile is created. | Informational | System | All |
| Profile {profile.name} detached. | Informational | System | All |
| Profile {profile.name} reference host changed. | Informational | System | All |
| Profile was removed. | Informational | System | All |
| Remote Tech Support Mode (SSH) for the host {host.name} has been enabled | Informational | System | All |
| Created resource pool {resourcePool.name} in compute-resource {computeResource.name} in {datacenter.name} | Informational | System | All |
| Removed resource pool {resourcePool.name} on {computeResource.name} in {datacenter.name} | Informational | System | All |
| Moved resource pool {resourcePool.name} from {oldParent.name} to {newParent.name} on {computeResource.name} in {datacenter.name} | Informational | System | All |
| Updated configuration for {resourcePool.name} in compute-resource {computeResource.name} in {datacenter.name} | Informational | System | All |
| Resource usage exceeds configuration for resource pool {resourcePool.name} in compute-resource {computeResource.name} in {datacenter.name} | Critical | System | All |
| New role {role.name} created | Informational | System | All |
| Role {role.name} removed | Informational | System | All |
| Modifed role {role.name} | Informational | System | All |
| Task {scheduledTask.name} on {entity.name} in {datacenter.name} completed successfully | Informational | System | All |
| Created task {scheduledTask.name} on {entity.name} in {datacenter.name} | Informational | System | All |
| Task {scheduledTask.name} on {entity.name} in {datacenter.name} sent email to {to} | Informational | System | All |
| Task {scheduledTask.name} on {entity.name} in {datacenter.name} cannot send email to {to}: {reason.msg} | Critical | System | All |
| Task {scheduledTask.name} on {entity.name} in {datacenter.name} cannot be completed: {reason.msg} | Critical | System | All |
| Reconfigured task {scheduledTask.name} on {entity.name} in {datacenter.name} | Informational | System | All |
| Removed task {scheduledTask.name} on {entity.name} in {datacenter.name} | Informational | System | All |
| Running task {scheduledTask.name} on {entity.name} in {datacenter.name} | Informational | System | All |
| A vCenter Server license has expired | Critical | System | All |
| vCenter started | Informational | System | All |
| A session for user '{terminatedUsername}' has stopped | Informational | System | All |
| Task: {info.descriptionId} | Informational | System | All |
| Task: {info.descriptionId} time-out | Informational | System | All |
| Upgrading template {legacyTemplate} | Informational | System | All |
| Cannot upgrade template {legacyTemplate} due to: {reason.msg} | Informational | System | All |
| Template {legacyTemplate} upgrade completed | Informational | System | All |
| The operation performed on {host.name} in {datacenter.name} timed out | Warning | System | All |
| There are {unlicensed} unlicensed virtual machines on host {host} - there are only {available} licenses available | Informational | System | All |
| {unlicensed} unlicensed virtual machines found on host {host} | Informational | System | All |
| The agent on host {host.name} is updated and will soon restart | Informational | System | All |
| User {userLogin} was added to group {group} | Informational | System | All |
| User {userName}@{ipAddress} logged in | Informational | System | All |
| User {userName} logged out | Informational | System | All |
| Password was changed for account {userLogin} on host {host.name} | Informational | System | All |
| User {userLogin} removed from group {group} | Informational | System | All |
| {message} | Informational | System | All |
| Created VMFS datastore {datastore.name} on {host.name} in {datacenter.name} | Informational | System | All |
| Expanded VMFS datastore {datastore.name} on {host.name} in {datacenter.name} | Informational | System | All |
| Extended VMFS datastore {datastore.name} on {host.name} in {datacenter.name} | Informational | System | All |
| A vMotion license for {host.name} has expired | Critical | System | All |
| Cannot uninstall vCenter agent from {host.name} in {datacenter.name}. {[email protected]} | Critical | System | All |
| vCenter agent has been uninstalled from {host.name} in {datacenter.name} | Informational | System | All |
| Cannot upgrade vCenter agent on {host.name} in {datacenter.name}. {[email protected]} | Critical | System | All |
| vCenter agent has been upgraded on {host.name} in {datacenter.name} | Informational | System | All |
| VIM account password was changed on host {host.name} | Informational | System | All |
| Remote console to {vm.name} on {host.name} in {datacenter.name} has been opened | Informational | System | All |
| A ticket for {vm.name} of type {ticketType} on {host.name} in {datacenter.name} has been acquired | Informational | System | All |
| Invalid name for {vm.name} on {host.name} in {datacenter.name}. Renamed from {oldName} to {newName} | Informational | System | All |
| Cloning {vm.name} on host {host.name} in {datacenter.name} to {destName} on host {destHost.name} | Informational | System | All |
| Cloning {vm.name} on host {host.name} in {datacenter.name} to {destName} on host {destHost.name} | Informational | System | All |
| Creating {vm.name} on host {host.name} in {datacenter.name} | Informational | System | All |
| Deploying {vm.name} on host {host.name} in {datacenter.name} from template {srcTemplate.name} | Informational | System | All |
| Migrating {vm.name} from {host.name} to {destHost.name} in {datacenter.name} | Informational | System | All |
| Relocating {vm.name} from {host.name} to {destHost.name} in {datacenter.name} | Informational | System | All |
| Relocating {vm.name} in {datacenter.name} from {host.name} to {destHost.name} | Informational | System | All |
| Cannot clone {vm.name}: {reason.msg} | Critical | System | All |
| Clone of {sourceVm.name} completed | Informational | System | All |
| Configuration file for {vm.name} on {host.name} in {datacenter.name} cannot be found | Informational | System | All |
| Virtual machine {vm.name} is connected | Informational | System | All |
| Created virtual machine {vm.name} on {host.name} in {datacenter.name} | Informational | System | All |
| dvPort connected to VM {vm.name} on {host.name} in {datacenter.name} changed status | Informational | System | All |
| {vm.name} on {host.name} in cluster {computeResource.name} in {datacenter.name} reset by HA. Reason: {[email protected]} | Informational | System | All |
| {vm.name} on {host.name} in cluster {computeResource.name} in {datacenter.name} reset by HA. Reason: {[email protected]}. A screenshot is saved at {screenshotFilePath}. | Informational | System | All |
| Cannot reset {vm.name} on {host.name} in cluster {computeResource.name} in {datacenter.name} | Warning | System | All |
| Unable to update HA agents given the state of {vm.name} | Critical | System | All |
| HA agents have been updated with the current state of the virtual machine | Informational | System | All |
| Disconnecting all hosts as the date of virtual machine {vm.name} has been rolled back | Critical | System | All |
| Cannot deploy template: {reason.msg} | Critical | System | All |
| Template {srcTemplate.name} deployed on host {host.name} | Informational | System | All |
| {vm.name} on host {host.name} in {datacenter.name} is disconnected | Informational | System | All |
| Discovered {vm.name} on {host.name} in {datacenter.name} | Informational | System | All |
| Cannot create virtual disk {disk} | Critical | System | All |

| Migrating {vm.name} off host {host.name} in {datacenter.name} | Informational | System | All |

| End a recording session on {vm.name} | Informational | System | All |

| End a replay session on {vm.name} | Informational | System | All |

| Cannot migrate {vm.name} from {host.name} to {destHost.name} in {datacenter.name} | Critical | System | All |

| Cannot complete relayout {vm.name} on {host.name} in {datacenter.name}: {reason.msg} | Critical | System | All |

| Cannot complete relayout for virtual machine {vm.name} which has disks on a VMFS2 volume. | Critical | System | All |

| vCenter cannot start the Secondary VM {vm.name}. Reason: {[email protected]} | Critical | System | All |

| Cannot power Off {vm.name} on {host.name} in {datacenter.name}: {reason.msg} | Critical | System | All |

| Cannot power On {vm.name} on {host.name} in {datacenter.name}. {reason.msg} | Critical | System | All |

| Cannot reboot the guest OS for {vm.name} on {host.name} in {datacenter.name}. {reason.msg} | Critical | System | All |

| Cannot suspend {vm.name} on {host.name} in {datacenter.name}: {reason.msg} | Critical | System | All |

| {vm.name} cannot shut down the guest OS on {host.name} in {datacenter.name}: {reason.msg} | Critical | System | All |

| {vm.name} cannot standby the guest OS on {host.name} in {datacenter.name}: {reason.msg} | Critical | System | All |

| Cannot suspend {vm.name} on {host.name} in {datacenter.name}: {reason.msg} | Critical | System | All |

| vCenter cannot update the Secondary VM {vm.name} configuration | Critical | System | All |

| Failover unsuccessful for {vm.name} on {host.name} in cluster {computeResource.name} in {datacenter.name}. Reason: {reason.msg} | Warning | System | All |

| Fault Tolerance state on {vm.name} changed from {[email protected]} to {[email protected]} | Informational | System | All |

| Fault Tolerance protection has been turned off for {vm.name} | Informational | System | All |

| The Fault Tolerance VM ({vm.name}) has been terminated. {[email protected]} | Informational | System | All |

| Guest OS reboot for {vm.name} on {host.name} in {datacenter.name} | Informational | System | All |

| Guest OS shut down for {vm.name} on {host.name} in {datacenter.name} | Informational | System | All |

| Guest OS standby for {vm.name} on {host.name} in {datacenter.name} | Informational | System | All |

| VM monitoring state in {computeResource.name} in {datacenter.name} changed to {[email protected]} | Informational | System | All |

| Assign a new instance UUID ({instanceUuid}) to {vm.name} | Informational | System | All |

| The instance UUID of {vm.name} has been changed from ({oldInstanceUuid}) to ({newInstanceUuid}) | Informational | System | All |

| The instance UUID ({instanceUuid}) of {vm.name} conflicts with the instance UUID assigned to {conflictedVm.name} | Critical | System | All |

| New MAC address ({mac}) assigned to adapter {adapter} for {vm.name} | Informational | System | All |

| Changed MAC address from {oldMac} to {newMac} for adapter {adapter} for {vm.name} | Warning | System | All |

| The MAC address ({mac}) of {vm.name} conflicts with MAC assigned to {conflictedVm.name} | Critical | System | All |

| Reached maximum Secondary VM (with FT turned On) restart count for {vm.name} on {host.name} in cluster {computeResource.name} in {datacenter.name}. | Warning | System | All |

| Reached maximum VM restart count for {vm.name} on {host.name} in cluster {computeResource.name} in {datacenter.name}. | Warning | System | All |

| Error message on {vm.name} on {host.name} in {datacenter.name}: {message} | Critical | System | All |

| Message on {vm.name} on {host.name} in {datacenter.name}: {message} | Informational | System | All |

| Warning message on {vm.name} on {host.name} in {datacenter.name}: {message} | Warning | System | All |

| Migration of virtual machine {vm.name} from {sourceHost.name} to {host.name} completed | Informational | System | All |

| No compatible host for the Secondary VM {vm.name} | Critical | System | All |

| Not all networks for {vm.name} are accessible by {destHost.name} | Warning | System | All |

| {vm.name} does not exist on {host.name} in {datacenter.name} | Warning | System | All |

| {vm.name} was powered Off on the isolated host {isolatedHost.name} in cluster {computeResource.name} in {datacenter.name} | Informational | System | All |

| {vm.name} on {host.name} in {datacenter.name} is powered off | Informational | System | All |

| {vm.name} on {host.name} in {datacenter.name} is powered on | Informational | System | All |

| Virtual machine {vm.name} powered On with vNICs connected to dvPorts that have a port level configuration, which might be different from the dvPort group configuration. | Informational | System | All |

| VM ({vm.name}) failed over to {host.name}. {[email protected]} | Critical | System | All |

| Reconfigured {vm.name} on {host.name} in {datacenter.name} | Informational | System | All |

| Registered {vm.name} on {host.name} in {datacenter.name} | Informational | System | All |

| Relayout of {vm.name} on {host.name} in {datacenter.name} completed | Informational | System | All |

| {vm.name} on {host.name} in {datacenter.name} is in the correct format and relayout is not necessary | Informational | System | All |

| {vm.name} on {host.name} reloaded from new configuration {configPath}. | Informational | System | All |

| {vm.name} on {host.name} could not be reloaded from {configPath}. | Critical | System | All |

| Cannot relocate virtual machine '{vm.name}' in {datacenter.name} | Critical | System | All |

| Completed the relocation of the virtual machine | Informational | System | All |

| Remote console connected to {vm.name} on host {host.name} | Informational | System | All |

| Remote console disconnected from {vm.name} on host {host.name} | Informational | System | All |

| Removed {vm.name} on {host.name} from {datacenter.name} | Informational | System | All |

| Renamed {vm.name} from {oldName} to {newName} in {datacenter.name} | Warning | System | All |

| {vm.name} on {host.name} in {datacenter.name} is reset | Informational | System | All |

| Moved {vm.name} from resource pool {oldParent.name} to {newParent.name} in {datacenter.name} | Informational | System | All |

| Changed resource allocation for {vm.name} | Informational | System | All |

| Virtual machine {vm.name} was restarted on {host.name} since {sourceHost.name} failed | Informational | System | All |

| {vm.name} on {host.name} in {datacenter.name} is resumed | Informational | System | All |

| A Secondary VM has been added for {vm.name} | Informational | System | All |

| vCenter disabled Fault Tolerance on VM '{vm.name}' because the Secondary VM could not be powered On. | Critical | System | All |

| Disabled Secondary VM for {vm.name} | Informational | System | All |

| Enabled Secondary VM for {vm.name} | Informational | System | All |

| Started Secondary VM for {vm.name} | Informational | System | All |

| {vm.name} was shut down on the isolated host {isolatedHost.name} in cluster {computeResource.name} in {datacenter.name}: {[email protected]} | Informational | System | All |

| Start a recording session on {vm.name} | Informational | System | All |

| Start a replay session on {vm.name} | Informational | System | All |

| {vm.name} on host {host.name} in {datacenter.name} is starting | Informational | System | All |

| Starting Secondary VM for {vm.name} | Informational | System | All |

| The static MAC address ({mac}) of {vm.name} conflicts with MAC assigned to {conflictedVm.name} | Critical | System | All |

| {vm.name} on {host.name} in {datacenter.name} is stopping | Informational | System | All |

| {vm.name} on {host.name} in {datacenter.name} is suspended | Informational | System | All |

| {vm.name} on {host.name} in {datacenter.name} is being suspended | Informational | System | All |

| Starting the Secondary VM {vm.name} timed out within {timeout} ms | Critical | System | All |

| Unsupported guest OS {guestId} for {vm.name} on {host.name} in {datacenter.name} | Warning | System | All |

| Virtual hardware upgraded to version {version} | Informational | System | All |

| Cannot upgrade virtual hardware | Critical | System | All |

| Upgrading virtual hardware on {vm.name} in {datacenter.name} to version {version} | Informational | System | All |

| Assigned new BIOS UUID ({uuid}) to {vm.name} on {host.name} in {datacenter.name} | Informational | System | All |

| Changed BIOS UUID from {oldUuid} to {newUuid} for {vm.name} on {host.name} in {datacenter.name} | Warning | System | All |

| BIOS ID ({uuid}) of {vm.name} conflicts with that of {conflictedVm.name} | Critical | System | All |

| New WWNs assigned to {vm.name} | Informational | System | All |

| WWNs are changed for {vm.name} | Warning | System | All |

| The WWN ({wwn}) of {vm.name} conflicts with the currently registered WWN | Critical | System | All |

| {message} | Warning | System | All |

| Booting from iSCSI failed with an error. See the VMware Knowledge Base for information on configuring iBFT networking. | Warning | System | All |

| Event ID | Event | Severity | Event type |

|---|---|---|---|

| com.vmware.license.AddLicenseEvent | License {licenseKey} added to VirtualCenter | Informational | System |

| com.vmware.license.AssignLicenseEvent | License {licenseKey} assigned to asset {entityName} with id {entityId} | Informational | System |

| com.vmware.license.DLFDownloadFailedEvent | Failed to download license information from the host {hostname} due to {errorReason.@enum.com.vmware.license.DLFDownloadFailedEvent.DLFDownloadFailedReason} | Warning | System |

| com.vmware.license.LicenseAssignFailedEvent | License assignment on the host fails. Reasons: {[email protected]}. | Informational | System |

| com.vmware.license.LicenseExpiryEvent | Your host license will expire in {remainingDays} days. The host will be disconnected from VC when its license expires. | Warning | System |

| com.vmware.license.LicenseUserThresholdExceededEvent | Current license usage ({currentUsage} {costUnitText}) for {edition} exceeded the user-defined threshold ({threshold} {costUnitText}) | Warning | System |

| com.vmware.license.RemoveLicenseEvent | License {licenseKey} removed from VirtualCenter | Informational | System |

| com.vmware.license.UnassignLicenseEvent | License unassigned from asset {entityName} with id {entityId} | Informational | System |

| com.vmware.vc.HA.ClusterFailoverActionCompletedEvent | HA completed a failover action in cluster {computeResource.name} in datacenter {datacenter.name} | Informational | System |

| com.vmware.vc.HA.ClusterFailoverActionInitiatedEvent | HA initiated a failover action in cluster {computeResource.name} in datacenter {datacenter.name} | Warning | System |

| com.vmware.vc.HA.DasAgentRunningEvent | HA Agent on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} is running | Informational | System |

| com.vmware.vc.HA.DasFailoverHostFailedEvent | HA failover host {host.name} in cluster {computeResource.name} in {datacenter.name} has failed | Critical | System |

| com.vmware.vc.HA.DasHostCompleteDatastoreFailureEvent | All shared datastores failed on the host {hostName} in cluster {computeResource.name} in {datacenter.name} | Critical | System |

| com.vmware.vc.HA.DasHostCompleteNetworkFailureEvent | All VM networks failed on the host {hostName} in cluster {computeResource.name} in {datacenter.name} | Critical | System |

| com.vmware.vc.HA.DasHostFailedEvent | A possible host failure has been detected by HA on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} | Critical | System |

| com.vmware.vc.HA.DasHostMonitoringDisabledEvent | No virtual machine failover will occur until Host Monitoring is enabled in cluster {computeResource.name} in {datacenter.name} | Warning | System |

| com.vmware.vc.HA.DasTotalClusterFailureEvent | HA recovered from a total cluster failure in cluster {computeResource.name} in datacenter {datacenter.name} | Warning | System |

| com.vmware.vc.HA.HostDasAgentHealthyEvent | HA Agent on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} is healthy | Informational | System |

| com.vmware.vc.HA.HostDasErrorEvent | HA agent on {host.name} in cluster {computeResource.name} in {datacenter.name} has an error: {[email protected]} | Critical | System |

| com.vmware.vc.VCHealthStateChangedEvent | vCenter Service overall health changed from '{oldState}' to '{newState}' | Informational | System |

| com.vmware.vc.cim.CIMGroupHealthStateChanged | Health of [data.group] changed from [data.oldState] to [data.newState]. | Informational | System |

| com.vmware.vc.datastore.UpdateVmFilesFailedEvent | Failed to update VM files on datastore {ds.name} using host {hostName} | Critical | System |

| com.vmware.vc.datastore.UpdatedVmFilesEvent | Updated VM files on datastore {ds.name} using host {hostName} | Informational | System |

| com.vmware.vc.datastore.UpdatingVmFilesEvent | Updating VM files on datastore {ds.name} using host {hostName} | Informational | System |

| com.vmware.vc.ft.VmAffectedByDasDisabledEvent | VMware HA has been disabled in cluster {computeResource.name} of datacenter {datacenter.name}. HA will not restart VM {vm.name} or its Secondary VM after a failure. | Warning | System |

| com.vmware.vc.npt.VmAdapterEnteredPassthroughEvent | Network passthrough is active on adapter {deviceLabel} of virtual machine {vm.name} on host {host.name} in {datacenter.name} | Informational | System |

| com.vmware.vc.npt.VmAdapterExitedPassthroughEvent | Network passthrough is inactive on adapter {deviceLabel} of virtual machine {vm.name} on host {host.name} in {datacenter.name} | Informational | System |

| com.vmware.vc.vcp.FtDisabledVmTreatAsNonFtEvent | HA VM Component Protection protects virtual machine {vm.name} on {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} as non-FT virtual machine because the FT state is disabled | Informational | System |

| com.vmware.vc.vcp.FtFailoverEvent | FT Primary VM {vm.name} on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} is going to fail over to Secondary VM due to component failure | Informational | System |

| com.vmware.vc.vcp.FtFailoverFailedEvent | FT virtual machine {vm.name} on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} failed to failover to secondary | Critical | System |

| com.vmware.vc.vcp.FtSecondaryRestartEvent | HA VM Component Protection is restarting FT secondary virtual machine {vm.name} on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} due to component failure | Informational | System |

| com.vmware.vc.vcp.FtSecondaryRestartFailedEvent | FT Secondary VM {vm.name} on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} failed to restart | Critical | System |

| com.vmware.vc.vcp.NeedSecondaryFtVmTreatAsNonFtEvent | HA VM Component Protection protects virtual machine {vm.name} on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} as non-FT virtual machine because it has been in the needSecondary state too long | Informational | System |

| com.vmware.vc.vcp.TestEndEvent | VM Component Protection test ends on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} | Informational | System |

| com.vmware.vc.vcp.TestStartEvent | VM Component Protection test starts on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} | Informational | System |

| com.vmware.vc.vcp.VcpNoActionEvent | HA VM Component Protection did not take action on virtual machine {vm.name} on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} due to the feature configuration setting | Informational | System |

| com.vmware.vc.vcp.VmDatastoreFailedEvent | Virtual machine {vm.name} on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} lost access to {datastore} | Critical | System |

| com.vmware.vc.vcp.VmNetworkFailedEvent | Virtual machine {vm.name} on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} lost access to {network} | Critical | System |

| com.vmware.vc.vcp.VmPowerOffHangEvent | HA VM Component Protection could not power off virtual machine {vm.name} on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} successfully after trying {numTimes} times and will keep trying | Critical | System |

| com.vmware.vc.vcp.VmRestartEvent | HA VM Component Protection is restarting virtual machine {vm.name} due to component failure on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} | Informational | System |

| com.vmware.vc.vcp.VmRestartFailedEvent | Virtual machine {vm.name} affected by component failure on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} failed to restart | Critical | System |

| com.vmware.vc.vcp.VmWaitForCandidateHostEvent | HA VM Component Protection could not find a destination host for virtual machine {vm.name} on host {host.name} in cluster {computeResource.name} in datacenter {datacenter.name} after waiting {numSecWait} seconds and will keep trying | Critical | System |

| com.vmware.vc.vmam.AppMonitoringNotSupported | Application monitoring is not supported on {host.name} in cluster {computeResource.name} in {datacenter.name} | Warning | System |

| com.vmware.vc.vmam.VmAppHealthMonitoringStateChangedEvent | Application heartbeat status changed to {status} for {vm.name} on {host.name} in cluster {computeResource.name} in {datacenter.name} | Warning | System |

| com.vmware.vc.vmam.VmDasAppHeartbeatFailedEvent | Application heartbeat failed for {vm.name} on {host.name} in cluster {computeResource.name} in {datacenter.name} | Warning | System |

| esx.clear.net.connectivity.restored | Network connectivity restored on virtual switch {1}, portgroups: {2}. Physical NIC {3} is up. | Informational | System |

| esx.clear.net.dvport.connectivity.restored | Network connectivity restored on DVPorts: {1}. Physical NIC {2} is up. | Informational | System |

| esx.clear.net.dvport.redundancy.restored | Uplink redundancy restored on DVPorts: {1}. Physical NIC {2} is up. | Informational | System |

| esx.clear.net.redundancy.restored | Uplink redundancy restored on virtual switch {1}, portgroups: {2}. Physical NIC {3} is up. | Informational | System |

| esx.clear.net.vmnic.linkstate.up | Physical NIC {1} linkstate is up. | Informational | System |

| esx.clear.storage.connectivity.restored | Connectivity to storage device {1} (Datastores: {2}) restored. Path {3} is active again. | Informational | System |

| esx.clear.storage.redundancy.restored | Path redundancy to storage device {1} (Datastores: {2}) restored. Path {3} is active again. | Informational | System |

| esx.problem.apei.bert.memory.error.corrected | A corrected memory error occurred in last boot. The following details were reported. Physical Addr: {1}, Physical Addr Mask: {2}, Node: {3}, Card: {4}, Module: {5}, Bank: {6}, Device: {7}, Row: {8}, Column: {9} Error type: {10} | Critical | System |

| esx.problem.apei.bert.memory.error.fatal | A fatal memory error occurred in the last boot. The following details were reported. Physical Addr: {1}, Physical Addr Mask: {2}, Node: {3}, Card: {4}, Module: {5}, Bank: {6}, Device: {7}, Row: {8}, Column: {9} Error type: {10} | Critical | System |

| esx.problem.apei.bert.memory.error.recoverable | A recoverable memory error occurred in last boot. The following details were reported. Physical Addr: {1}, Physical Addr Mask: {2}, Node: {3}, Card: {4}, Module: {5}, Bank: {6}, Device: {7}, Row: {8}, Column: {9} Error type: {10} | Critical | System |

| esx.problem.apei.bert.pcie.error.corrected | A corrected PCIe error occurred in last boot. The following details were reported. Port Type: {1}, Device: {2}, Bus #: {3}, Function: {4}, Slot: {5}, Device Vendor: {6}, Version: {7}, Command Register: {8}, Status Register: {9}. | Critical | System |

| esx.problem.apei.bert.pcie.error.fatal | Platform encountered a fatal PCIe error in last boot. The following details were reported. Port Type: {1}, Device: {2}, Bus #: {3}, Function: {4}, Slot: {5}, Device Vendor: {6}, Version: {7}, Command Register: {8}, Status Register: {9}. | Critical | System |

| esx.problem.apei.bert.pcie.error.recoverable | A recoverable PCIe error occurred in last boot. The following details were reported. Port Type: {1}, Device: {2}, Bus #: {3}, Function: {4}, Slot: {5}, Device Vendor: {6}, Version: {7}, Command Register: {8}, Status Register: {9}. | Critical | System |

| esx.problem.iorm.nonviworkload | An external I/O activity is detected on datastore {1}, this is an unsupported configuration. Consult the Resource Management Guide or follow the Ask VMware link for more information. | Informational | System |

| esx.problem.net.connectivity.lost | Lost network connectivity on virtual switch {1}. Physical NIC {2} is down. Affected portgroups:{3}. | Critical | System |

| esx.problem.net.dvport.connectivity.lost | Lost network connectivity on DVPorts: {1}. Physical NIC {2} is down. | Critical | System |

| esx.problem.net.dvport.redundancy.degraded | Uplink redundancy degraded on DVPorts: {1}. Physical NIC {2} is down. | Warning | System |

| esx.problem.net.dvport.redundancy.lost | Lost uplink redundancy on DVPorts: {1}. Physical NIC {2} is down. | Warning | System |

| esx.problem.net.e1000.tso6.notsupported | Guest-initiated IPv6 TCP Segmentation Offload (TSO) packets ignored. Manually disable TSO inside the guest operating system in virtual machine {1}, or use a different virtual adapter. | Critical | System |

| esx.problem.net.migrate.bindtovmk | The ESX advanced configuration option /Migrate/Vmknic is set to an invalid vmknic: {1}. /Migrate/Vmknic specifies a vmknic that vMotion binds to for improved performance. Update the configuration option with a valid vmknic. Alternatively, if you do not want vMotion to bind to a specific vmknic, remove the invalid vmknic and leave the option blank. | Warning | System |

| esx.problem.net.proxyswitch.port.unavailable | Virtual NIC with hardware address {1} failed to connect to distributed virtual port {2} on switch {3}. There are no more ports available on the host proxy switch. | Warning | System |

| esx.problem.net.redundancy.degraded | Uplink redundancy degraded on virtual switch {1}. Physical NIC {2} is down. Affected portgroups:{3}. | Warning | System |

| esx.problem.net.redundancy.lost | Lost uplink redundancy on virtual switch {1}. Physical NIC {2} is down. Affected portgroups:{3}. | Warning | System |

| esx.problem.net.uplink.mtu.failed | VMkernel failed to set the MTU value {1} on the uplink {2}. | Warning | System |

| esx.problem.net.vmknic.ip.duplicate | A duplicate IP address was detected for {1} on the interface {2}. The current owner is {3}. | Warning | System |

| esx.problem.net.vmnic.linkstate.down | Physical NIC {1} linkstate is down. | Informational | System |

| esx.problem.net.vmnic.watchdog.reset | Uplink {1} has recovered from a transient failure due to watchdog timeout | Informational | System |

| esx.problem.scsi.device.limitreached | The maximum number of supported devices of {1} has been reached. A device from plugin {2} could not be created. | Critical | System |

| esx.problem.scsi.device.thinprov.atquota | Space utilization on thin-provisioned device {1} exceeded configured threshold. Affected datastores (if any): {2}. | Warning | System |

| esx.problem.scsi.scsipath.limitreached | The maximum number of supported paths of {1} has been reached. Path {2} could not be added. | Critical | System |

| esx.problem.storage.connectivity.devicepor | Frequent PowerOn Reset Unit Attentions are occurring on device {1}. This might indicate a storage problem. Affected datastores: {2} | Warning | System |

| esx.problem.storage.connectivity.lost | Lost connectivity to storage device {1}. Path {2} is down. Affected datastores: {3}. | Critical | System |

| esx.problem.storage.connectivity.pathpor | Frequent PowerOn Reset Unit Attentions are occurring on path {1}. This might indicate a storage problem. Affected device: {2}. Affected datastores: {3} | Warning | System |

| esx.problem.storage.connectivity.pathstatechanges | Frequent path state changes are occurring for path {1}. This might indicate a storage problem. Affected device: {2}. Affected datastores: {3} | Warning | System |

| esx.problem.storage.redundancy.degraded | Path redundancy to storage device {1} degraded. Path {2} is down. Affected datastores: {3}. | Warning | System |

| esx.problem.storage.redundancy.lost | Lost path redundancy to storage device {1}. Path {2} is down. Affected datastores: {3}. | Warning | System |

| esx.problem.vmfs.heartbeat.recovered | Successfully restored access to volume {1} ({2}) following connectivity issues. | Informational | System |

| esx.problem.vmfs.heartbeat.timedout | Lost access to volume {1} ({2}) due to connectivity issues. Recovery attempt is in progress and outcome will be reported shortly. | Informational | System |

| esx.problem.vmfs.heartbeat.unrecoverable | Lost connectivity to volume {1} ({2}) and subsequent recovery attempts have failed. | Critical | System |

| esx.problem.vmfs.journal.createfailed | No space for journal on volume {1} ({2}). Opening volume in read-only metadata mode with limited write support. | Critical | System |

| esx.problem.vmfs.lock.corruptondisk | At least one corrupt on-disk lock was detected on volume {1} ({2}). Other regions of the volume might be damaged too. | Critical | System |

| esx.problem.vmfs.nfs.mount.connect.failed | Failed to mount to the server {1} mount point {2}. {3} | Critical | System |

| esx.problem.vmfs.nfs.mount.limit.exceeded | Failed to mount to the server {1} mount point {2}. {3} | Critical | System |

| esx.problem.vmfs.nfs.server.disconnect | Lost connection to server {1} mount point {2} mounted as {3} ({4}). | Critical | System |

| esx.problem.vmfs.nfs.server.restored | Restored connection to server {1} mount point {2} mounted as {3} ({4}). | Informational | System |

| esx.problem.vmfs.resource.corruptondisk | At least one corrupt resource metadata region was detected on volume {1} ({2}). Other regions of the volume might be damaged too. | Critical | System |

| esx.problem.vmfs.volume.locked | Volume on device {1} locked, possibly because remote host {2} encountered an error during a volume operation and could not recover. | Critical | System |

| vim.event.LicenseDowngradedEvent | License downgrade: {licenseKey} removes the following features: {lostFeatures} | Warning | System |

| vprob.net.connectivity.lost | Lost network connectivity on virtual switch {1}. Physical NIC {2} is down. Affected portgroups:{3}. | Critical | System |

| vprob.net.e1000.tso6.notsupported | Guest-initiated IPv6 TCP Segmentation Offload (TSO) packets ignored. Manually disable TSO inside the guest operating system in virtual machine {1}, or use a different virtual adapter. | Critical | System |

| vprob.net.migrate.bindtovmk | The ESX advanced config option /Migrate/Vmknic is set to an invalid vmknic: {1}. /Migrate/Vmknic specifies a vmknic that vMotion binds to for improved performance. Please update the config option with a valid vmknic or, if you do not want vMotion to bind to a specific vmknic, remove the invalid vmknic and leave the option blank. | Warning | System |

| vprob.net.proxyswitch.port.unavailable | Virtual NIC with hardware address {1} failed to connect to distributed virtual port {2} on switch {3}. There are no more ports available on the host proxy switch. | Warning | System |

| vprob.net.redundancy.degraded | Uplink redundancy degraded on virtual switch {1}. Physical NIC {2} is down. {3} uplinks still up. Affected portgroups:{4}. | Warning | System |

| vprob.net.redundancy.lost | Lost uplink redundancy on virtual switch {1}. Physical NIC {2} is down. Affected portgroups:{3}. | Warning | System |

| vprob.scsi.device.thinprov.atquota | Space utilization on thin-provisioned device {1} exceeded configured threshold. | Warning | System |

| vprob.storage.connectivity.lost | Lost connectivity to storage device {1}. Path {2} is down. Affected datastores: {3}. | Critical | System |

| vprob.storage.redundancy.degraded | Path redundancy to storage device {1} degraded. Path {2} is down. {3} remaining active paths. Affected datastores: {4}. | Warning | System |

| vprob.storage.redundancy.lost | Lost path redundancy to storage device {1}. Path {2} is down. Affected datastores: {3}. | Warning | System |

| vprob.vmfs.heartbeat.recovered | Successfully restored access to volume {1} ({2}) following connectivity issues. | Informational | System |

| vprob.vmfs.heartbeat.timedout | Lost access to volume {1} ({2}) due to connectivity issues. Recovery attempt is in progress and outcome will be reported shortly. | Informational | System |

| vprob.vmfs.heartbeat.unrecoverable | Lost connectivity to volume {1} ({2}) and subsequent recovery attempts have failed. | Critical | System |

| vprob.vmfs.journal.createfailed | No space for journal on volume {1} ({2}). Opening volume in read-only metadata mode with limited write support. | Critical | System |

| vprob.vmfs.lock.corruptondisk | At least one corrupt on-disk lock was detected on volume {1} ({2}). Other regions of the volume may be damaged too. | Critical | System |

| vprob.vmfs.nfs.server.disconnect | Lost connection to server {1} mount point {2} mounted as {3} ({4}). | Critical | System |

| vprob.vmfs.nfs.server.restored | Restored connection to server {1} mount point {2} mounted as {3} ({4}). | Informational | System |

| vprob.vmfs.resource.corruptondisk | At least one corrupt resource metadata region was detected on volume {1} ({2}). Other regions of the volume might be damaged too. | Critical | System |

| vprob.vmfs.volume.locked | Volume on device {1} locked, possibly because remote host {2} encountered an error during a volume operation and could not recover. | Critical | System |
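The messages in the table are templates: named placeholders such as `{vm.name}` and positional placeholders such as `{1}` are replaced by real values when VMware raises the event. One way to use the table is to match the text of an incoming event against a template and read off its severity. The following is a minimal sketch under stated assumptions: events arrive as plain message strings, only a handful of rows are loaded (the list is illustrative), and the helper names `template_to_regex` and `classify` are hypothetical, not part of Pandora FMS or the vSphere API. Each template becomes a regular expression in which every placeholder turns into a capture group:

```python
import re

# Illustrative subset of rows copied from the table: (message template, severity).
# A real lookup would load every row; this list is an assumption for the sketch.
EVENT_TEMPLATES = [
    ("Cannot clone {vm.name}: {reason.msg}", "Critical"),
    ("Clone of {sourceVm.name} completed", "Informational"),
    ("Not all networks for {vm.name} are accessible by {destHost.name}", "Warning"),
    ("Physical NIC {1} linkstate is down.", "Informational"),
    ("Lost connectivity to storage device {1}. Path {2} is down. Affected datastores: {3}.", "Critical"),
]

# Both named ({vm.name}) and positional ({1}) placeholders are wrapped in braces.
PLACEHOLDER = re.compile(r"\{[^{}]+\}")


def template_to_regex(template):
    """Escape the literal text of a template and turn each placeholder into a lazy capture group."""
    literal_parts = PLACEHOLDER.split(template)
    pattern = "(.*?)".join(re.escape(part) for part in literal_parts)
    return re.compile(pattern, re.DOTALL)


# Compile every template once, keeping the original text and its severity.
COMPILED = [(template_to_regex(t), t, sev) for t, sev in EVENT_TEMPLATES]


def classify(message):
    """Return (template, severity, placeholder values) for the first template that
    matches the whole message, or None if no template matches."""
    for regex, template, severity in COMPILED:
        match = regex.fullmatch(message)
        if match:
            return template, severity, match.groups()
    return None


if __name__ == "__main__":
    print(classify("Physical NIC vmnic2 linkstate is down."))
    print(classify("Cannot clone web01: Insufficient disk space"))
```

Matching is done with `fullmatch`, so a template only applies when it covers the entire message. Templates made of a single placeholder, such as the two `{message}` rows, would match any text and cannot be told apart by wording alone; the same applies to rows whose templates are identical. Where an event type identifier is available, as in the rows that list one, looking up the severity by identifier is more reliable than matching the message text.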