Configuration

The plugin uses several Spark REST API endpoints. To reach them from the plugin, the following ports must be open and not blocked by the firewall:

firewall-cmd --permanent --zone=public --add-port=6066/tcp

firewall-cmd --permanent --zone=public --add-port=7077/tcp

firewall-cmd --permanent --zone=public --add-port=8080-8081/tcp

firewall-cmd --permanent --zone=public --add-port=4040/tcp

firewall-cmd --permanent --zone=public --add-port=18080/tcp

firewall-cmd --reload

6066: REST URL (cluster mode).

7077: Master server.

8080-8081: Web UI (master on 8080, workers on 8081).

4040: For running applications.

18080: For the history server.
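The firewall rules above can also be generated in a loop. The following is a minimal sketch and not part of the plugin: the function name `open_spark_ports` and its `apply` argument are hypothetical, and by default the function only prints the commands so they can be reviewed first.

```shell
# Ports from the list above; 8080-8081 is a range.
SPARK_PORTS="6066 7077 8080-8081 4040 18080"

# Print the firewall-cmd calls (default) or run them when $1 is "apply".
# The function name and the "apply" switch are assumptions of this sketch.
open_spark_ports() {
    mode=${1:-print}
    for port in $SPARK_PORTS; do
        cmd="firewall-cmd --permanent --zone=public --add-port=${port}/tcp"
        if [ "$mode" = "apply" ]; then $cmd; else echo "$cmd"; fi
    done
    # Reload so that the permanent rules take effect.
    if [ "$mode" = "apply" ]; then
        firewall-cmd --reload
    else
        echo "firewall-cmd --reload"
    fi
}
```

Calling `open_spark_ports` prints the six commands; `open_spark_ports apply` runs them and will normally need root privileges.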

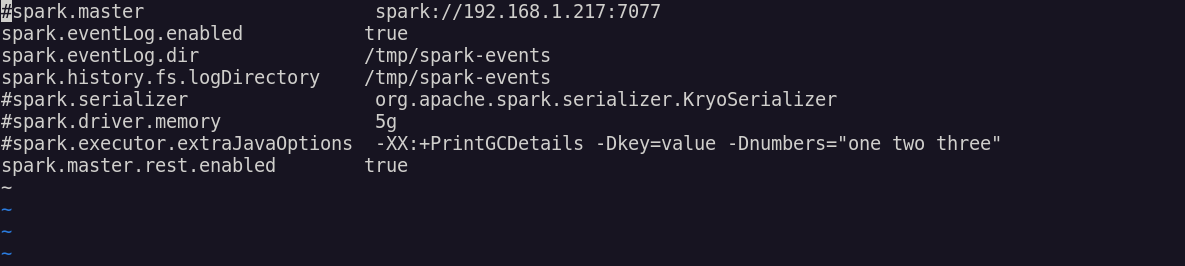

To use the history server, we have to enable spark.eventLog.enabled and set spark.eventLog.dir and spark.history.fs.logDirectory in spark-defaults.conf.

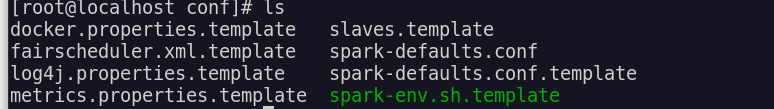

You can find a configuration template in /conf.

We will create the file in that path with:

vi spark-defaults.conf

Leave it as shown below. You can choose the path where the events are saved.

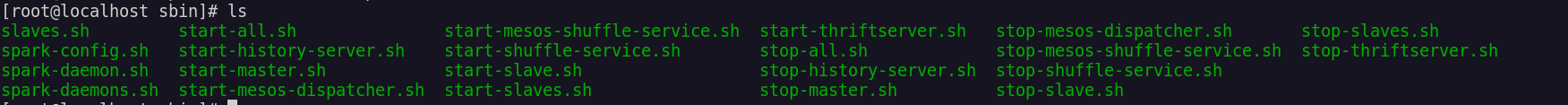
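For reference, the three settings can also be appended from the shell instead of an editor. This is a sketch under assumptions: the helper name `write_history_conf` is hypothetical, and the event directory is whatever path you choose (`/tmp/spark-events` in the usage line is only an example).

```shell
# Append the three history-server settings to a spark-defaults.conf file.
# $1: path to spark-defaults.conf
# $2: absolute path of the event log directory (your choice)
# The helper name is an assumption of this sketch.
write_history_conf() {
    conf_file=$1
    event_dir=$2
    cat >> "$conf_file" <<EOF
spark.eventLog.enabled           true
spark.eventLog.dir               file://${event_dir}
spark.history.fs.logDirectory    file://${event_dir}
EOF
}
```

A call such as `write_history_conf spark-defaults.conf /tmp/spark-events` adds the three lines. The event directory generally has to exist before applications or the history server are started, so creating it beforehand with `mkdir -p` is a sensible precaution.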

Now we can start the history server from /sbin, the same path from which the master, workers, etc. are started.

We will start it with:

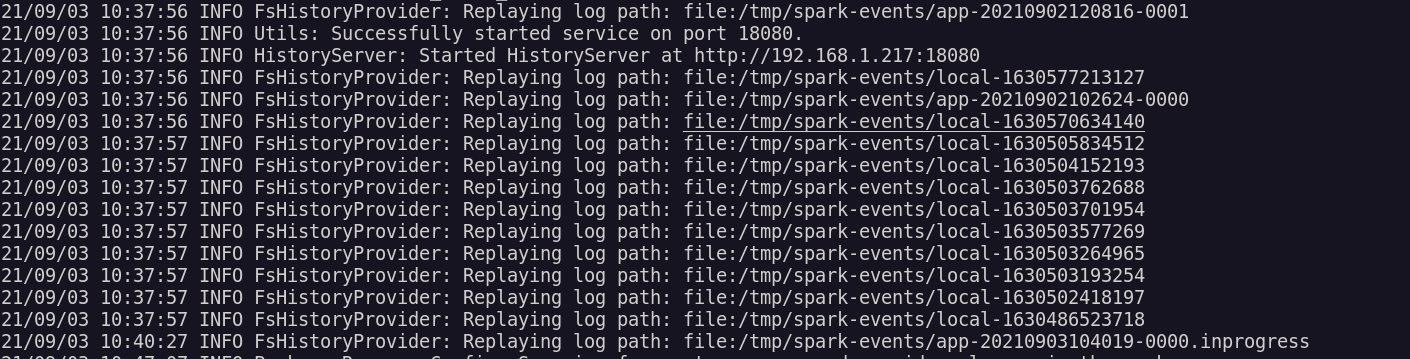

./start-history-server.sh

If we open the log file named in the command output, we will see that the server has started correctly, along with its URL.

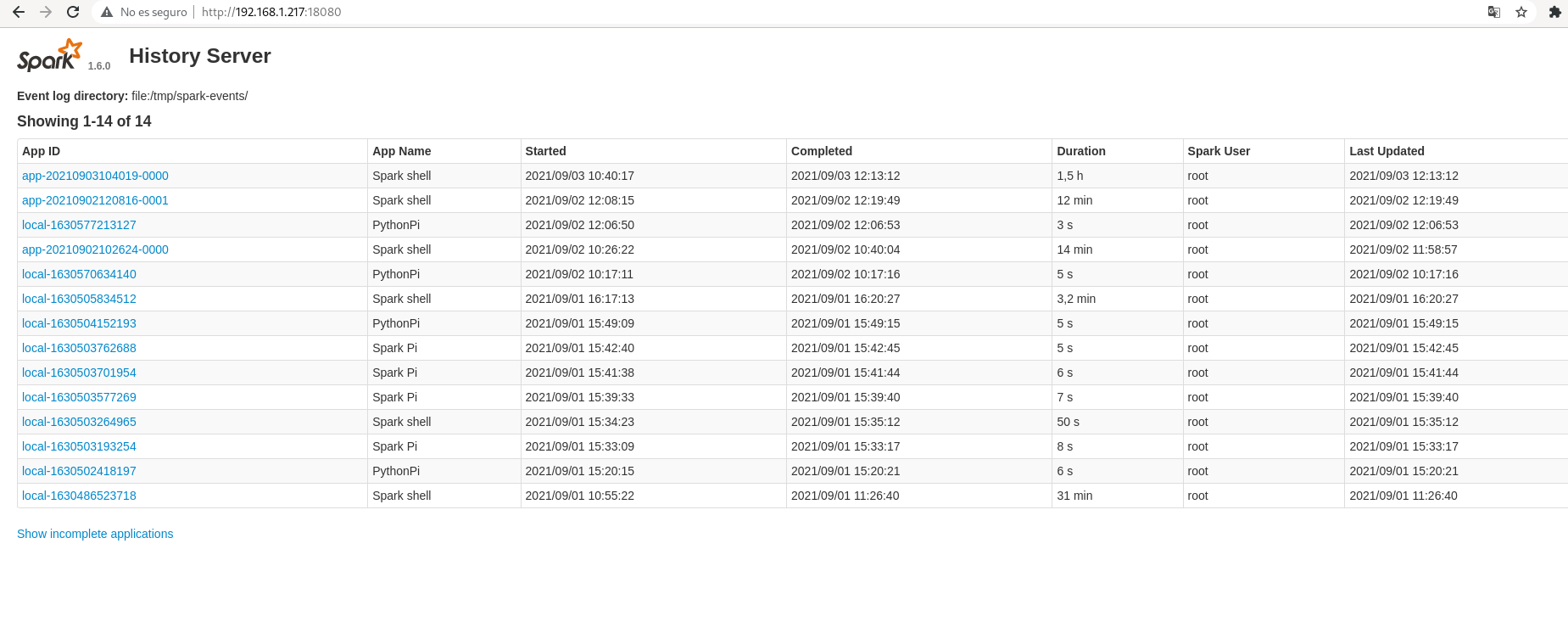

If we access that URL we will see the history server.
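Besides the web page, the history server exposes Spark's monitoring REST API under `/api/v1`, so the same port can be checked from the command line. The sketch below assumes `curl` is installed; the function names `spark_api_url` and `list_applications` are hypothetical helpers, not part of Spark or the plugin.

```shell
# Build the base URL of the Spark monitoring REST API.
# $1: host, $2: port (defaults to 18080, the history server port).
spark_api_url() {
    printf 'http://%s:%s/api/v1\n' "$1" "${2:-18080}"
}

# List the applications known to the server as JSON.
# Needs curl and a reachable server, so it is only defined here, not run.
list_applications() {
    curl -s "$(spark_api_url "$1" "$2")/applications"
}
```

For example, `list_applications localhost` should return a JSON array of applications if the history server is up and port 18080 is reachable.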

Note:

It should go without saying, but for the plugin to work the master server must be active, and there must be applications that are running or that have already finished, since the metrics are taken from the applications, specifically from their executors.
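Spark's monitoring REST API lists per-application executor data at `/api/v1/applications/[app-id]/executors`. Assuming this is the kind of data the plugin reads, the following sketch builds that URL so you can confirm it returns data before running the plugin. The helper name `executors_url` and the application ID in the usage line are hypothetical.

```shell
# Build the URL that lists the executors of one application.
# $1: host
# $2: port (4040 for a running application, 18080 for the history server)
# $3: application ID, as returned by /api/v1/applications
executors_url() {
    printf 'http://%s:%s/api/v1/applications/%s/executors\n' "$1" "$2" "$3"
}
```

With curl installed, `curl -s "$(executors_url localhost 18080 app-20240101120000-0000)"` would fetch the executor list for that (made-up) application ID.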